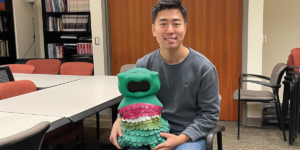

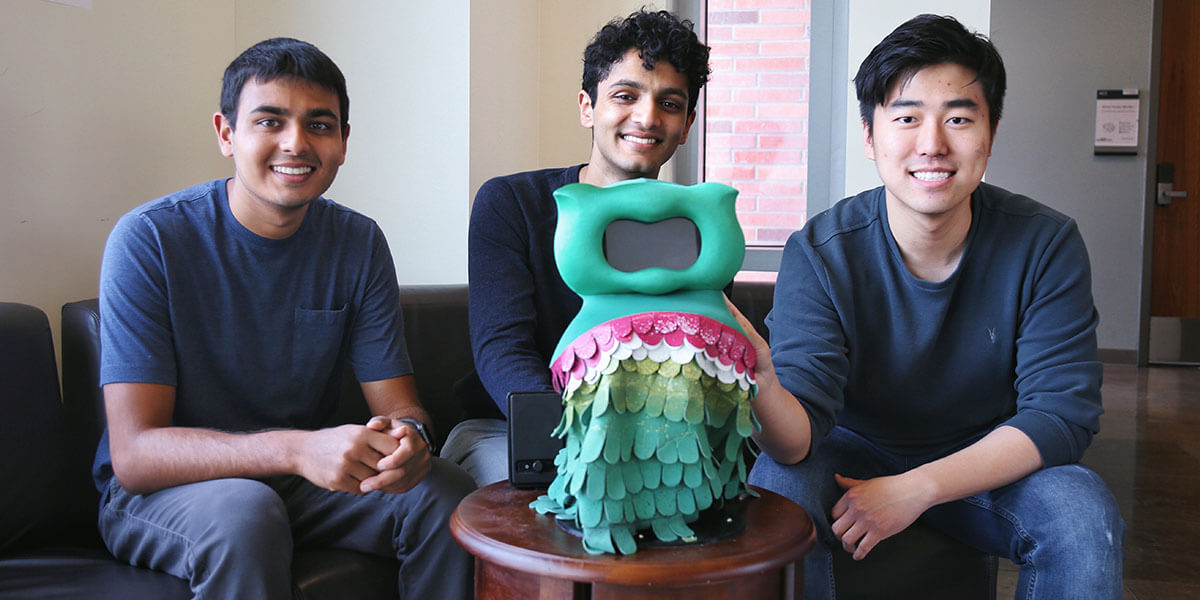

(Left – right) Lead author Shomik Jain with co-authors Kartik Mahajan and Zhonghao Shi, all students in Professor Maja Matarić’s Interaction Lab, with Kiwi the socially assistive robot. Photo/Haotian Mai.

Many children with autism face developmental delays, including communication and behavioral challenges and difficulties with social interaction. This makes learning new skills a major challenge, especially in traditional school environments.

Previous research suggests socially assistive robots can help children with autism learn. But these therapeutic interventions work best if the robot can accurately interpret the child’s behavior and react appropriately.

Now, researchers at USC’s Department of Computer Science have developed personalized learning robots for children with autism. They also studied whether the robots could use machine learning to estimate a child’s interest in a task.

In one of the largest studies of its kind, the researchers placed a socially assistive robot in the homes of 17 children with autism for one month. The robots personalized their instruction and feedback to each child’s unique learning patterns during the interventions.

After the study was completed, the researchers also analyzed engagement data from seven of the participants and determined that the robot could have autonomously detected whether or not a child was engaged with 90% accuracy. The results of the experiments were published in the journals “Frontiers in Robotics and AI” and “Science Robotics” on Nov. 6 and Feb. 26, respectively.

Making robots smarter

Robots are limited in their ability to autonomously recognize and respond to behavioral cues, especially in atypical users and real-world environments. This study is the first to model the learning patterns and engagement of children with autism in a long-term, in-home setting.

“Current robotic systems are very rigid,” said lead author Shomik Jain, a progressive degree mathematics student advised by socially assistive robotics pioneer Professor Maja Matarić.

“If you think of a real learning environment, the teacher is going to learn things about the child, and the child will learn things from them. It’s a bidirectional process, and that doesn’t happen with current robotic systems. This study aims to make robots smarter by understanding the child’s behavior and responding to it in real time.”

The researchers stress the goal is to augment human therapy, not replace it.

“Human therapists are crucial, but they may not always be available or affordable for families,” said Kartik Mahajan, an undergraduate student in computer science and study co-author. “That’s where socially assistive robots like this come in.”

Above: the study advances in-home robots that adapt to the individual needs of children with autism. Video/National Science Foundation.

Enhancing the learning experience

The research was funded by a multi-university National Science Foundation (NSF) Expeditions in Computing grant led at USC by Matarić, with Gigi Ragusa, a USC Viterbi professor of engineering education, serving as co-PI and providing key domain expertise. The research team placed Kiwi the robot in the homes of 17 children with autism. Ragusa recruited, screened and assessed the child participants, aged 3 to 7, and their families from the greater Los Angeles area.

During almost daily interventions, the children played space-themed math games on a tablet while Kiwi, a 2-foot-tall robot dressed like a green feathered bird, provided instruction and feedback.

Kiwi’s feedback and the games’ difficulty were personalized in real-time according to each child’s unique learning patterns. Matarić’s team in the USC Interaction Lab accomplished this using reinforcement learning, a rapidly growing subfield of artificial intelligence (AI).

The algorithms monitored each child’s performance on the math games. For instance, if a child answered correctly, Kiwi would say something like, “Good job!” If they got a question wrong, Kiwi might offer helpful tips for solving the problem and adjust the difficulty and feedback in future games. The goal was to make the problems as challenging as possible without pushing the learner into making too many mistakes.

“If you have no idea what the child’s ability level is, you just throw a bunch of varying problems at them and it’s not good for their engagement or learning,” said Jain.

“But if the robot is able to find an appropriate level of difficulty for the problems, then that can really enhance the learning experience.”
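The article does not say which reinforcement-learning algorithm the team used. As a rough illustration only, the sketch below swaps in an epsilon-greedy multi-armed bandit, one of the simplest reinforcement-learning formulations, to pick a difficulty level for the next problem. The class name, the reward rule (harder problems earn more when solved, every mistake costs a point) and all parameter values are hypothetical assumptions, not the study’s design.

```python
import random


class DifficultyBandit:
    """Hypothetical epsilon-greedy bandit over difficulty levels.

    Illustrative only: not the algorithm used in the USC study.
    """

    def __init__(self, levels, epsilon=0.1, seed=None):
        self.levels = list(levels)  # candidate difficulty levels, e.g. 1..5
        self.epsilon = epsilon      # chance of trying a random level (exploration)
        self.counts = {lvl: 0 for lvl in self.levels}
        self.values = {lvl: 0.0 for lvl in self.levels}  # mean reward seen per level
        self.rng = random.Random(seed)

    def choose(self):
        # Explore occasionally; otherwise exploit the level with the best mean reward.
        # Ties go to the earliest level in the list, i.e. the easiest one.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.levels)
        return max(self.levels, key=lambda lvl: self.values[lvl])

    @staticmethod
    def reward(level, correct):
        # Assumed reward: harder problems pay more when solved, and every mistake
        # costs a point, so the best level is challenging but still mostly solvable.
        return float(level) if correct else -1.0

    def update(self, level, correct):
        r = self.reward(level, correct)
        self.counts[level] += 1
        # Incremental running mean of the reward for this level.
        self.values[level] += (r - self.values[level]) / self.counts[level]


if __name__ == "__main__":
    # Simulated learner (made-up numbers): accuracy falls as difficulty rises.
    p_correct = {1: 0.95, 2: 0.9, 3: 0.8, 4: 0.4, 5: 0.1}
    bandit = DifficultyBandit(levels=p_correct, epsilon=0.1, seed=0)
    learner = random.Random(1)
    for _ in range(2000):
        level = bandit.choose()
        bandit.update(level, learner.random() < p_correct[level])
    print("most-practiced level:", max(bandit.counts, key=bandit.counts.get))
```

With these made-up accuracies, the expected reward per level works out to 0.9, 1.7, 2.2, 1.0 and -0.4, so level 3 is the one the bandit should tend to settle on: hard enough to be worth more, easy enough to avoid frequent mistakes.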

The ultimate frontier

There’s a popular saying among people with autism and their families: If you have met one person with autism, you have met one person with autism.

“Autism is the ultimate frontier for robotic personalization, because as anyone who knows about autism will tell you, every individual has a constellation of symptoms and different severities of each symptom,” said Matarić, Chan Soon-Shiong Distinguished Professor of Computer Science, Neuroscience, and Pediatrics and Interim Vice President of Research.

This presents a particular challenge for machine learning, which usually relies on spotting consistent patterns in huge amounts of similar data. That’s why personalization is so important.

“If we take a cue from a child, we can achieve so much more than just following a script,” said Matarić. “Normal AI approaches fail with autism. AI methods require a lot of similar data and that just isn’t possible with autism, where heterogeneity reigns.”

The researchers tackled this problem in their analysis of the children’s engagement after the intervention. Computer models of engagement were developed by combining many types of data, including eye gaze and head pose, audio pitch and frequency, and performance on the task.

Making these algorithms work using real-world data presented a major challenge, given the accompanying noise and unpredictability.

“This experiment was right in the center of their learning experience,” said Mahajan, who helped install the robots in the children’s homes.

“There were cats jumping on the robot, a blender going off in the kitchen, and people coming in and out of the room.” As such, the machine learning algorithms had to be sophisticated enough to focus on pertinent information related to the therapy session and dismiss environmental “noise.”
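The article does not describe the engagement models in detail. One common way to combine several data streams is early fusion: compute a few features per time window from each stream, place them in a single vector, and train a classifier on labeled windows. The sketch below does this with a from-scratch logistic regression. The feature names, units and toy data are invented for illustration, the choice of logistic regression is an assumption, and nothing here handles the real-world noise described above.

```python
import math


def standardize(rows):
    """Z-score each feature column; returns scaled rows plus the means/stds used."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = []
    for c, m in zip(cols, means):
        sd = math.sqrt(sum((x - m) ** 2 for x in c) / len(c))
        stds.append(sd if sd > 0 else 1.0)  # avoid dividing by zero for constant features
    return [scale(r, means, stds) for r in rows], means, stds


def scale(row, means, stds):
    return [(x - m) / s for x, m, s in zip(row, means, stds)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(X, y, lr=0.1, epochs=500):
    """Plain batch gradient descent on the logistic (cross-entropy) loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_engaged(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5


# Toy, made-up windows. Each row fuses one value per modality:
# [fraction of gaze on task, head turn away (deg), voice pitch (Hz), fraction correct]
windows = [
    [0.90, 5, 220, 0.8], [0.80, 10, 210, 0.9], [0.85, 8, 230, 0.7],  # engaged
    [0.20, 60, 200, 0.3], [0.10, 75, 190, 0.2], [0.30, 50, 240, 0.4],  # not engaged
]
labels = [1, 1, 1, 0, 0, 0]

X, means, stds = standardize(windows)
w, b = train_logreg(X, labels)
new_window = [0.95, 3, 215, 0.9]
print("engaged" if predict_engaged(w, b, scale(new_window, means, stds)) else "not engaged")
```

Standardizing first keeps features with large raw values, such as pitch in hertz, from dominating features that live between 0 and 1.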

Improving human-robot interaction

Assessments were conducted before and after the month-long interventions. While the researchers expected to see some improvements in participants, the results surpassed their expectations. At the end of the month-long intervention, 100% of the participants demonstrated improved math skills, while 92% also improved in social skills, according to Ragusa, who conducted all child assessments for the research.

In post-experiment analyses, the researchers were also able to glean some other interesting information from the data that could give us a peek into the recipe for ideal child-robot interactions.

The study observed higher engagement for all participants shortly after the robot had spoken. Specifically, participants were engaged about 70% of the time when the robot had spoken in the previous minute, but less than 50% of the time when the robot had not spoken for more than a minute.
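To make that comparison concrete, here is a minimal sketch of how such a split could be computed from annotated session data. The data format, the function name and the choice to measure time from the start of each robot utterance are assumptions for illustration; the toy numbers are not the study’s data.

```python
from bisect import bisect_right


def engagement_by_recency(samples, speech_times, window_s=60.0):
    """Split engagement rate by whether the robot spoke within `window_s` seconds.

    samples: list of (time_s, engaged) pairs, e.g. one per annotated second.
    speech_times: sorted list of times (s) at which the robot started speaking.
    Returns {"recent": rate, "not_recent": rate}; a rate is None if its bucket is empty.
    """
    counts = {"recent": [0, 0], "not_recent": [0, 0]}  # [engaged, total]
    for t, engaged in samples:
        i = bisect_right(speech_times, t)  # number of utterances at or before time t
        recent = i > 0 and t - speech_times[i - 1] <= window_s
        bucket = counts["recent" if recent else "not_recent"]
        bucket[0] += int(engaged)
        bucket[1] += 1
    return {k: (e / n if n else None) for k, (e, n) in counts.items()}


# Made-up annotations: the robot spoke at t = 0 s and t = 200 s.
samples = [(10, True), (30, True), (50, False), (100, False), (150, True), (210, True)]
print(engagement_by_recency(samples, speech_times=[0, 200]))
# -> {'recent': 0.75, 'not_recent': 0.5}
```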

While a personalized model for every user is ideal, the researchers also determined it was possible to achieve adequate results using engagement models trained on data from other users.
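A common way to test whether a model trained on other users works for a new one is leave-one-user-out evaluation: hold out each child’s data in turn, train on everyone else, and score on the held-out child. The article does not specify the exact protocol, so the sketch below is only an illustration; the single-feature threshold model, the function names and the data are hypothetical.

```python
from collections import defaultdict


def leave_one_user_out(records):
    """Yield (user, train, test): each user's windows are held out in turn."""
    by_user = defaultdict(list)
    for rec in records:
        by_user[rec[0]].append(rec)
    for user in sorted(by_user):
        test = by_user[user]
        train = [r for u, recs in by_user.items() if u != user for r in recs]
        yield user, train, test


def accuracy(threshold, data):
    # Predict "engaged" when the single feature is at or above the threshold.
    return sum((f >= threshold) == bool(y) for _, f, y in data) / len(data)


def fit_threshold(train):
    # Pick the candidate threshold with the best accuracy on the training users.
    return max(sorted({f for _, f, _ in train}), key=lambda t: accuracy(t, train))


# Made-up (user, gaze-on-task fraction, engaged) windows for three children.
records = [
    ("A", 0.9, 1), ("A", 0.2, 0),
    ("B", 0.8, 1), ("B", 0.3, 0),
    ("C", 0.7, 1), ("C", 0.4, 0),
]
results = {user: accuracy(fit_threshold(train), test)
           for user, train, test in leave_one_user_out(records)}
print(results)  # -> {'A': 1.0, 'B': 1.0, 'C': 0.5}
```

In this toy example, the threshold learned from children A and B is too strict for child C, which is the kind of gap a personalized model could close.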

Moreover, the study found that caregivers had to intervene only when a child lost interest for a longer period of time. In contrast, participants usually re-engaged on their own after shorter periods of disinterest. This suggests robotic systems should focus on counteracting longer periods of disengagement.
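One way a robot might act on this finding is to let brief lapses pass and respond only once disengagement has lasted for a while. The sketch below illustrates that idea. The class name, the one-estimate-per-step input and the threshold value are hypothetical; the article does not say what threshold or response would be appropriate.

```python
class DisengagementMonitor:
    """Hypothetical trigger that ignores brief lapses and reacts to long ones.

    Feed it one engagement estimate per time step (e.g. per second). It returns True
    exactly once per lapse, at the step where the lapse reaches `min_steps` in a row.
    """

    def __init__(self, min_steps):
        self.min_steps = min_steps
        self.run = 0  # consecutive disengaged steps so far

    def step(self, engaged):
        self.run = 0 if engaged else self.run + 1
        return self.run == self.min_steps


# Made-up stream with a short lapse (2 steps) and a longer one (4 steps).
stream = [False, False, True, False, False, False, False, True]
monitor = DisengagementMonitor(min_steps=3)
print([i for i, e in enumerate(stream) if monitor.step(e)])  # -> [5]
```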

The research team will continue to study the data gathered from the experiment. One active sub-project involves analyzing and modeling the children’s cognitive-affective states, including emotions such as confusion or excitement. The project, led by computer science progressive degree student Zhonghao Shi, aims to design affect-aware socially assistive robot tutors that are even more sensitive to the emotions and moods of their users in the context of learning.

“The hope is that future studies in this lab and elsewhere can take all the things that we’ve learned and hopefully design more engaging and personalized human-robot interactions,” said Jain.

Published on February 26th, 2020

Last updated on March 11th, 2020