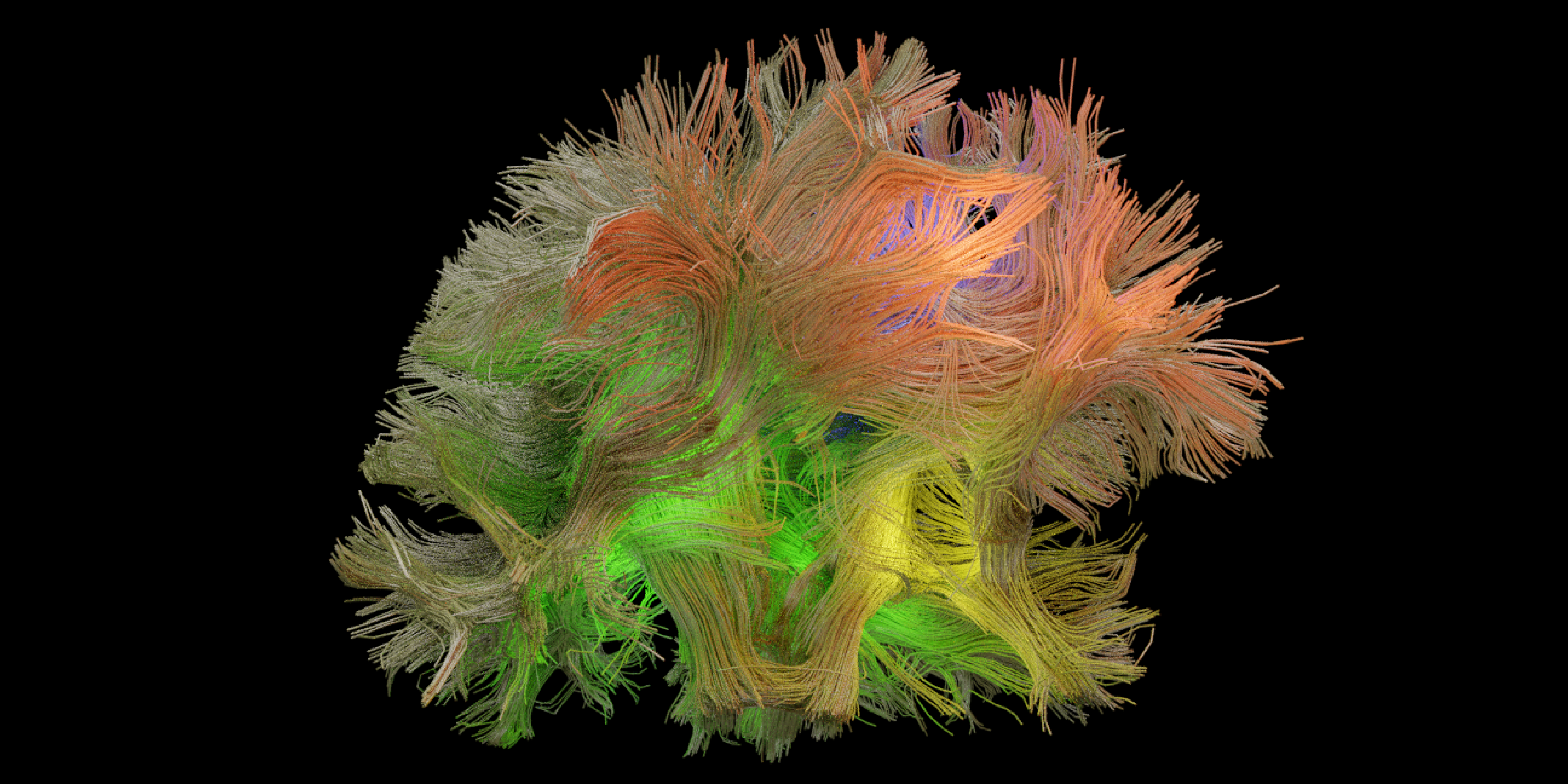

Brain with its fibers, showing its immense complexity. The new machine learning method can isolate patterns in brain signals that relate to a specific behavior and decode the behavior much better than previous models. Credit: Omid Sani & Maryam Shanechi, Shanechi Lab

At any given moment, our brain is involved in many activities at once. For example, when we type on a keyboard, our brain dictates not only our finger movements but also how thirsty we feel. As a result, brain signals contain dynamic neural patterns that reflect a combination of these activities simultaneously. A long-standing challenge has been isolating the patterns in brain signals that relate to a specific behavior, such as finger movements. Further, developing brain-machine interfaces (BMIs) that help people with neurological and mental disorders requires translating brain signals into a specific behavior, a problem called decoding. Decoding also depends on our ability to isolate neural patterns related to specific behaviors, which can be masked by patterns related to other activities and missed by standard algorithms.

Led by Maryam Shanechi, Assistant Professor and Viterbi Early Career Chair in Electrical and Computer Engineering at the USC Viterbi School of Engineering, researchers have developed a machine learning algorithm that resolves this challenge. The algorithm, published in Nature Neuroscience, uncovered neural patterns missed by other methods and enhanced the decoding of behaviors that originate from signals in the brain. It is a significant advance in the modeling and decoding of complex brain activity, which could both enable new neuroscience discoveries and enhance future brain-machine interfaces.

Standard algorithms, says Shanechi, can miss some neural patterns related to a given behavior that are masked by patterns related to other functions happening simultaneously. Shanechi and her PhD student Omid Sani developed a machine learning algorithm to resolve this challenge.

Shanechi, the paper’s lead senior author, says, “We have developed an algorithm that, for the first time, can dissociate the dynamic patterns in brain signals that relate to specific behaviors one is interested in. Our algorithm was also much better at decoding these behaviors from the brain signals.”

The researchers showed that their machine learning algorithm can find neural patterns that are missed by other methods. This is because, unlike prior methods, which consider only brain signals when searching for neural patterns, the new algorithm can consider both brain signals and behavioral signals such as the speed of arm movements. In doing so, says Sani, the study’s first author, the algorithm discovered the patterns common to the brain and behavioral signals and was much better able to decode the behavior represented by brain signals. More generally, he adds, the algorithm can model common dynamic patterns between any signals, for example between signals from different brain regions or signals in fields beyond neuroscience.
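To make the idea concrete, here is a toy sketch in Python. It is not the published algorithm. It is a static, linear stand-in that ignores the temporal dynamics the real method models, and every name and number in it (`MIXING`, `simulate`, the four channels, the noise levels) is a hypothetical choice made for illustration. It simulates recordings in which a weak behavior-related pattern is masked by a much stronger unrelated one, then compares a search that looks only at the neural signals (the top principal component) with one that also looks at the behavior (a least-squares fit).

```python
import numpy as np

# Hypothetical mixing of two hidden patterns into 4 recorded channels.
# The two rows are orthogonal so the toy example stays easy to reason about.
MIXING = np.array([
    [1.0, 1.0, 1.0, 1.0],    # pattern related to the behavior
    [1.0, -1.0, 1.0, -1.0],  # pattern related to some other activity
])


def simulate(n_samples, rng):
    """Return (neural, behavior): a weak related pattern masked by a strong one."""
    related = rng.standard_normal(n_samples)
    unrelated = 5.0 * rng.standard_normal(n_samples)  # dominates the variance
    latents = np.column_stack([related, unrelated])
    neural = latents @ MIXING + 0.1 * rng.standard_normal((n_samples, 4))
    behavior = related + 0.1 * rng.standard_normal(n_samples)
    return neural, behavior


def neural_only_direction(neural):
    """Top principal component: the strongest pattern in the neural data alone."""
    centered = neural - neural.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[0]


def behavior_aware_direction(neural, behavior):
    """Least-squares weights: the neural direction that best predicts behavior."""
    centered = neural - neural.mean(axis=0)
    weights, *_ = np.linalg.lstsq(centered, behavior - behavior.mean(), rcond=None)
    return weights


def decoding_correlation(direction, neural, behavior):
    """Correlation between the projected neural signal and the behavior."""
    projection = neural @ direction
    return float(np.corrcoef(projection, behavior)[0, 1])


def compare(seed=0, n_samples=2000):
    """Fit both directions on training data and score them on held-out data."""
    rng = np.random.default_rng(seed)
    train_neural, train_behavior = simulate(n_samples, rng)
    test_neural, test_behavior = simulate(n_samples, rng)
    pca_dir = neural_only_direction(train_neural)
    aware_dir = behavior_aware_direction(train_neural, train_behavior)
    neural_only = abs(decoding_correlation(pca_dir, test_neural, test_behavior))
    behavior_aware = decoding_correlation(aware_dir, test_neural, test_behavior)
    return neural_only, behavior_aware


if __name__ == "__main__":
    neural_only, behavior_aware = compare()
    print(f"neural-only correlation:    {neural_only:.2f}")
    print(f"behavior-aware correlation: {behavior_aware:.2f}")
```

In this simulation the neural-only search locks onto the stronger, unrelated pattern and decodes the behavior poorly, while the behavior-aware fit recovers the masked pattern. That is the intuition behind using both kinds of signal, shown here only under these simplified assumptions.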

To test the new algorithm, the study’s authors, who include Shanechi’s PhD students Omid Sani and Hamidreza Abbaspourazad, as well as Bijan Pesaran, Professor of Neural Science at NYU, and Yan Wong, a former postdoc at NYU, relied on four existing datasets collected in the Pesaran Lab. The datasets were based on recorded changes in neural activity during the performance of different arm and eye movement tasks.

In the future, this new algorithm could be used to develop enhanced brain-machine interfaces that help paralyzed patients by significantly improving the decoding of movement or speech generated by brain signals, thus translating these signals into specific, desired behaviors such as body movements. This could allow a paralyzed patient to move a robotic arm, or to generate speech, merely by thinking about it. In addition, this algorithm could help patients with intractable mental health conditions such as major depression by separating out brain signals related to mood symptoms and allowing real-time tracking of these symptoms (an approach outlined in Shanechi’s previous studies). The tracked symptoms could then be used as feedback to tailor a therapy to a patient’s needs.

Shanechi adds, “By isolating dynamic neural patterns relevant to different brain functions, this machine learning algorithm can help us investigate basic questions about brain’s functions and develop enhanced brain-machine interfaces to restore lost function in neurological and mental disorders.”

This research was primarily funded by the Army Research Office as part of a collaboration between the US Department of Defense, the UK Ministry of Defence (MOD) and the UK Engineering and Physical Sciences Research Council under the Multidisciplinary University Research Initiative (MURI) led by Shanechi. The research was also supported by the Office of Naval Research (ONR) Young Investigator Program, a National Science Foundation CAREER Award and the US National Institutes of Health.

Published on November 9th, 2020
