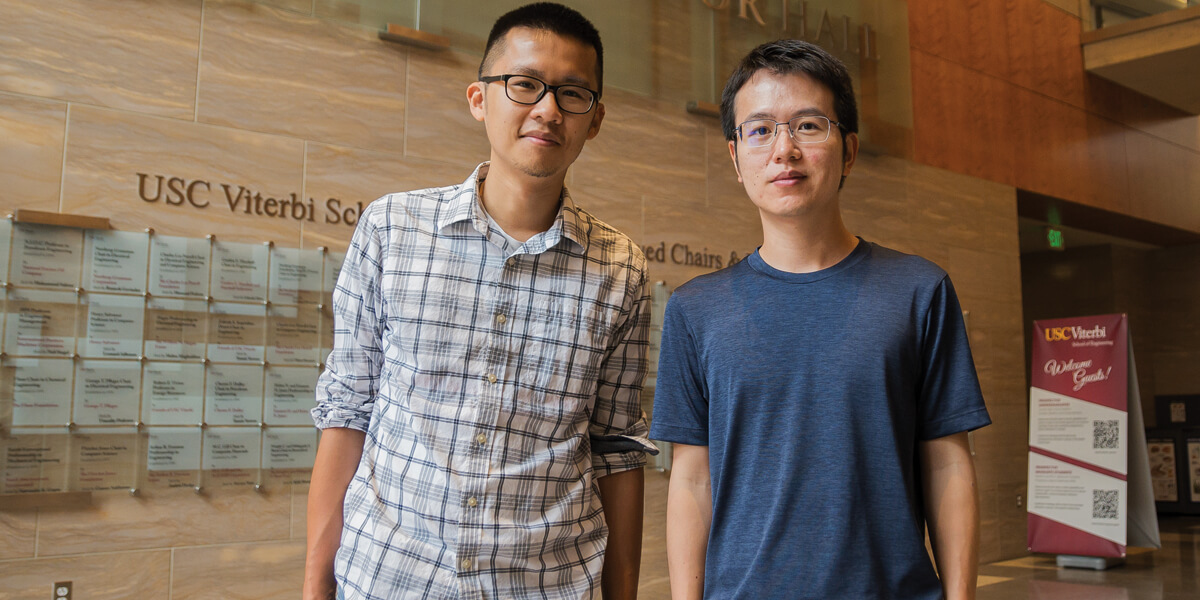

Professor Haipeng Luo (right) and Ph.D. student Chen-Yu Wei won the best paper award at the 2021 Annual Conference on Learning Theory (COLT). Photo/Aaron John Balana.

Haipeng Luo, an assistant professor of computer science, and Chen-Yu Wei, a Ph.D. student, have received a best paper award for research presented at the Annual Conference on Learning Theory (COLT) 2021, the flagship conference on machine learning theory. Their paper, titled “Non-stationary Reinforcement Learning without Prior Knowledge: An Optimal Black-box Approach,” outlines a simple approach that solves a complex problem in reinforcement learning (RL): applying RL algorithms to non-stationary environments.


RL allows an AI system to improve its strategy over time by interacting with its environment and learning from feedback, much as a player improves at a game. The system uses trial and error, receiving rewards or penalties for the actions it takes, and must work out for itself, without human input, which strategy maximizes its total reward.
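To make the trial-and-error loop concrete, here is a minimal Python sketch of one of the simplest RL settings: a multi-armed bandit learned with an epsilon-greedy rule. The function name `epsilon_greedy`, the hidden reward probabilities, and the 10% exploration rate are illustrative assumptions; this is not code from Luo and Wei's paper.

```python
import random


def epsilon_greedy(true_means, steps=5000, epsilon=0.1, seed=0):
    """Illustrative sketch: learn from reward feedback alone which action pays best.

    true_means[a] is the (hidden) probability that action a earns a reward of 1;
    the learner never sees these numbers, only the rewards it receives.
    """
    rng = random.Random(seed)
    n_actions = len(true_means)
    counts = [0] * n_actions       # how often each action has been tried
    estimates = [0.0] * n_actions  # running average reward of each action
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < true_means[action] else 0.0
        counts[action] += 1
        # Incremental update of the running average for the chosen action
        estimates[action] += (reward - estimates[action]) / counts[action]
        total_reward += reward
    return estimates, total_reward


estimates, total = epsilon_greedy([0.2, 0.5, 0.8])
print([round(e, 2) for e in estimates], total)
```

Occasional random choices (the `epsilon` branch) let the learner discover better actions, while greedy choices collect reward from what it already knows. Balancing the two is the exploration-exploitation trade-off that Luo mentions below.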

Recently, advances in computing have opened the way to promising new applications of RL, including autonomous robots, self-driving cars, recommendation systems, and industrial automation. But applying RL to real-world systems poses many critical challenges. One key problem: to date, most of RL's successes have been limited to “stationary” environments, such as a fixed game like Go, whose rules do not change over time.


Of course, in the real world, RL algorithms must be able to learn in constantly changing, dynamic environments. Take self-driving cars. Ideally, an RL-based system would receive no explicit instructions on how to drive, since the programmer cannot anticipate everything that happens on the road. But for that to work, the RL algorithm must be able to cope with an environment that keeps changing, something current models have struggled with.

Luo and Wei made a breakthrough in this direction by proposing a black-box approach that turns any stationary RL algorithm into another algorithm capable of optimally learning in a non-stationary environment.

“We detect the environment changes as quickly as possible, while still maintaining a good balance between exploration and exploitation, especially when the system has no prior knowledge of how non-stationary the environment will be,” said Luo, who is recognized for developing more adaptive and efficient machine learning algorithms with strong theoretical guarantees.


“Since the algorithm optimally deals with different degrees of non-stationarity, it outperforms all previous algorithms that either only learn in stationary environments or only allow a certain fixed degree of non-stationarity. This significantly advances the state of the art for RL, and more generally the field of AI, given that RL is often a key component of an AI system.”
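The change-detection machinery in the paper is more involved than anything that fits here. As a much cruder stand-in, the Python sketch below flags a possible change when the average reward over a recent window drifts too far from the average since the last reset. `MeanShiftDetector`, its window size, and its threshold are hypothetical choices for illustration, not the tests from the paper, and the sketch covers only detection, not the exploration side of the problem.

```python
from collections import deque


class MeanShiftDetector:
    """Crude change test (illustrative only): compares the mean of the last
    `window` rewards against the mean of all rewards since the last reset."""

    def __init__(self, window=50, threshold=0.25):
        self.window = window
        self.threshold = threshold
        self.reset()

    def reset(self):
        self.recent = deque(maxlen=self.window)  # keeps only the newest rewards
        self.total = 0.0
        self.count = 0

    def update(self, reward):
        """Record one reward; return True if a change is suspected."""
        self.recent.append(reward)
        self.total += reward
        self.count += 1
        if self.count < 2 * self.window:
            return False  # too little history for a meaningful comparison
        recent_mean = sum(self.recent) / len(self.recent)
        long_run_mean = self.total / self.count
        return abs(recent_mean - long_run_mean) > self.threshold


# Rewards sit at 0.8, then the environment shifts and they drop to 0.2.
rewards = [0.8] * 300 + [0.2] * 300
detector = MeanShiftDetector(window=50, threshold=0.25)
first_alarm = next(i for i, r in enumerate(rewards) if detector.update(r))
print(first_alarm)  # → 324
```

Here the first alarm comes 25 steps after the shift at index 300. A larger window or threshold would react more slowly but raise fewer false alarms on noisy rewards, a small-scale version of the tension between detecting changes quickly and detecting them reliably.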

One important advantage of this method: its simple “black box” approach makes it easy to use and modular.

“In other words, for any RL application, one can simply wrap an existing algorithm in our black box without changing its internal code, and expect the performance of the system to improve, especially when it runs in a non-stationary environment,” said Luo.
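The idea of wrapping an algorithm without touching its internals can be illustrated with a small Python sketch. It assumes, hypothetically, that the existing algorithm exposes only `select_action()` and `update(action, reward)` and can be re-created from a factory function. The wrapper calls nothing else; it simply starts a fresh copy of the learner when a crude reward-shift test fires. `EpsilonGreedy`, `RestartWrapper`, and `run` are illustrative names, and this restart-on-detection scheme is not Luo and Wei's algorithm: it carries none of the optimality guarantees described above.

```python
import random
from collections import deque


class EpsilonGreedy:
    """Stand-in for an existing RL algorithm that assumes a stationary world."""

    def __init__(self, n_actions, epsilon=0.1, seed=0):
        self.rng = random.Random(seed)
        self.epsilon = epsilon
        self.counts = [0] * n_actions
        self.values = [0.0] * n_actions  # running average reward per action

    def select_action(self):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))  # explore
        return max(range(len(self.values)), key=lambda a: self.values[a])  # exploit

    def update(self, action, reward):
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


class RestartWrapper:
    """Hypothetical black-box wrapper: it only calls select_action/update on the
    base learner and swaps in a fresh instance when rewards appear to shift."""

    def __init__(self, make_learner, window=100, threshold=0.2):
        self.make_learner = make_learner
        self.window = window
        self.threshold = threshold
        self.restarts = 0
        self._new_epoch()

    def _new_epoch(self):
        self.learner = self.make_learner()  # fresh copy, no stale estimates
        self.recent = deque(maxlen=self.window)
        self.total, self.count = 0.0, 0

    def select_action(self):
        return self.learner.select_action()

    def update(self, action, reward):
        self.learner.update(action, reward)
        self.recent.append(reward)
        self.total += reward
        self.count += 1
        if self.count >= 2 * self.window:  # wait for enough history
            recent_mean = sum(self.recent) / len(self.recent)
            if abs(recent_mean - self.total / self.count) > self.threshold:
                self.restarts += 1
                self._new_epoch()


def run(agent, steps_per_phase=2000, seed=1):
    """Two-armed Bernoulli bandit whose better arm flips halfway through.
    Returns the reward collected after the flip."""
    rng = random.Random(seed)
    reward_after_flip = 0.0
    for t in range(2 * steps_per_phase):
        means = (0.9, 0.1) if t < steps_per_phase else (0.1, 0.9)
        action = agent.select_action()
        reward = 1.0 if rng.random() < means[action] else 0.0
        agent.update(action, reward)
        if t >= steps_per_phase:
            reward_after_flip += reward
    return reward_after_flip


plain = EpsilonGreedy(2)
wrapped = RestartWrapper(lambda: EpsilonGreedy(2))
print("plain:", run(plain), "wrapped:", run(wrapped), "restarts:", wrapped.restarts)
```

In this toy run the better action flips halfway through. The plain learner keeps favoring the arm that used to be best, while the wrapped copy restarts shortly after the flip and relearns, so it collects far more reward afterward. `EpsilonGreedy` itself is never modified; only the wrapper knows about restarts.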

Published on September 15th, 2021

Last updated on September 15th, 2021