USC AT ICLR 2022

Building on an impressive track record of leading-edge AI research, computer scientists from USC Viterbi’s Department of Computer Science, the Information Sciences Institute and the Ming Hsieh Department of Electrical and Computer Engineering presented 10 papers at the 10th International Conference on Learning Representations (ICLR 2022) April 25-29 2022.

This year, participants from around the world gathered virtually to share their work, from advancing artificial intelligence to data science, machine vision and robotics. In addition to USC’s research contributions, Yan Liu, a USC professor of computer science, electrical and computer engineering, and biomedical sciences, served as senior program chair of this year’s event. The conference reported a total of 3,391 papers submitted and 1,095 accepted.

USC Papers

“Know Your Action Set: Learning Action Relations for Reinforcement Learning” Ayush Jain, Norio Kosaka, Kyung-Min Kim, Joseph J Lim

In this paper, the team aims to build agents that can solve tasks in diverse environments. For example, household robots must flexibly utilize any toolkit, and recommender agents must suggest items from ever-changing inventories. To this end, the researchers’ work addresses the problem of enabling agents to make decisions from different sets of actions, such as selecting a tool or recommending an item.

“For instance, imagine a robot is asked to hang a picture on the wall with certain tools,” said lead author, USC computer science PhD student Ayush Jian. “It could first select and place the nail on the wall and then use a hammer to push it in. However, in the absence of a hammer, the nail is not a useful action. Instead, the agent must adapt its strategy to other tools, like a screw and a drill. This motivates our key insight: an intelligent agent must make decisions based on how its available action choices could relate to each other.”

By knowing the action set required, their approach can solve a tool reasoning task with various toolkits and a real-world recommendation task with different item inventories.

“Skill-based Meta-Reinforcement Learning” Taewook Nam, Shao-Hua Sun, Karl Pertsch, Sung Ju Hwang, Joseph J Lim

Learning efficiency is a key objective when training artificial agents to learn new tasks. “In this paper, we aim to develop agents that can acquire new behaviors with as few practice trials as possible,” said study co-author Karl Pertsch, a USC computer science PhD student. Recently, a line of research called meta-reinforcement learning made considerable progress towards this end by “learning how to learn,” or practicing the process of mastering a new task itself to speed up learning on a later task.

This earlier practicing phase is known as the meta-training phase. “While meta-training has produced agents that can learn new tasks with only a handful of trials, those agents have so far been limited to very simple, short-horizon tasks like picking up blocks or inserting plugs into sockets,” said Pertsch. A key problem: these prior approaches start meta-training from scratch without any prior knowledge and struggle to learn any more complex tasks.

In contrast, humans can rely on a rich repertoire of prior skills, which they can quickly recombine to solve new tasks. In this work, the researchers borrow from this intuition by extracting a large set of skills from offline agent experience collected across many prior tasks. They then adapt previous meta-reinforcement learning approaches to leverage this skillset during meta-training, enabling them to learn substantially more complex tasks while retaining the learning speed of prior work.

For example, in their experiments, the researchers show that they can train a simulated robotic arm to solve long-horizon kitchen manipulation tasks, such as sequentially opening a cupboard, placing a kettle on the stove, and then turning on the stove within only 20 practice trials.

A simulated robotic arm trained to solve long-horizon kitchen manipulation tasks.

“Mention Memory: incorporating textual knowledge into Transformers through entity mention attention” Michiel de Jong, Yury Zemlyanskiy, Nicholas FitzGerald, Fei Sha, William W. Cohen

Natural language understanding tasks such as open-domain question answering (QA) often require retrieving and assimilating factual information from multiple sources. “Machine learning models often need a lot of outside knowledge to perform language tasks like question answering,” said Michiel de Jong, a computer science PhD student. “Mention Memory compresses information in Wikipedia into a memory of entity mentions, which are cheap to access for a Transformer language model. Using this memory, a Transformer model can retrieve many pieces of information at the same time and reason over them jointly, which is too expensive for existing methods.”

One potential application: asking complicated questions that require combining information or multiple steps of reasoning in a search engine or to an assistant. For instance, said de Jong, answering the question “What role is the Commissioner from Batman best known for?”

“Possibility Before Utility: Learning And Using Hierarchical Affordances” Robby Costales, Shariq Iqbal, Fei Sha

In this work, the researchers endow artificial agents with an ability to explore their high-level action spaces more effectively by leveraging a learned model of hierarchical affordances, representing which behaviors are currently possible in the environment.

“We demonstrate that our method can more effectively solve tasks with complex hierarchical dependency structures and is robust to affordance-affecting stochasticity and the amount of symbolic information provided,” said lead author Robby Costales, a USC computer science PhD student. “We view this work as a first step towards bridging the substantial gap between logic-based algorithms that are too rigid for practical use, and more flexible approaches that are intractable in complex environments.”

“Towards a Unified View of Parameter-Efficient Transfer Learning” Junxian He, Chunting Zhou, Xuezhe Ma, Taylor Berg-Kirkpatrick, Graham Neubig

Fine-tuning large pre-trained language models on downstream tasks has become the de-facto learning paradigm in NLP. However, conventional approaches fine-tune all the parameters of the pre-trained model, which becomes prohibitive as the model size and the number of tasks grow. Recent work has proposed a variety of parameter-efficient transfer learning methods that only fine-tune a small number of (extra) parameters to attain strong performance.

While effective, the critical ingredients for success and the connections among the various methods are poorly understood. In this paper, the authors break down the design of state-of-the-art parameter-efficient transfer learning methods and present a unified framework that establishes connections between them. Specifically, the researchers re-frame them as modifications to specific hidden states in pre-trained models, and define a set of design dimensions along which different methods vary, such as the function to compute the modification and the position to apply the modification.

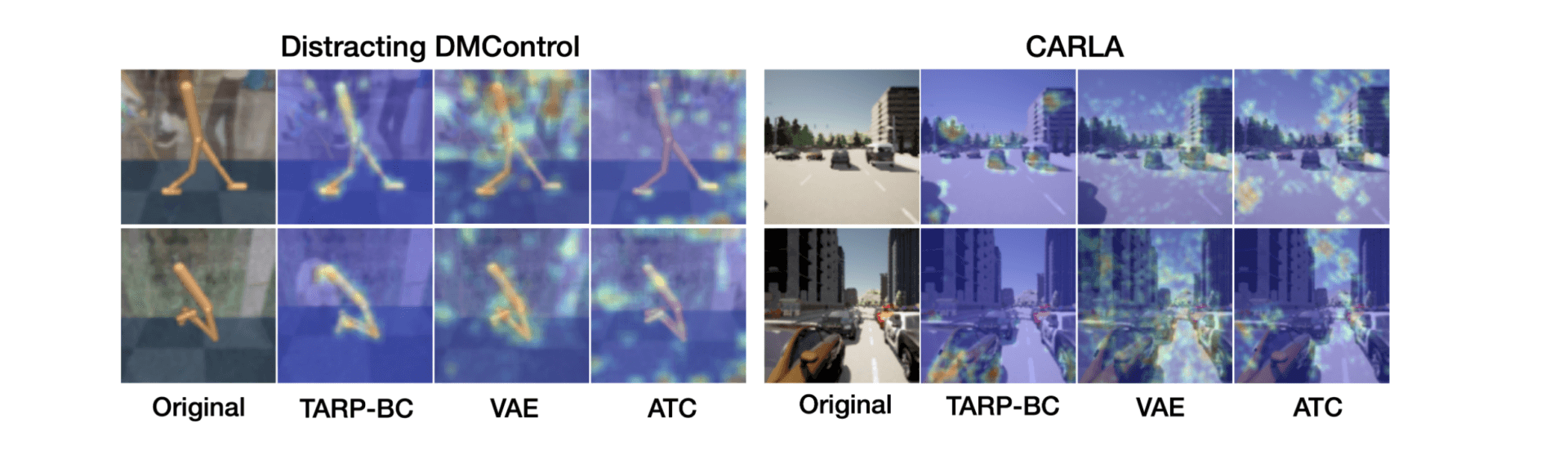

“Task-Induced Representation Learning” Jun Yamada, Karl Pertsch, Anisha Gunjal, Joseph J Lim

To efficiently train artificial agents to perform tasks in everyday life, they must learn good representations of the environment. “For example, imagine an autonomous car learning how to navigate an intersection,” said Karl Pertsch. “If, instead of a pile of pixels, the car can learn to parse its inputs into a more meaningful representation of the environment—recognize cars and their velocities, for instance—the learning task will become easier.”

But there is a key challenge when learning representations in realistic environments: the agent needs to learn which part of the scene to focus on and which to ignore. “For example, in the driving scenario, it would be impossible to represent all information in the scene, so the agent needs to learn to ignore distractors like tree branches moving in the wind, or the details of building facades on the roadside,” said Pertsch.

The core idea of this work is to leverage experience from prior tasks to learn which parts of the scene are relevant. For example, their agent can practice various driving tasks—like left and right turns—to understand the importance of modeling other cars versus the irrelevance of tree branches. Then it can reuse that representation when learning more complicated, long-horizon driving tasks. In their experiments, the team tests the approach in multiple, visually complex environments, including a realistic driving simulator. They show that the ability to represent only the important parts of the scene enables their agent to learn new tasks much more efficiently than in prior works.

“Non-Linear Operator Approximations for Initial Value Problems” Gaurav Gupta, Xiongye Xiao, Radu Balan, Paul Bogdan

Time-evolution of partial differential equations is the key to modeling several dynamical processes, and events forecasting but the operators associated with such problems are non-linear. In this paper, the researchers propose a Padé approximation-based exponential neural operator scheme for efficiently learning the map between a given initial condition and activities at a later time. The multiwavelets bases are used for space discretization. By explicitly embedding the exponential operators in the model, they reduce the training parameters and make it more data-efficient which is essential in dealing with scarce real-world datasets. The Padé exponential operator uses a recurrent structure with shared parameters to model the non-linearity compared to recent neural operators that rely on using multiple linear operator layers in succession.

“Pareto Policy Adaptation” Panagiotis Kyriakis, Jyotirmoy Deshmukh, Paul Bogdan

The researchers present a policy gradient method for Multi-Objective Reinforcement Learning under unknown, linear preferences. By enforcing Pareto stationarity, a first-order condition for Pareto optimality, they are able to design a simple policy gradient algorithm that approximates the Pareto front and infers the unknown preferences. Their method relies on a projected gradient descent solver that identifies common ascent directions for all objectives. Leveraging the solution of that solver, they introduce Pareto Policy Adaptation (PPA), a loss function that adapts the policy to be optimal with respect to any distribution over preferences.

“Contextualized Scene Imagination for Generative Commonsense Reasoning” PeiFeng Wang, Jonathan Zamora, Junfeng Liu, Filip Ilievski, Muhao Chen, Xiang Ren

Humans use natural language to compose common concepts from their environment into plausible, day-to-day scene descriptions. However, such generative commonsense reasoning (GCSR) skills are lacking in state-of-the-art text generation methods. In this work, the researchers pull a variety of image and text resources to build an “imagination” module that helps generate an abstractive description of daily life scenes.

Descriptive sentences about arbitrary concepts generated by neural text generation models are often grammatically fluent but may not correspond to human common sense, largely due to their lack of mechanisms to capture concept relations, identify implicit concepts, and perform generalizable reasoning about unseen concept compositions. As such, the researchers propose an Imagine-and-Verbalize (I\&V) method, which learns to imagine a relational scene knowledge graph (SKG) with relations between the input concepts, and leverages the SKG as a constraint when generating a plausible scene description.

“Improving Mutual Information Estimation with Annealed and Energy-Based Bounds” Rob Brekelmans, Sicong Huang, Marzyeh Ghassemi, Greg Ver Steeg, Roger Baker Grosse, Alireza Makhzani

Mutual information (MI) is a fundamental quantity in information theory and machine learning. However, direct estimation of mutual information is intractable, even if the true joint probability density for the variables of interest is known, as it involves estimating a potentially high-dimensional log partition function. In this work, the researchers view mutual information estimation from the perspective of importance sampling.

Since naive importance sampling with the marginal distribution as a proposal requires exponential sample complexity in the true mutual information, the authors outline several improved proposals which assume additional density information is available. In settings where the full joint distribution is available, they propose Multi-Sample Annealed Importance Sampling (AIS) bounds on mutual information, which they demonstrate can tightly estimate large values of MI in their experiments.

Published on April 29th, 2022

Last updated on May 24th, 2022