“Both Chloe and I had autistic friends in high school, and we thought that creating a product that could interpret emotions as vibrations could really help them,” Shannon Brownlee says. (Illustration/Weitong Mai)

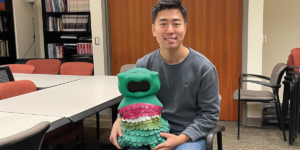

Shannon Brownlee ’22 is excited.

It’s apparent in the computer science and quantitative biology double major’s voice as she talks about Valence Vibrations, the startup she co-founded.

But detecting emotions during conversation isn’t a skill that comes easily to everyone — particularly autistic and other neurodivergent people. That’s where Valence Vibrations comes in.

The neurotech company has developed an application that translates vocal pitch patterns into discrete vibrations on a haptic wristband. Those vibrations can help the wearer recognize, in real time, the emotions of the person speaking by eliminating the sensory confusion of deciphering vocal pitch, spoken context, facial expression and body posture all at once.

“My hope is that this device helps people be more comfortable in daily life and allows them to foster social relationships that can, for many people, be difficult,” says Brownlee, who co-founded Valence with Chloe Duckworth ’22, a computational neuroscience major at the USC Dornsife College of Letters, Arts and Sciences. They met as first-year students living in dorms across from each other.

Read the full story in the Trojan Family Magazine.

Published on September 21st, 2022

Last updated on September 28th, 2022