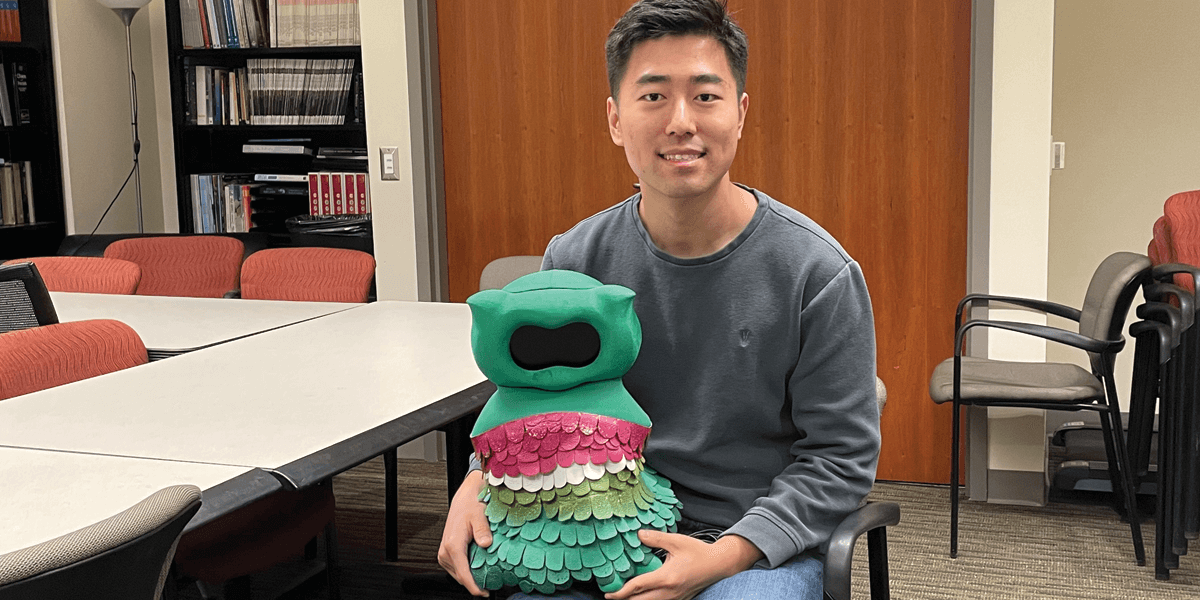

Lead author Zhonghao Shi, a computer science Ph.D. student, and Kiwi, a robot tutor that is used for working with children with autism spectrum disorder. Photo/Anna Hsu.

Around 1.5 million children in the U.S. have autism spectrum disorder (ASD), a neurological and developmental disorder that affects how people communicate, learn and behave. While there is no known single cause or cure for ASD, research suggests that socially assistive robots (SAR) can help children with autism develop and retain new skills.

This is most effective if the robot can react to each child’s individual behavior. But that is challenging for most existing robotic systems, especially when working with people with ASD, whose symptoms and severity can vary across individuals and even for a given person over time.

In a first-of-its-kind study published in ACM Transactions on Human-Robot Interaction, USC researchers have analyzed and modeled the cognitive-affective states of children with autism as they interact with socially assistive robots in the home. The goal: to design robot tutors that are more sensitive to the emotions and behaviors of their users.

Providing more tailored feedback

Cognitive-affective states—including emotions, attention, memory and reasoning—are processes that occur in the mind that make people feel a certain way or help them make decisions.

The researchers developed AI models to recognize different states—such as confusion, frustration or excitement—in specific children, providing more personalized feedback tailored towards the child’s individual needs during the learning process.

“With this capability, if we recognize the child is confused, we may provide a hint or lower the difficulty level of the learning material,” said lead author Zhonghao Shi, a computer science Ph.D. student advised by Professor Maja Matarić in USC’s Interaction Lab.

“However, if the child is frustrated, more encouragement or a break may be more beneficial in this setting. The capability of recognizing different states accurately allows us to provide more tailored feedback.”
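The feedback rule Shi describes can be sketched as a small lookup from a recognized state to a tutoring action. This is only an illustration of the idea in the quotes above, not the robot's actual controller: the `State` enum, the action names, the `hint_available` flag and the integer difficulty scale are all assumptions made for this example.

```python
from enum import Enum
from typing import Tuple


class State(Enum):
    # Hypothetical labels for the states named in the article.
    CONFUSED = "confused"
    FRUSTRATED = "frustrated"
    EXCITED = "excited"


def choose_feedback(state: State, difficulty: int,
                    hint_available: bool = True) -> Tuple[str, int]:
    """Return (action, new_difficulty) for a recognized state.

    Assumes difficulty is an integer level with a minimum of 1.
    """
    if state is State.CONFUSED:
        # Confusion: offer a hint, or lower the difficulty if no hint is left.
        if hint_available:
            return "give_hint", difficulty
        return "lower_difficulty", max(1, difficulty - 1)
    if state is State.FRUSTRATED:
        # Frustration: encouragement or a break, difficulty unchanged.
        return "encourage_or_break", difficulty
    # Any other state: keep going with the current material.
    return "continue", difficulty
```

The point of the sketch is that the two negative states lead to different actions, which is why telling them apart accurately matters.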

The model could help researchers design better socially assistive robots and identify factors that contribute to successful social interactions and learning experiences between robots and children with diverse needs.

“I feel like the current technology is failing people with ASD,” said Shi. “We want to design more capable models to help children with autism get the support they need.”


Describing emotions

The researchers used data from a 2017 USC study of socially assistive robots placed in the homes of 17 children with autism for 30 days. The study found that by the end of the month, all the children had improved their math skills, while 92% had also improved their social skills.

In addition, the robot autonomously detected the child’s engagement (the extent to which a person is interested or involved in an activity or interaction) with 90% accuracy.

In analyzing the data, the researchers noticed something else: over time, the children would pay less attention to the robot and take longer to respond. In other words, the therapy became less useful the longer the robot was in the home.

“Usually, this kind of study is conducted in a lab, so the kids pay extra attention,” said Shi. “But when the robot is in the home, and kids are playing with it for a long period of time, they start to feel like it’s not novel anymore.”

What’s more, the robot was unable to reengage the children because it lacked the technical capabilities to detect when a child was bored, confused or frustrated.

To address this issue, the research team used the video captured by the in-home robots to annotate arousal and valence—two dimensions often used to describe an emotion—as the children interacted with the robots.

Arousal refers to the excitement a person is experiencing, while valence refers to the positivity or negativity of the emotional state. Many emotions, from joy to anger to fear, can be described in terms of arousal and valence, helping researchers better understand the quality of the child’s engagement with the robot.
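To make the two dimensions concrete, here is a minimal sketch that maps a (valence, arousal) pair to a coarse quadrant label. It assumes both values are scaled to the range -1 to 1 and split at zero. The scale, the threshold and the four labels are illustrative choices for this example, not the annotation scheme used in the study.

```python
def quadrant(valence: float, arousal: float) -> str:
    """Map a (valence, arousal) pair to a coarse, illustrative label.

    Assumes both values lie in [-1, 1]; the labels are hypothetical.
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must be in [-1, 1]")
    if arousal >= 0:
        # High arousal: positive valence reads as excitement,
        # negative valence as frustration.
        return "excited" if valence >= 0 else "frustrated"
    # Low arousal: positive valence reads as calm, negative as boredom.
    return "calm" if valence >= 0 else "bored"
```

Under these assumptions, an engaged and excited child would fall in the high-arousal, positive-valence quadrant, while a child who is losing interest would drift toward low arousal and negative valence.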

The goal was to imbue future iterations of the robot with the ability to perceive whether a child is engaged and excited, or frustrated and losing interest, so it can provide more helpful feedback.

Personalized models

According to Shi, researchers would typically design a generic machine learning model to recognize arousal or valence. But children convey emotions in unique ways, especially children with autism.

For instance, people with autism often experience alexithymia, a difficulty in identifying and expressing emotions. Shi realized that a generic model would not be enough to ensure that every child became re-engaged.

“It’s very possible that a generic model will not work well on all or even most children,” said Shi. “We should be able to design a personalized model for the specific child’s emotions, like cognitive and affective behaviors.”

In this case, the children need to be fully engaged so this method of therapy can successfully improve their reasoning and retention skills. Shi tackled this by training the model on data from both the child in question and other children in the study.

“Every time we finished testing with a child, we move the model to training. Then we train the model with both data from other children and data from the test child,” Shi said.

The personalized model combines the benefits of an individual model with the sheer amount of data from every other participant.

However, Shi needed to strike a balance in how much weight was placed on each of the two data sets. Models work well on large sets of data, and overreliance on one child’s data takes away the benefit of training the model on many children.

So, he applied a machine learning technique called supervised domain adaptation to better trade off between data from other children and data from a specific child.

“For some children, it is better to focus on the personal part of the data because their behaviors are unique, but for other children we should focus more on the generic parts,” said Shi. “If we focused on the personal part too much, it would be overfitting to the personal data.”
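The trade-off Shi describes can be illustrated with instance weighting, one simple baseline in the supervised domain adaptation family. The article does not say which formulation the study used, so the sketch below is an assumption throughout: a one-feature logistic regression in plain Python, a hypothetical `personal_weight` between 0 and 1 that splits the training loss between the target child's data and the other children's data, and a helper that picks the weight using held-out sessions from the target child.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, int]  # (feature value, label in {0, 1})


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_weighted(personal: Sequence[Sample], others: Sequence[Sample],
                   personal_weight: float, epochs: int = 500,
                   lr: float = 0.5) -> Tuple[float, float]:
    """Fit 1-D logistic regression on both data sets.

    The personal set carries personal_weight of the total loss and the
    other children's set carries the rest, regardless of set sizes.
    """
    if not personal or not others:
        raise ValueError("both data sets must be non-empty")
    data: List[Tuple[float, int, float]] = (
        [(x, y, personal_weight / len(personal)) for x, y in personal]
        + [(x, y, (1.0 - personal_weight) / len(others)) for x, y in others]
    )
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y, s in data:
            err = sigmoid(w * x + b) - y  # gradient of the log loss
            grad_w += s * err * x
            grad_b += s * err
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def accuracy(model: Tuple[float, float], samples: Sequence[Sample]) -> float:
    w, b = model
    hits = sum((sigmoid(w * x + b) >= 0.5) == bool(y) for x, y in samples)
    return hits / len(samples)


def pick_personal_weight(personal_train: Sequence[Sample],
                         personal_val: Sequence[Sample],
                         others: Sequence[Sample],
                         candidates: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 1.0)
                         ) -> float:
    """Choose the weight that scores best on held-out data from the child."""
    return max(candidates, key=lambda c: accuracy(
        train_weighted(personal_train, others, c), personal_val))
```

For a child whose behavior differs sharply from the group, the selected weight tends toward the personal data; for a child who resembles the group, a lower weight keeps the benefit of the larger pooled data set and guards against overfitting to a handful of personal sessions.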

Bringing humans and technology together

Through his research, Shi found that in most of the test sessions, the children achieved greater cognitive and emotional learning gains with the personalized model than with the generic model.

In addition to improving SAR, in the future, Shi wants to design even more capable models that can help therapists working with children with autism correct unwanted behaviors such as echolalia, or repetition of speech.

Currently, it’s difficult for a therapist to note every unwanted behavior during a session, since most of their direct attention is focused on the child.

“Instead of human annotation, the model could have drafted an annotation, and then the therapist can quickly review it after the session,” Shi said. “It could even be a feedback report, not only to the therapist, but also the parents, so they also know what’s happening in therapy. That would make it easier for them to keep track of those behaviors at home.”

Shi’s vision comes down to a single goal: to bring humans and technology together for a good cause.

“At heart, I always wanted to work on technology to help people. I’m fascinated by the technical side, but I’m even more fascinated by the human side,” Shi said. “The Interaction Lab is perfect because instead of only focusing on how to improve models, we want to design robots to help not only children with autism, but all different kinds of populations, like older adults, infants, and college students with ADHD.”

Published on February 13th, 2023

Last updated on February 13th, 2023