The USC Viterbi Robotics Open House is back!

After a four-year hiatus due to the pandemic, the open house returned on April 14. Katherine Guevara, the assistant director of the K-12 STEM Center, welcomed 180 middle and high school students back to USC to see the robots that USC Viterbi research labs are developing.

Students from five local Los Angeles schools visited six labs at the USC Viterbi School of Engineering. The labs showcased a wide range of robots, from companions designed to help with physical therapy exercises to robotic dogs that carry supplies.

Alpha 1 Pro, a humanoid robot: “Show off your moves”

Upon arrival, students were welcomed by an odd community of robots with varying types of mobility and skills. These robots belong to the K-12 STEM Center and are used in its programs. Students danced along with Alpha 1 Pro, a humanoid robot that had been programmed to boogie.

PLEO rb dinosaur: “Eating up attention”

The PLEO rb, an autonomous robotic life form, has a sophisticated sensory system that allows it to respond when students interact with it.

Dynamic Robotics and Control Lab: “Man’s new best friend”

Students experienced firsthand the robust control systems the Dynamic Robotics and Control Lab has developed for its A1 robots.

At the Dynamic Robotics and Control Lab, students got to experience firsthand what locomotion control of legged robots looks and feels like as they took over the remote controls for the lab’s A1 robots.

The A1 robots are curious-looking creatures that, as many students pointed out, resemble robotic dogs. The futuristic four-legged robots came in a small and a large size and delighted the students as they stomped loudly around the room.

The lab develops novel control algorithms that expand on the limited movements the robots are built with, making them more agile and dynamic. When handed the remotes, students quickly realized that the robots’ limbs could move in almost any direction, because each of the four legs has three independent motors. The robots could run forward and backward, bend at multiple joints, and twist their limbs in rotational, side-to-side movements, skittering around the room as students used them to terrorize their classmates. The goal is for the A1 robots to become man’s new best friend by serving as load-carrying companions to humans: the larger robot can carry approximately 10 kg and the smaller “puppy” around 5 kg.

ICAROS Lab: “Do you need a hand with that?”

This robot is always one step ahead, predicting what the user will need next based on how they behave as they build.

The ICAROS Lab is all about human-robot interaction and how robots can help humans with tasks. Students watched as a robotic arm assisted Heramb Nemlekar, a fourth-year USC Viterbi Ph.D. student, in building a model airplane, picking up tools and parts and handing them to him as he constructed the model.

However, the robot was doing more than just handing tools to Nemlekar in a pre-programmed order. It was predicting his next step based on how he had built a previous project. The robot knew that Nemlekar was a “lazy” builder who did not necessarily assemble things in the most efficient order, but in whatever order was most convenient for him. Watching through a bird’s-eye camera, the robot recognized when a task was completed and predicted which component or tool was needed next, learning to work with the builder as they went and adapting its own process.

Students were able to operate the arm with a joystick to understand how its six rotating joints allow for 360-degree movement, so that it can pick up objects in any orientation and present them to the student. One student asked whether these robots could be adapted to help people with disabilities perform everyday tasks. Nemlekar pointed behind her to a similar robot holding a comb. That robot was designed to help people with neuromuscular disorders brush their hair. She had hit the nail on the head!

Interaction Lab: “Daily mindfulness”

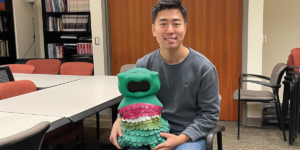

Students were led through a meditation exercise by this hoppy robot.

The robots of Maja Matarić’s Interaction Lab are all designed to help meet people’s social needs. Students practiced a mindful breathing exercise with Blossom, a “bunny-like” robot with a soft, handcrafted wool exterior, whose soothing voice brings meditation and moments of calm to the home.

Lab members explained that the goal is to augment the work of therapists through daily activities, and that a physical robot, as opposed to a mindfulness app, provides a presence in the room that makes the experience more meaningful. Social human-robot interaction depends on closed feedback loops, in which the robot interprets a human’s action and responds in a way that prompts another reaction. The lab developed software to support this loop: it detects the person’s face and uses computer vision to interpret their emotions, allowing the robot to respond in an informed way.

The star of the show, however, was a robot named QT, which was designed to help patients with cerebral palsy practice physical therapy movements that involve turning their wrists. QT played a number game with students, trying to guess a number between 1 and 10 that they had chosen. The robot asked yes-or-no questions about the number, and students answered with a thumbs-up or thumbs-down; that rotation of the wrist was the movement the interaction was designed to exercise. On a separate screen, students could see how the software tracked the knuckles of their hand to determine the orientation of their thumb, and the system encouraged them and congratulated them whenever they got the orientation right.

GLAMOR Lab: “Turn right at the chair”

High-level tasks are broken down into steps that require less data to inform this robot’s movements.

The research of the Grounding Language in Actions, Multimodal Observations and Robots (GLAMOR) Lab focuses on natural language processing for robots, helping them process words to understand their associations and meanings.

Here, students watched a small, Roomba-like vehicle drive itself based on written instructions telling it to turn right when it encountered a chair. The language-mapped trajectories the lab develops break high-level instructions down into step-by-step processes that require far less data to inform the robot’s actions.

One student wondered how the moving robot knew where the chair was. A student researcher explained that the robot uses its camera and the pixels it captures to determine where objects are. If the robot hesitated while driving, students could take control and steer it to the right, giving it a hint about what the written instruction was asking it to do. The lab’s high-level motivation is to connect language to robot perception and action: lifelong learning through interaction. One application is creating robots that are better equipped to assist people with disabilities and their caregivers.

Published on April 21st, 2023

Last updated on April 21st, 2023