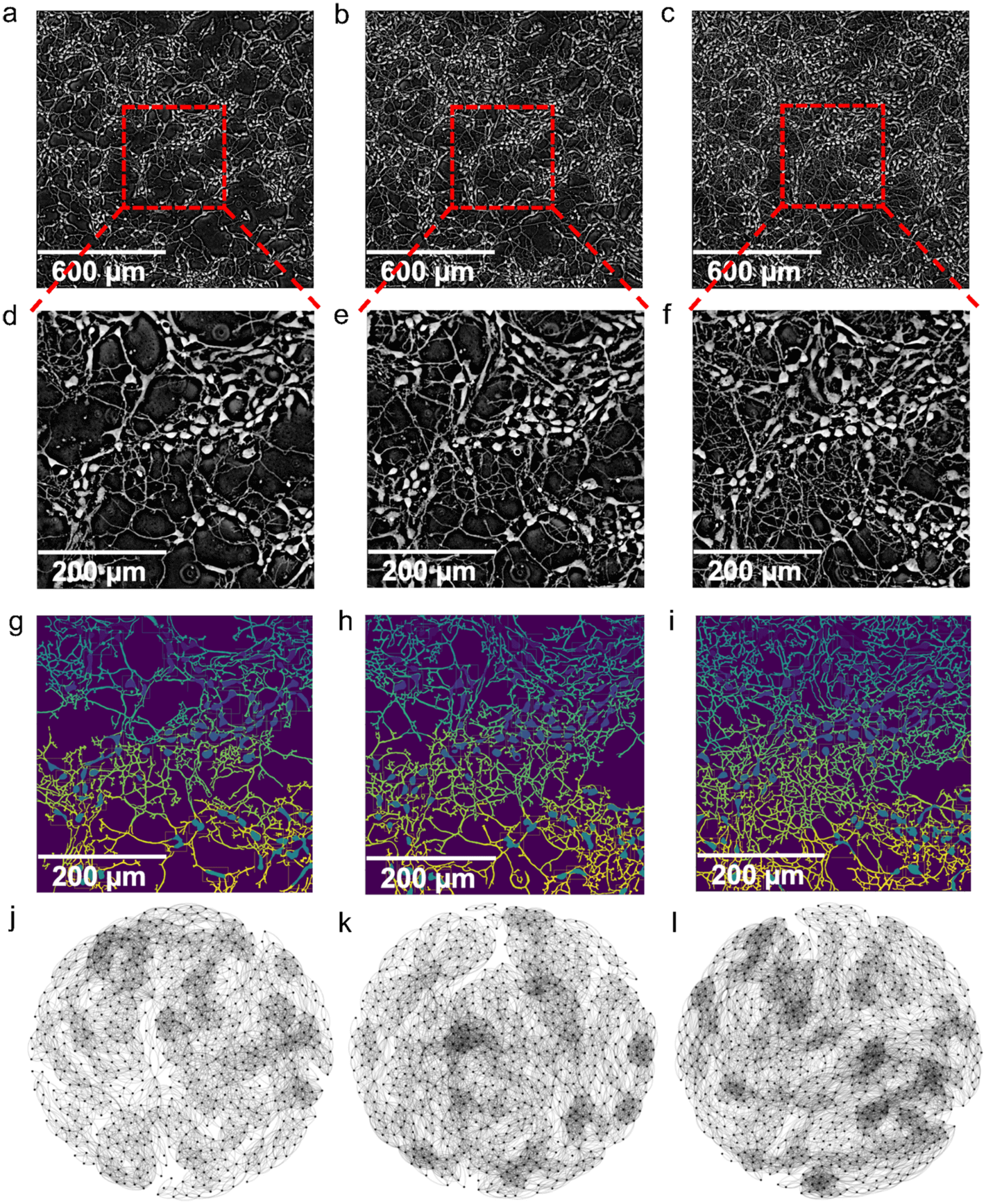

Layouts for neuronal culture networks at three representative time points. Courtesy of Cyber Physical Systems Group

For decades, artificial intelligence (AI) researchers have turned to the brain for inspiration, but the mechanisms by which neurons create or suppress connections, such as those responsible for memory, imagination and planning, and how these influence learning, cognition and creative behavior, remain elusive.

USC Viterbi Professor Paul Bogdan and his Cyber Physical Systems Group, together with collaborators at the University of Illinois at Urbana-Champaign, have brought us one step closer to unlocking these mysteries. Their findings, published in Scientific Reports, help answer some fundamental questions about how information deep in the brain flows from one network to another and how these network clusters self-optimize over time.

In their paper “Network Science Characteristics of Brain-Derived Neuronal Cultures Deciphered From Quantitative Phase Imaging Data,” Bogdan and his team examined the structure and evolution of neuronal networks cultured from the brains of mice and rats. It is the first study to observe this self-optimization phenomenon in in vitro neuronal networks.

“We observed that the brain’s networks have an extraordinary capacity to minimize latency, maximize throughput and maximize robustness,” said Bogdan, who holds the Jack Munushian Early Career Chair at the Ming Hsieh Department of Electrical Engineering. “This means that neural networks negotiate with each other and connect to each other in a way that rapidly enhances network performance.”

To Bogdan’s surprise, none of the classical mathematical models employed in neuroscience could accurately replicate this phenomenon. The research team was able to model and analyze it with high accuracy by combining multifractal analysis with a novel imaging technique called quantitative phase imaging (QPI), developed by study co-author Gabriel Popescu, a professor of electrical and computer engineering at the University of Illinois at Urbana-Champaign.

“Having this level of accuracy can give us a clearer picture of the inner workings of biological brains and how we can potentially replicate those in artificial brains,” Bogdan said.

As humans, we have the ability to learn new tasks without forgetting old ones. Artificial neural networks, however, suffer from what is known as catastrophic forgetting. We see this when we try to teach a robot two successive tasks, such as climbing stairs and then turning off the light.

The robot may overwrite the configuration that allowed it to climb the stairs as it shifts toward the optimal state for performing the second task, turning off the light. This happens because deep learning systems rely on massive amounts of training data to master the simplest of tasks.

If we could replicate how the biological brain enables continual learning, or our cognitive ability for inductive inference, Bogdan believes, we would be able to teach AI multiple tasks without an increase in network capacity.

Beyond teaching AI new tricks, the findings of this research could have an immediate impact on the early detection of brain tumors, according to co-author Chenzhong Yin, a Ph.D. student in Bogdan’s Cyber Physical Systems Group. Yin, along with fellow Ph.D. students Xiongye Xiao and Valeriu Balaban, developed the algorithm and code that allowed the team to perform its analysis.

“Cancer spreads in small groups of cells and cannot be detected by fMRI or other scanning techniques until it’s too late,” said Yin. “But with this method we can train AI to detect and even predict diseases early by monitoring and discovering abnormal interactions between neurons.”

To achieve this, the researchers are now seeking to perfect their algorithms and imaging tools so they can monitor these complex neuronal networks inside a living brain.

“By placing an imaging device on the brain of a living animal, we can also monitor and observe things like neural networks growing and shrinking, how memory and cognition form, if a drug is effective and ultimately how learning happens. We can then begin to design better artificial neural networks that, like the brain, would have the ability to self-optimize.”

The research was co-authored by Chenzhong Yin, Xiongye Xiao, Valeriu Balaban, Mikhail E. Kandel, Young Jae Lee, Gabriel Popescu and Paul Bogdan. It was supported by the National Science Foundation (NSF) and the Defense Advanced Research Projects Agency (DARPA).

Published on September 28th, 2020

Last updated on May 16th, 2024