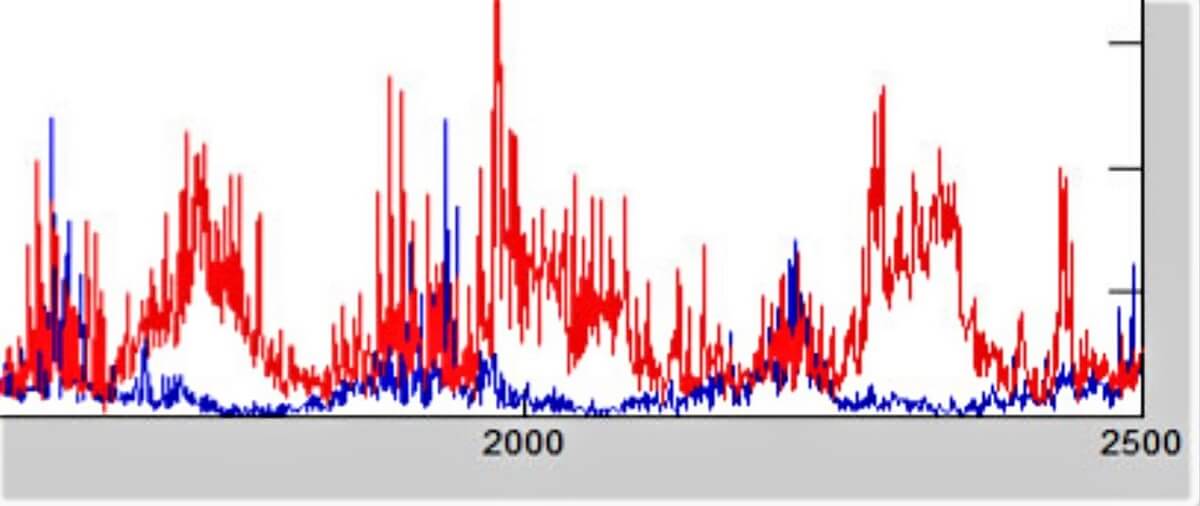

A weather sensor graph

Craig Knoblock is best known for large-scale research: semantic alignment of data in Karma, a widely used open-source tool; geospatial mapping techniques used by the Army in Iraq; collaboration on a commercial web data extraction platform; and recent, groundbreaking progress with Pedro Szekely in combating international human trafficking.

In March this year, however, Knoblock presented work that appears to operate on a much smaller scale: weather and water sensors.

It only appears that way, because, as Knoblock made clear, improving the resilience and scope of sensor networks builds directly on his previous work. Knoblock and his team sought to recreate sensor data after a unit fails or is swapped out for a more capable version. The challenge lies in inferring the missing data from information elsewhere in the network. The solution: building semantic descriptions of the underlying data, an approach similar to those Knoblock has used for geospatial mapping and human trafficking.

About 35 people in Marina del Rey, California, and Arlington, Virginia, attended the talk, part of ISI’s “What’s Going On” series. The series keeps researchers informed about work underway in other Institute divisions and locations and fosters collaboration among them. Previous speakers have included Kristina Lerman on computational analysis of social media data, Jelena Mirkovic on life-experience passwords, Greg Ver Steeg on unsupervised learning and Andrew Schmidt on heterogeneous computing.

Knoblock’s project, funded by the Defense Advanced Research Projects Agency, seeks to build survivable software that can interact reliably with sensors, particularly those for underwater vehicles. At present, replacing one sensor with another risks receiving similar data in a different format, altering the underlying database structure or failing to exploit new types of information generated by the newer model. Incoming data follows no standard format, and the software doesn’t adapt automatically, so it must be reconfigured manually.

Craig Knoblock

What’s more, sensors are swapped out while they’re offline, so the old and new units may produce no overlapping data. Changing software to match manufacturers’ specifications for new sensors is a time-consuming, costly process that often still fails to reconstruct signals accurately. Numeric readings look very similar across sensor types, so working out what each value represents from the numbers alone is difficult. For instance, humidity and the chance of precipitation are both reported on a zero-to-100 scale.

A much more promising approach was to treat the data as a mapping problem. Large volumes of data could be handled by building semantic descriptions of each sensor’s output, aligning those descriptions across sensors, then reconstructing missing data from other nearby sensors. It’s somewhat akin to a neighborhood whodunit, solved as residents share their recollections of who saw what to reconstruct the crime.

Because Knoblock didn’t have access to actual underwater data, the team used weather as a proxy for underwater conditions, learning the relationships between factors such as dew point and humidity. Data came from about 50 weather stations provided by Weather Underground, a network of enthusiasts who stream information from personal equipment worldwide. To simulate a failed or replaced sensor at a weather station, the team substituted data from a sensor of the same type at a nearby station.

Missing signals were reconstructed from the data of other available sensors, such as temperature, dew point, humidity, wind speed and wind gusts. A machine learning algorithm trained on those sensors’ readings enabled the team to recreate the missing sensor’s signal, even though there was no overlap between the previous sensor or weather station and the new one.
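The article does not say which machine learning algorithm the team used. As a rough illustration only, the sketch below swaps in ordinary least-squares regression: it learns weights that map the working sensors’ readings to the missing sensor’s readings at a station where every sensor reports, then applies those weights at a station where that sensor has dropped out. The function names `fit_reconstructor` and `reconstruct`, the three generic input sensors and all of the data are hypothetical; the numbers are synthetic, not Weather Underground readings.

```python
import numpy as np

def fit_reconstructor(other_sensors: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Learn weights mapping the remaining sensors' readings to the missing sensor.

    other_sensors: shape (n_samples, n_sensors), e.g. temperature, dew point, wind.
    target: shape (n_samples,), readings of the sensor we later need to rebuild.
    """
    # Add a constant column so the linear model can learn an offset.
    X = np.column_stack([other_sensors, np.ones(len(other_sensors))])
    weights, *_ = np.linalg.lstsq(X, target, rcond=None)
    return weights

def reconstruct(other_sensors: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Estimate the missing sensor's signal from the sensors that still work."""
    X = np.column_stack([other_sensors, np.ones(len(other_sensors))])
    return X @ weights

# Synthetic stand-in data (NOT real weather readings); the linear relationship is made up.
rng = np.random.default_rng(0)

def make_station(n):
    others = rng.uniform(0, 30, size=(n, 3))  # three hypothetical working sensors
    missing = 1.5 * others[:, 0] - 0.8 * others[:, 1] + 0.2 * others[:, 2] + 40
    missing += rng.normal(0, 0.5, size=n)      # small measurement noise
    return others, missing

# Train on a neighboring station where every sensor is still reporting...
train_X, train_y = make_station(500)
w = fit_reconstructor(train_X, train_y)

# ...then rebuild the signal at a station whose sensor has dropped out.
test_X, true_y = make_station(200)
estimate = reconstruct(test_X, w)
mae = np.mean(np.abs(estimate - true_y))
print(f"mean absolute error: {mae:.2f}")
```

A linear model is the simplest possible choice. Real weather variables (relative humidity versus temperature and dew point, for instance) are related nonlinearly, so a practical system would likely need a more flexible learner.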

Semantic labeling techniques were used to narrow the search, which streamlined processing. To reduce the impact of transient factors such as wind gusts, reconstructed signals were matched against historical patterns. Preliminary results from the first of three 16-month phases showed accuracy ranging from 85 to 100 percent for temperature and other factors, along with dramatic gains over the baseline.
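Earlier, the article notes that value ranges alone cannot tell humidity from the chance of precipitation. One simple way to label an unidentified numeric column, assumed here for illustration and not necessarily the method ISI used, is to compare its value distribution against labeled reference columns with the two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical cumulative distribution functions. The labels, the helpers `ks_statistic` and `label_column`, and the synthetic distributions are all hypothetical.

```python
import numpy as np

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = np.sort(a), np.sort(b)
    grid = np.concatenate([a, b])  # the sup is attained at one of the sample points
    cdf_a = np.searchsorted(a, grid, side="right") / len(a)
    cdf_b = np.searchsorted(b, grid, side="right") / len(b)
    return float(np.max(np.abs(cdf_a - cdf_b)))

def label_column(unknown, references):
    """Return the reference label whose value distribution is closest, plus all scores."""
    scores = {label: ks_statistic(unknown, values) for label, values in references.items()}
    return min(scores, key=scores.get), scores

# Synthetic reference columns; both stay within the same zero-to-100 scale.
rng = np.random.default_rng(1)
references = {
    "humidity": np.clip(rng.normal(65, 15, 1000), 0, 100),
    # Rounded to the nearest 10, mostly low values, as a made-up forecast-style column.
    "precip_chance": np.clip(np.round(rng.exponential(15, 1000), -1), 0, 100),
}

# A column from a "new sensor" that arrives without a label.
unknown = np.clip(rng.normal(65, 15, 300), 0, 100)

label, scores = label_column(unknown, references)
print(label, {k: round(v, 3) for k, v in scores.items()})
```

Both reference columns fall within zero to 100, so a range check alone would not separate them; their shapes differ sharply, and that difference is what the statistic picks up.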

In the next stage, the team will analyze both weather data and simulated underwater data. Knoblock aims to detect sensor failure automatically, train the system to handle different data formats, and exploit new sensors’ additional capabilities, even when the system does not know what the new data represents.

Among the applications: helping geoscientists collect ocean data by letting them simulate a return to an exact location at the same time of year. Vital medical data could be reconstructed when devices fail to register signals, and data-intensive cellphone functions such as GPS could be simulated at lower cost. ISI’s Matthew French also pointed out that interlaid atmospheric sensors could enrich knowledge of space, a sign that the cross-disciplinary thrust of “What’s Going On” really is going on.

Published on April 18th, 2017

Last updated on June 3rd, 2021