With innovations in machine learning and AI occurring at faster speeds than ever before, the annual Conference on Neural Information Processing Systems (NeurIPS) brings together researchers and engineers to share new discoveries and collaborate on ideas to propel artificial intelligence into the future.

In total, 30 papers co-authored by USC-affiliated researchers have been selected for presentation at this week’s 2021 event (Dec. 6-14), showcasing novel work that could ultimately revolutionize industries and benefit humanity. NeurIPS is one of the top conferences in machine learning and artificial intelligence research in the world, with an overall acceptance rate of 26%.

Three USC papers were selected for oral presentations, with an acceptance rate of less than 1%. Five USC papers were selected for spotlights, with an acceptance rate of 3%.

NeurIPS 2021: Presentation Spotlight

Matt Fontaine, USC Computer Science Ph.D. Student

In a paper selected for a prestigious oral presentation, lead author Matt Fontaine and his supervisor, Assistant Professor Stefanos Nikolaidis, propose the first differentiable version of Quality Diversity (QD) optimization. Applications could include image generation, damage recovery for robots, and video game development.

Q&A with Matt Fontaine

In a few sentences, can you explain what this research is about? And, do tell: how does Beyoncé figure in the study?

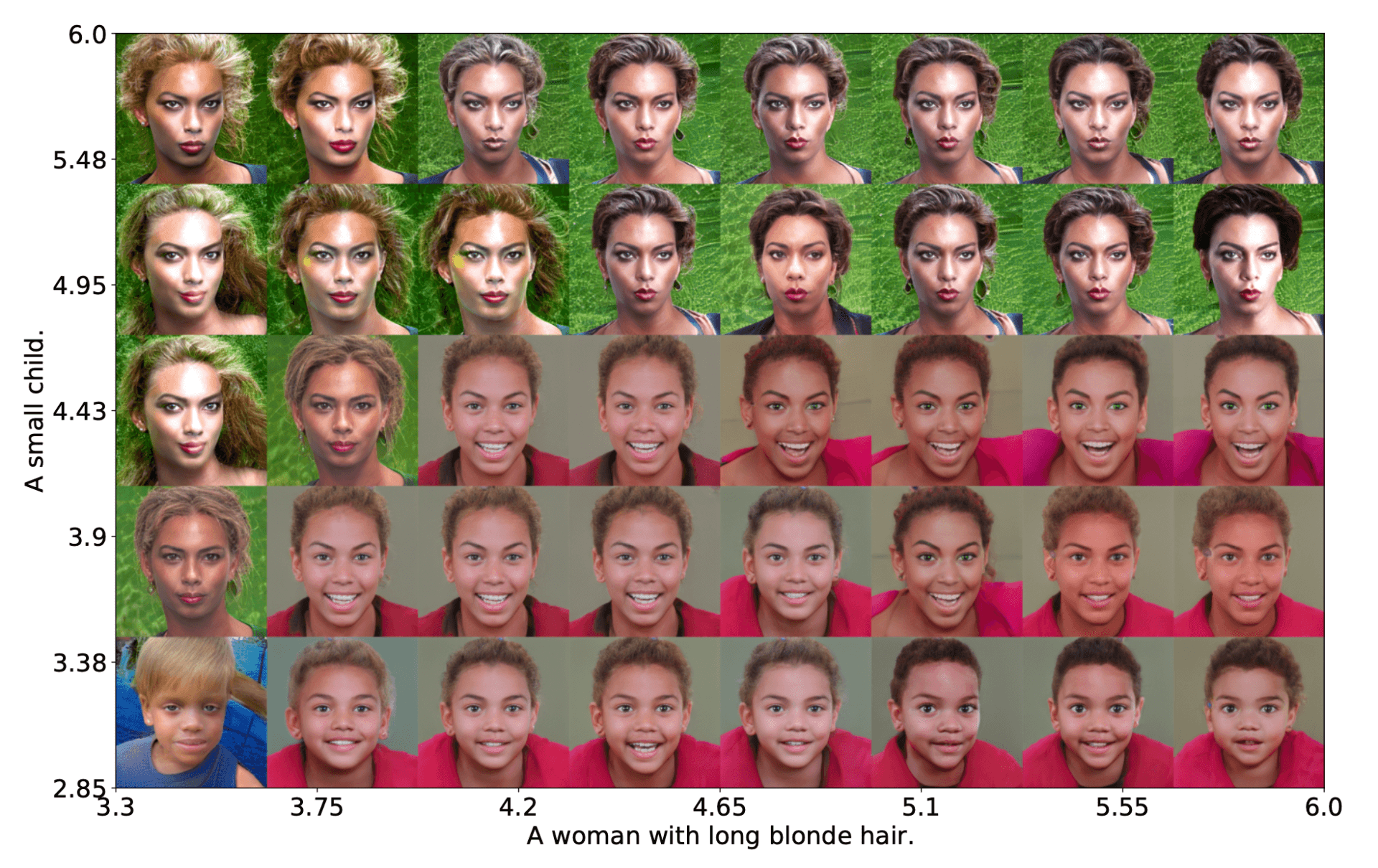

Many deep learning problems boil the problem down to a single objective. For example, if you were to generate a photo of a celebrity—say Beyoncé—then your objective would be to make an image as close to that prompt as possible. However, did you want an older or younger Beyoncé? The deep learning approach would require you to pick a specific age and add that to your objective.

In contrast, the evolutionary computation field has a problem called a "quality diversity problem." In addition to the objective, you can specify measures such as age. A quality diversity (QD) algorithm can then take these inputs and discover a collection of photos—instead of a single photo—of Beyoncé for a range of ages, or any other measures that you wish to have diversity for.

Above: a collection of AI-generated images of "Beyoncé" that vary with respect to age and hair length from a single run of the algorithm.
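To make the idea of "a collection instead of a single answer" concrete, here is a minimal sketch in the style of MAP-Elites, a well-known QD algorithm. It is not the method from the paper: the `objective` and `measure` functions are hypothetical toy stand-ins (a real system would score generated images and estimate something like age), and the search uses plain random mutation.

```python
import random

def objective(x):
    # Hypothetical quality score (higher is better); peaks at the origin.
    return -(x[0] ** 2 + x[1] ** 2)

def measure(x):
    # Hypothetical measure, a stand-in for something like "age", clipped to [0, 1].
    return min(max((x[0] + 1.0) / 2.0, 0.0), 1.0)

def run_archive_search(iterations=2000, bins=10, seed=0):
    rng = random.Random(seed)
    archive = {}  # measure bin -> (best score so far, solution)
    for _ in range(iterations):
        if archive and rng.random() < 0.9:
            # Mutate a solution that is already stored in the archive.
            _, parent = rng.choice(list(archive.values()))
            child = [p + rng.gauss(0.0, 0.1) for p in parent]
        else:
            # Otherwise sample a fresh random solution.
            child = [rng.uniform(-1.0, 1.0) for _ in range(2)]
        cell = min(int(measure(child) * bins), bins - 1)
        score = objective(child)
        # Keep the child only if its bin is empty or it beats the stored solution.
        if cell not in archive or score > archive[cell][0]:
            archive[cell] = (score, child)
    return archive

archive = run_archive_search()
for cell in sorted(archive):
    print(f"measure bin {cell}: best score {archive[cell][0]:.3f}")
```

The result is one "best so far" solution per measure bin, which mirrors the grid of images above: many good answers spread across the measure rather than one answer at a single point.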

In addition to image generation, what are some of the applications of QD algorithms?

QD algorithms have seen applications in damage recovery for robots, generating diverse collections of video game levels that are playable, and designing aerodynamic shapes—think airplane wings or cars.

Our earlier work shows how QD algorithms can generate collections of diverse failure scenarios for human-robot interaction and generate a diverse collection of environments that cause difficulty for a human-robot team.

What are some of the limitations of current QD algorithms?

One advantage of single objectives in deep learning is that you can take the gradient of the objective to make the search efficient. The gradient is like a compass that points towards the direction in which you need to go to improve your objective. Current QD algorithms only approximate this compass through trial and error, making the algorithms inefficient. Leveraging exact gradients in quality diversity optimization has been an open problem.
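The difference between an exact "compass" and a trial-and-error one can be sketched on a toy function. The code below is only an illustration under assumptions: `f` is a hypothetical objective, and the sampling estimator is a generic random-perturbation scheme, not the procedure used by any particular QD algorithm.

```python
import random

def f(x):
    # Hypothetical objective (higher is better).
    return -sum(v * v for v in x)

def exact_gradient(x):
    # Analytic gradient of f: available at no extra evaluation cost.
    return [-2.0 * v for v in x]

def sampled_gradient(x, samples=200, sigma=0.1, seed=0):
    # Trial-and-error estimate: perturb x randomly, compare objective values,
    # and average the perturbation directions weighted by the observed change.
    rng = random.Random(seed)
    grad = [0.0] * len(x)
    for _ in range(samples):
        noise = [rng.gauss(0.0, 1.0) for _ in x]
        plus = f([v + sigma * n for v, n in zip(x, noise)])
        minus = f([v - sigma * n for v, n in zip(x, noise)])
        slope = (plus - minus) / (2.0 * sigma)
        for i, n in enumerate(noise):
            grad[i] += slope * n / samples
    return grad

point = [1.0, -2.0]
print("exact:  ", exact_gradient(point))
print("sampled:", sampled_gradient(point))
```

The sampled version needs hundreds of extra evaluations of `f` and still returns only a noisy direction, which is the inefficiency described above.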

What's your proposed solution?

In our paper, we show how to use not only a "gradient compass" for the objective (e.g., Beyoncé) but also a "gradient compass" for the measures (e.g., age), which allows us to efficiently increase or decrease the age of the person in the generated photo. Our approach allows us to bring the ideas of quality diversity from the evolutionary computation community and make them efficient in deep learning contexts.
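As a rough sketch of what having two compasses buys you, the toy code below steps along a weighted combination of an objective gradient and a measure gradient. Everything here is an assumption made for illustration: the functions, the weights, and the `step` helper are hypothetical, and the paper's actual algorithm is not reproduced.

```python
def objective(x):
    # Hypothetical quality score (higher is better).
    return -(x[0] ** 2 + x[1] ** 2)

def objective_gradient(x):
    return [-2.0 * x[0], -2.0 * x[1]]

def measure(x):
    # Hypothetical measure, a stand-in for something like "age".
    return x[0] + 0.5 * x[1]

def measure_gradient(x):
    # The toy measure is linear, so its gradient is constant.
    return [1.0, 0.5]

def step(x, objective_weight, measure_weight, step_size=0.1):
    # Move along a weighted mix of the two gradients.
    g_obj = objective_gradient(x)
    g_mea = measure_gradient(x)
    return [
        v + step_size * (objective_weight * go + measure_weight * gm)
        for v, go, gm in zip(x, g_obj, g_mea)
    ]

start = [0.5, 0.5]
older = step(start, objective_weight=1.0, measure_weight=3.0)
younger = step(start, objective_weight=1.0, measure_weight=-3.0)
print(measure(younger), measure(start), measure(older))
```

A positive measure weight pushes the measure up and a negative one pushes it down, so a search can steer toward unexplored parts of the measure range instead of waiting for random mutations to land there.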

Why are you excited about this research?

Most of machine learning right now uses gradients and the gradient descent algorithm, which means the potential for impacting the ML field is huge. In fact, nearly every paper at NeurIPS uses gradient descent—the name for "gradient compass" guided search—in some way.

We expect our approach to have broad impacts across many different subfields of deep learning, which is really exciting. Our approach opens up many potential applications anywhere in machine learning; we expect the applications for quality diversity to broaden greatly now that we have differentiable quality diversity algorithms.

USC Papers:

Oral presentations:

MAUVE: Measuring the Gap Between Neural Text and Human Text using Divergence Frontiers

Krishna Pillutla (University of Washington), Swabha Swayamdipta (Allen Institute for AI/USC), Rowan Zellers (University of Washington), John Thickstun (University of Washington), Sean Welleck (University of Washington), Yejin Choi (University of Washington), Zaid Harchaoui (University of Washington)

Tiancheng Jin (University of Southern California), Longbo Huang (IIIS, Tsinghua University), Haipeng Luo (University of Southern California)

Differentiable Quality Diversity

Matthew Fontaine (University of Southern California), Stefanos Nikolaidis (University of Southern California)

Spotlight presentations:

Multiwavelet-based Operator Learning for Differential Equations

Gaurav Gupta (University of Southern California), Xiongye Xiao (University of Southern California), Paul Bogdan (University of Southern California)

Uniform Sampling over Episode Difficulty

Sébastien Arnold (University of Southern California), Guneet S Dhillon (Amazon Web Services), Avinash Ravichandran (AWS), Stefano Soatto (UCLA)

MEST: Accurate and Fast Memory-Economic Sparse Training Framework on the Edge

Geng Yuan (Northeastern University), Xiaolong Ma (Northeastern University), Wei Niu (The College of William and Mary), Zhengang Li (Northeastern University), Zhenglun Kong (Northeastern University), Ning Liu (Midea), Yifan Gong (Northeastern University), Zheng Zhan (Northeastern University), Chaoyang He (University of Southern California), Qing Jin (Northeastern University), Siyue Wang (Google), Minghai Qin (WDC Research), Bin Ren (Department of Computer Science, College of William and Mary), Yanzhi Wang (Northeastern University), Sijia Liu (Michigan State University), Xue Lin (Northeastern University)

Refining Language Models with Compositional Explanations

Huihan Yao (Peking University), Ying Chen (Tsinghua University), Qinyuan Ye (University of Southern California), Xisen Jin (University of Southern California), Xiang Ren (University of Southern California)

Conformal Prediction using Conditional Histograms

Matteo Sesia (University of Southern California), Yaniv Romano (Stanford University)

Poster presentations:

Analyzing the Confidentiality of Undistillable Teachers in Knowledge Distillation

Souvik Kundu (University of Southern California), Qirui Sun (University of Southern California), Yao Fu (University of Southern California), Massoud Pedram (University of Southern California), Peter Beerel (University of Southern California)

Lifelong Domain Adaptation via Consolidated Internal Distribution

Mohammad Rostami (University of Southern California)

Gradient-based Editing of Memory Examples for Online Task-free Continual Learning

Xisen Jin (University of Southern California), Arka Sadhu (University of Southern California), Junyi Du (University of Southern California), Xiang Ren (University of Southern California)

Learning to Synthesize Programs as Interpretable and Generalizable Policies

Dweep Kumarbhai Trivedi (University of Southern California), Jesse Zhang (UC Berkeley), Shao-Hua Sun (University of Southern California), Joseph Lim (University of Southern California)

Generalizable Imitation Learning from Observation via Inferring Goal Proximity

Youngwoon Lee (University of Southern California), Andrew Szot (Georgia Institute of Technology), Shao-Hua Sun (University of Southern California), Joseph Lim (University of Southern California)

SalKG: Learning From Knowledge Graph Explanations for Commonsense Reasoning

Aaron Chan (University of Southern California), Jiashu Xu (University of Southern California), Boyuan Long (University of Southern California), Soumya Sanyal (IISc, Bangalore), Tanishq Gupta (Indian Institute of Technology Delhi), Xiang Ren (University of Southern California)

Collaborative Uncertainty in Multi-Agent Trajectory Forecasting

Bohan Tang (University of Oxford), Yiqi Zhong (University of Southern California), Ulrich Neumann (USC), Gang Wang (Beijing Institute of Technology), Siheng Chen (MERL), Ya Zhang (Cooperative Medianet Innovation Center, Shanghai Jiao Tong University)

Hamiltonian Dynamics with Non-Newtonian Momentum for Rapid Sampling

Greg Ver Steeg (USC Information Sciences Institute), Aram Galstyan (USC Information Sciences Institute)

Dominik Stöger (Katholische Universität Eichstätt-Ingolstadt), Mahdi Soltanolkotabi (University of Southern California)

Implicit SVD for Graph Representation Learning

Sami A Abu-El-Haija (USC Information Sciences Institute), Hesham Mostafa (Intel Corporation), Marcel Nassar (Intel), Valentino Crespi (Information Sciences Institute - USC), Greg Ver Steeg (USC Information Sciences Institute), Aram Galstyan (USC Information Sciences Institute)

VigDet: Knowledge Informed Neural Temporal Point Process for Coordination Detection on Social Media

Yizhou Zhang (University of Southern California), Karishma Sharma (University of Southern California), Yan Liu (University of Southern California)

Implicit Finite-Horizon Approximation and Efficient Optimal Algorithms for Stochastic Shortest Path

Liyu Chen (University of Southern California), Mehdi Jafarnia-Jahromi (University of Southern California), Rahul Jain (University of Southern California), Haipeng Luo (University of Southern California)

Fairness in Ranking under Uncertainty

Ashudeep Singh (Cornell University), David Kempe (U. of Southern California), Thorsten Joachims (Cornell)

Scalable Inference of Sparsely-changing Gaussian Markov Random Fields

Salar Fattahi (University of Michigan), Andres Gomez (University of Southern California)

Robust Allocations with Diversity Constraints

Zeyu Shen (Duke University), Lodewijk Gelauff (Stanford University), Ashish Goel (Stanford University), Aleksandra Korolova (University of Southern California), Kamesh Munagala (Duke University)

Information-theoretic generalization bounds for black-box learning algorithms

Hrayr Harutyunyan (USC Information Sciences Institute), Maxim Raginsky (University of Illinois at Urbana-Champaign), Greg Ver Steeg (USC Information Sciences Institute), Aram Galstyan (USC Information Sciences Institute)

Last-iterate Convergence in Extensive-Form Games

Chung-Wei Lee (University of Southern California), Christian Kroer (Columbia University), Haipeng Luo (University of Southern California)

Policy Optimization in Adversarial MDPs: Improved Exploration via Dilated Bonuses

Haipeng Luo (University of Southern California), Chen-Yu Wei (University of Southern California), Chung-Wei Lee (University of Southern California)

On Model Calibration for Long-Tailed Object Detection and Instance Segmentation

Tai-Yu Pan (The Ohio State University), Cheng Zhang (The Ohio State University), Yandong Li (University of Central Florida), Hexiang Hu (University of Southern California), Dong Xuan (Ohio State University), Soravit Changpinyo (University of Southern California), Boqing Gong (University of Central Florida), Wei-Lun Chao (Ohio State University)

Controlling Neural Networks with Rule Representations

Sungyong Seo (University of Southern California), Sercan Arik (Google), Jinsung Yoon (Google), Xiang Zhang (New York University), Kihyuk Sohn (Google), Tomas Pfister (Google)

Decoupling the Depth and Scope of Graph Neural Networks

Hanqing Zeng (University of Southern California), Muhan Zhang (Peking University), Yinglong Xia (University of Southern California), Ajitesh Srivastava (University of Southern California), Andrey Malevich (Facebook), Rajgopal Kannan (DoD HPCMP), Viktor Prasanna (University of Southern California), Long Jin (Facebook), Ren Chen (University of Southern California)

Luna: Linear Unified Nested Attention

Xuezhe Ma (Carnegie Mellon University), Xiang Kong (Carnegie Mellon University), Sinong Wang (Facebook AI), Chunting Zhou (Language Technologies Institute, Carnegie Mellon University), Jonathan May (University of Southern California), Hao Ma (Facebook AI), Luke Zettlemoyer (University of Washington and Facebook)

Published on December 6th, 2021

Last updated on May 16th, 2024