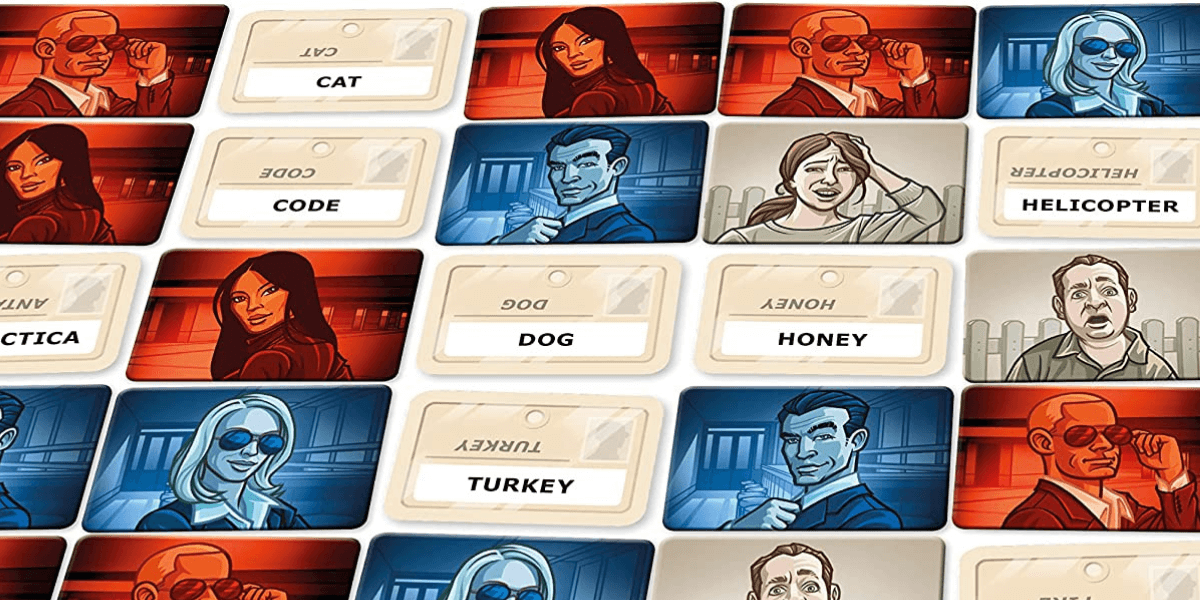

Picture courtesy of CGE Czech Games Edition Codenames Boardgame

Imagine a word game in which barriers between communication and culture do not exist, and in which players can both interpret and predict one another’s gameplay based on cultural background and behavior.

Fred Morstatter, a research team lead at USC Viterbi’s Information Sciences Institute (ISI); Omar Shaikh and Caleb Ziems, Ph.D. students at Stanford University; William Held, a Ph.D. student at Georgia Tech; Aryan J. Pariani, a master’s student at Georgia Tech; and Diyi Yang, an assistant professor at Stanford University, are presenting the paper “Modeling Cross-Cultural Pragmatic Inference with Codenames Duet” at the 61st Annual Meeting of the Association for Computational Linguistics (ACL’23).

Morstatter was inspired to write this paper by an AI seminar series at ISI led by Diyi Yang (Stanford University), where the two found considerable overlap in the research questions they wanted to pursue. He was also excited that the project connected to his previous work on understanding culture through remote sensing of platforms such as Reddit, Telegram, and Twitter.

Codenames Duet is a cooperative word association game in which two players give each other one-word clues to help their partner guess secret words on a shared board. It challenges players to think critically as they plan each move. The game also draws on a wide range of cultural knowledge, which lets players from different backgrounds engage and connect with each other.

Every player approaches Codenames differently, because each brings a different background and body of knowledge that shapes how they interpret clues and choose their next guesses. This allowed the researchers to study a central question: how does cultural background affect playstyle?

“The fact that we can predict what someone else will guess in Codenames so well based on their cultural background is very interesting and enlightening. It sheds light on how people decide how they set up clues, communicate with each other, etc.” Morstatter added.

Sociocultural Background Affects Interpersonal Communication

Barriers to communication often recur when individuals and groups come from different sociocultural backgrounds or speak different native languages. To study the role of culture in social interactions, the researchers therefore modeled pragmatic failure: a mismatch between a speaker’s intended meaning and the listener’s interpretation.

Pragmatic failures can occur in many contexts. A speaker may use sarcasm that the listener interprets literally, for example, which can lead to confusion or offense. The research is thus designed to “propose a setting that captures the diversity of pragmatic inference across sociocultural backgrounds.”

Sociocultural background shapes more than how individuals communicate with one another. It also changes how social situations are perceived, including the social cues exchanged in Codenames.

Morstatter’s background is in studying human behavior with AI systems: understanding what people are going to do, forecasting behavior, and identifying stereotypes and biases.

“There is no specific problem within this research that we are trying to solve. Rather, this is a study to dive deep into the nuances of human behavior and explain how cultural markers can affect decision-making. Therefore, Codenames Duet is a great starting point because it’s a sterile and clean environment to test out how these things are affecting each other.”

The Dataset

Codenames Duet is a variation of Codenames that follows a 5×5 format of 25 common words for players to guess. Each player has a distinct set of words on the board – goal, neutral, and avoid – and the objective is to guess the partner’s goal words without guessing any of the avoid words.
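The three word types can be pictured with a short Python sketch. This is only an illustration, not the researchers’ implementation: the function name `score_guesses`, the four sample words, and the simplified rule that a single avoid word ends the game are all assumptions made for this example.

```python
from enum import Enum


class Label(Enum):
    GOAL = "goal"
    NEUTRAL = "neutral"
    AVOID = "avoid"


def score_guesses(partner_labels: dict[str, Label], guesses: list[str]) -> dict:
    """Check one player's guesses against the partner's word labels.

    Assumed, simplified rule: guessing an avoid word loses the game
    immediately, and any guesses after it are ignored.
    """
    found = []
    for word in guesses:
        label = partner_labels[word]
        if label is Label.AVOID:
            return {"found": found, "lost": True}
        if label is Label.GOAL:
            found.append(word)
    return {"found": found, "lost": False}


# Hypothetical labels for four of the 25 board words, as the partner sees them.
labels = {
    "river": Label.GOAL,
    "cloud": Label.GOAL,
    "storm": Label.NEUTRAL,
    "bank": Label.AVOID,
}

print(score_guesses(labels, ["river", "storm"]))
print(score_guesses(labels, ["river", "bank", "cloud"]))
```

In the second call the guesser reaches an avoid word before finding the remaining goal word, so the sketch reports a loss with only one goal word found.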

The researchers selected the 100 most abstract words, which are more likely to elicit diverse responses from players. Players were recruited through Amazon’s Mechanical Turk and were given instructions on how to play the game.

“An example outlined the clue giver targets the word fall and drop, giving the hint slip. The guesser misinterprets slip as a piece of paper, guessing receipt and check.”

To model the role of sociocultural factors in pragmatic inference, the researchers gathered background information from each player: demographic data (age, country of origin, and whether English is their native language), personality, and moral and political leanings. These factors play a critical role in individual development and functioning, which in turn shape the decision-making and critical thinking the game demands.

“We found that the demographics were moderately diverse, and gender was evenly split as 53% female-identified, 47% as male, and 0% as other. The biggest personality traits based on the Big 5 Personality Test included: Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism; and there was a split among political leaning as 57% identified as liberal, 39% as conservative, and 5% as libertarian.”

The researchers collected 794 games, with a total of 199 wins and 595 losses. The games added up to 7,703 total turns, an average of about 9.7 turns per game. Experiments on this dataset show that models mirror players’ inferences more closely when they take sociocultural background into account. In particular, accounting for background characteristics significantly improves model performance on tasks related to clue-giving and guessing.
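To put the win and loss counts in perspective, the short Python check below works out the share of games that ended in a win. It uses only the figures reported above; the variable names are chosen for this illustration.

```python
games = 794
wins = 199
losses = 595

# The reported wins and losses account for every collected game.
assert wins + losses == games

win_rate = wins / games
print(f"win rate: {win_rate:.1%}")
```

Roughly one game in four ended in a win.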

“There’s a lot to be said about the interplay between culture and language – and how language is used. We only explore English here, so the language is fixed in that sense, but the cultures are not” Morstatter added.

The Future of AI Systems and Culture

AI models are more than capable of understanding cultural backgrounds through the lens of statistical data. However, these systems still struggle to understand culture through the lens of norms, values, and even slang.

“AI systems in general need to be better informed about cultural backgrounds or else they are going to fail or make bad predictions, or even are going to offend the people that are interacting with them; and that is true across any human-facing AI system,” Morstatter added.

That said, there are many opportunities to explore how AI can use cultural background to predict what people intend to do next. Consider financial markets, where AI systems use financial data to predict stock prices, or healthcare, where AI systems can analyze patient data to predict disease outcomes and develop personalized treatment plans.

“Codenames Duet is only a starting point because it is a sterile and clean environment to test out how cultural understandings are affecting each other. I hope that in the future, technicians can look at how we operationalize culture and improve upon it.”

There are endless possibilities with AI.

“I hope that policymakers learn from this paper in the sense that they like to frame AI as good or bad; Yet I don’t think that is the right way to think of it. The important question is – how can AI be made to serve people to be better? Codenames are just another way to answer that question.”

Research Beyond Codenames

Although the study uses a limited vocabulary of just 100 words, the researchers hope to find similar results in domains beyond Codenames.

Learning sociocultural associations can reveal both the positive and negative implications of stereotypes. Documenting and reducing negative stereotypes remains an important avenue for researchers.

Immersing individuals in other cultures opens opportunities for meaningful conversations that encourage more diverse ways of thinking.

“The bottom line is that I hope to work with other students to use games as a lens to understand how people interact; I do know for sure that it is going in a cool future direction.”

ACL 2023

The paper will be presented at the 61st Annual Meeting of the Association for Computational Linguistics (ACL’23). The association is the premier scientific and professional society for people working on computational problems involving human language, a field often referred to as either computational linguistics or natural language processing.

The event will take place in Toronto, Canada from July 9th to July 14th, 2023.

Published on July 12th, 2023

Last updated on May 16th, 2024