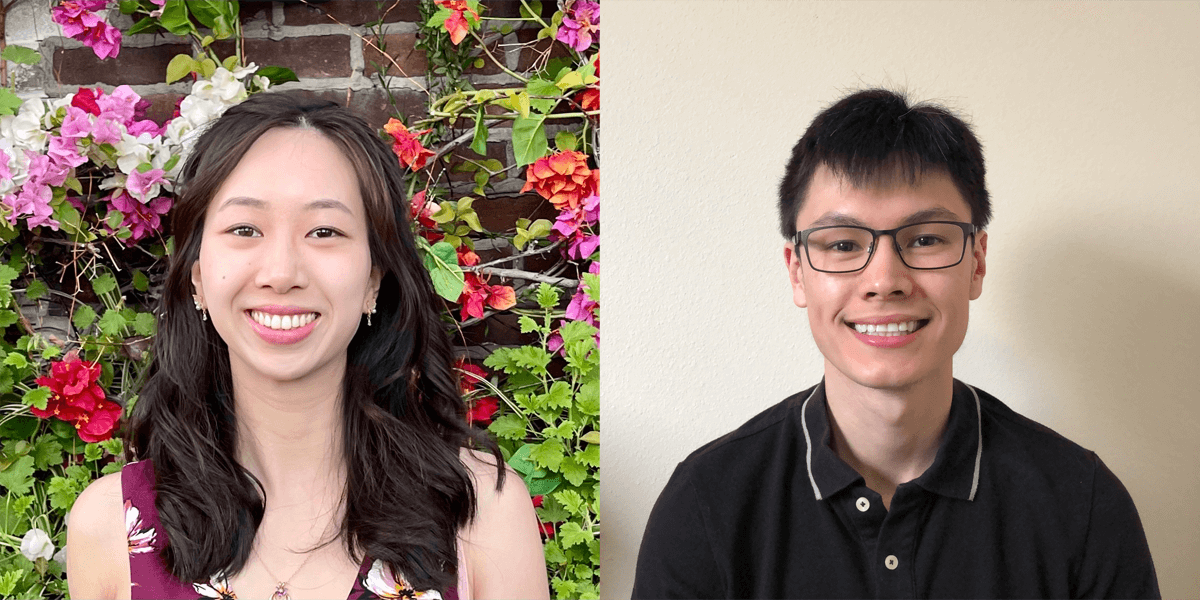

Emily Nguyen and James Flemings both received the 2023 NSF Graduate Research Fellowship Award.

Prescribed fires, also known as prescribed burns, are common in today’s volatile climate as a way to minimize wildfire risk. But how do experts decide when to intentionally set vegetation on fire?

Emily Nguyen, a Ph.D. student studying computer science, received the National Science Foundation Graduate Research Fellowship Program (NSF GRFP) award for her research in deep generative models focusing on wildfire prevention.

Fellow computer science Ph.D. student James Flemings received the NSF GRFP award for his work addressing the privacy-utility tradeoff in machine learning.

NSF has funded more than 60,000 graduate research fellowships since 1952. Fellowship awardees receive an annual stipend of $37,000 for three years, plus a $12,000 annual allowance for tuition and fees. More than 70% of students in the GRFP complete their doctorates within 11 years; 42 fellows have become Nobel laureates, and at least 450 have been named members of the National Academy of Sciences.

Predicting the fuel of the flame

Nguyen was inspired to develop better models for prescribed burning after seeing the catastrophic results of the 2022 Calf Canyon/Hermits Peak blaze. The fire began when two prescribed burns grew out of control, driven by strong April winds across the drought-stricken landscape of northern New Mexico, and it became the largest wildfire in the state’s history.

According to the U.S. Forest Service, which manages these intentional fires, prescribed burns are the “controlled application of fire by a team of fire experts under specified weather conditions to restore health to ecosystems that depend on fire.” However, in the case of the Calf Canyon/Hermits Peak fire, the weather conditions weren’t what the fire experts were expecting. Warmer and drier weather caused the prescribed burn to grow out of control.

Decisions to proceed with a prescribed burn are often informed by weather forecasting models, which are especially crucial because conditions in fire-prone landscapes can change quickly.

“Accurate real-time weather forecasting aids fire management professionals in adapting to changing conditions and minimizes air pollutants for nearby residents’ health and well-being,” Nguyen said. “Early wildfire detection systems will better protect public safety, decrease reparation costs and mitigate climate change-related impacts.”

Enter deep generative models. Although such models are best known for generating art, they can also perform forecasting and anomaly detection, two tasks that are highly important for predicting how likely certain scenarios are.

However, current models are difficult to adapt to real-world problems. “I aim to develop a practical diffusion-based generative model for time series,” Nguyen said. “This can be applied to a variety of areas such as security-based research into intrusion detection, financial forecasting, health science research for remote patient monitoring and the focus of this current research, wildfire prevention.”

After the Calf Canyon/Hermits Peak fire, many affected New Mexico residents lost trust in fire officials’ ability to safely reduce fire risk. Rebuilding that trust will take time, as well as an assessment of what went wrong and how practices can be improved.

“The prevention of wildfires using deep generative models would ensure the safe continuation of current natural and cultural resource management practices, which can strengthen public trust,” Nguyen said.

But that’s not all Nguyen has accomplished. She also has an upcoming research paper, titled “Transferable and Interpretable Treatment Effectiveness Prediction for Ovarian Cancer via Multimodal Deep Learning,” at the American Medical Informatics Association (AMIA) 2023 Annual Symposium.

“The project’s goal is to develop an AI-based tool for early identification of treatment effectiveness in ovarian cancer,” Nguyen said. “If ineffectiveness is identified prior to treatment, then patients could be offered alternative, potentially more effective treatments. This result may improve patient survival rates and well-being while also saving medical resources.”

Using data safely under wraps

Machine learning (ML) models are fueled by data, which can include private information. Take ChatGPT, for example. Although it is trained largely on information publicly available on the internet, Italian regulators temporarily banned the chatbot in spring 2023 over privacy concerns about the data it collects for training purposes. Yet ChatGPT’s strength depends on the vast amount of data it has been trained on; without that data, it would not be as accurate as it is now.

“Simply redacting or removing such sensitive information in ChatGPT’s training data is catastrophic to its performance, resulting in lower accuracy and expressiveness,” Flemings said. “This natural phenomenon is called the privacy-utility tradeoff, and my research looks to address this tradeoff by achieving a high level of privacy while mitigating the accuracy loss of ML models.”

Nearly everyone is affected by the privacy-utility tradeoff, since data is collected everywhere on the internet: when a person shops online, watches a TV show or even just scrolls through the news. This makes data collection and privacy a key issue well beyond the world of ML researchers, who must balance regulatory restrictions against the accuracy needed to build useful models.

“As a user, we want the software applications we use to give us accurate recommendations,” Flemings said. “But at the same time, we also want these systems to uphold the privacy of our data. The privacy-utility tradeoff says we can’t completely have both, but my research strives to achieve a point that gives the best tradeoff for all users.”

His supervisor, Murali Annavaram, Dean’s Professor of Electrical and Computer Engineering, added: “James’ work will help developers automatically deploy the optimal privacy protection scheme for a given system design constraint. This award is a great early validation of the work he is embarking on at USC and I look forward to working with him to achieve his objectives.”

Published on July 6th, 2023

Last updated on May 16th, 2024