A 2023 LA Tech Week panel discussed the impact generative AI such as ChatGPT will have on cybersecurity and fraud. Image/Pexels

Online frauds and phishing scams may seem easy to spot for the most tech-savvy of us — the misspellings, off-brand imagery and poor attempts at sounding official. You may feel confident you’d never fall prey to an email promising you a prince’s unclaimed millions.

However, with the rise of generative AI technology such as ChatGPT, Bard and Midjourney, black hats and fraudsters may now have a new set of tools to help them create sophisticated attacks and scams that are more difficult to detect and stop.

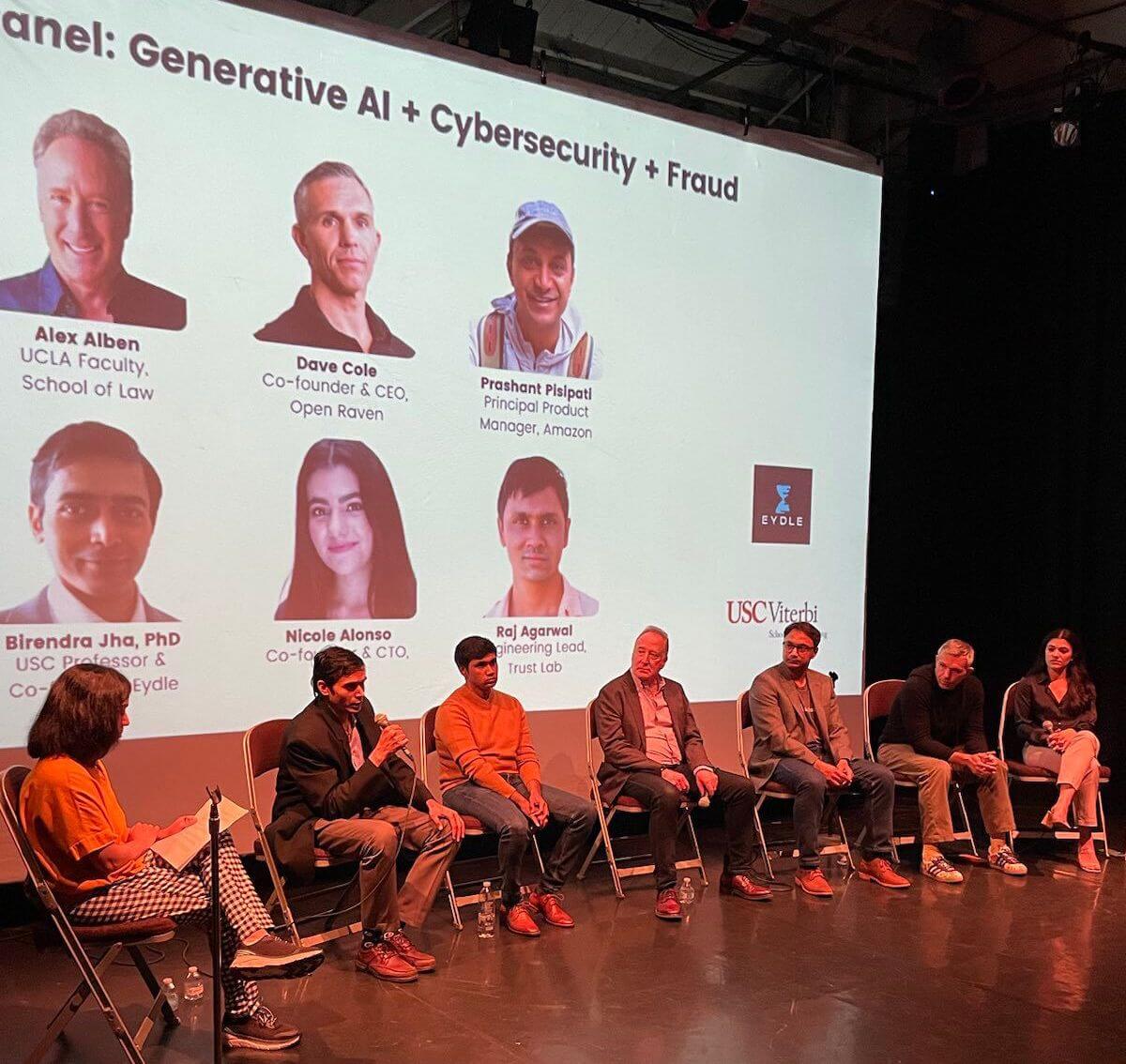

The LA Tech Week panel discussion. Image/Jeffrey Monson

A panel of experts gathered in Santa Monica on June 7 to discuss generative AI platforms and their potential impacts on cybersecurity, fraud, copyright and misinformation. The event was presented as part of LA Tech Week 2023 and hosted by USC Viterbi-led startup Eydle. Eydle was founded by Birendra Jha, an assistant professor in the Mork Family Department of Chemical Engineering and Materials Science, and cybersecurity engineer Ashwini Rao.

Generative AI has exploded in popularity in the past year, offering users of all levels of experience the ability to input prompts and instantly generate high-level text, photorealistic images, video and artwork.

But platforms that can create detailed and realistic content in a flash can also be harnessed by online scammers to create convincing phishing emails, undetectable social media impersonation accounts, or sophisticated fake imagery. Bad actors without a coding background can also more easily generate code that may be used or adapted for illegal applications.

Jha and Rao’s startup Eydle, also supported through the USC Viterbi Startup Garage, was founded to combat a rise in social media impersonations and phishing scams.

A new breed of sophisticated social media fakes

Rao said that social media platforms have seen a 1,800% growth in scams in the last five years. Until now, scam social accounts, like phishing emails, have often been weeded out because of their poor syntax and grammatical errors. But the clear text output offered by ChatGPT could potentially make that a thing of the past. Photorealistic generated images shared on social media can also be weaponized to fool users.

“As they say, a picture is worth a thousand words, so it’s much more dangerous when you have visual scams because they’re more realistic,” Rao said.

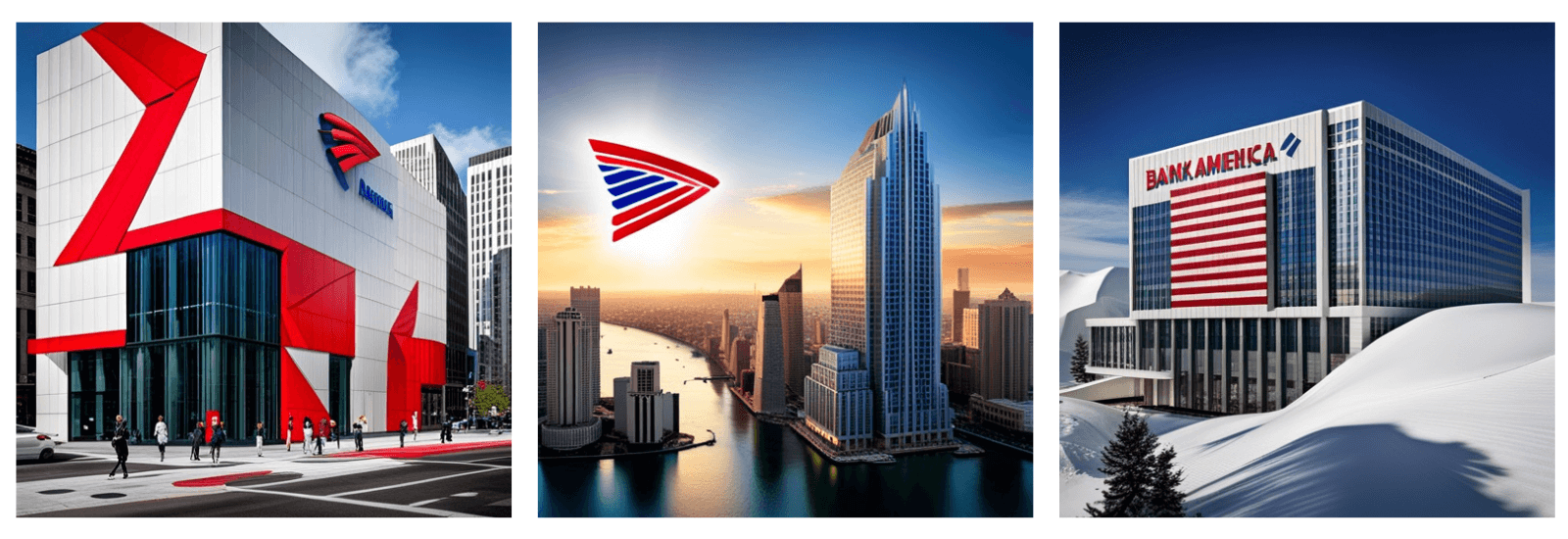

Until now, a scammer impersonating a brand by repurposing imagery such as a bank logo could often be spotted by cybersecurity tools that identify stolen imagery.

“A lot of solutions today that catch those who impersonate a brand like Chase or Bank of America are very deterministic — if you’re comparing two different images, almost every pixel has to match,” Rao said. “Now someone can generate a logo that looks very similar but is not exactly the same, so the solution won’t catch it.”
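The gap Rao describes can be illustrated with a small sketch. This is not Eydle's method or any vendor's detector; it is a toy comparison, under stated assumptions, between an exact pixel-by-pixel check and a simple "average hash," a commonly used perceptual hashing idea swapped in here purely for illustration. The tiny 4x4 grayscale grids, the function names and the `max_distance` threshold are all hypothetical. A real system would work on downscaled versions of full images, and a logo regenerated from scratch may differ far more than this simple hash can tolerate.

```python
def exact_match(a, b):
    """Deterministic check: every pixel must be identical."""
    return a == b


def average_hash(pixels):
    """One bit per pixel: 1 if the pixel is at or above the mean brightness."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p >= mean else 0 for p in flat]


def hamming_distance(h1, h2):
    """Number of positions where the two bit lists differ."""
    return sum(x != y for x, y in zip(h1, h2))


def looks_similar(a, b, max_distance=2):
    """Fuzzy check: hashes may differ in at most max_distance bits (assumed threshold)."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


# Hypothetical 4x4 grayscale "logos" (0 = black, 255 = white).
original = [
    [200, 200, 30, 30],
    [200, 200, 30, 30],
    [30, 30, 200, 200],
    [30, 30, 200, 200],
]

# A near-copy: slightly brighter overall, with one pixel changed.
variant = [[min(255, p + 10) for p in row] for row in original]
variant[0][0] = 180

# A genuinely different pattern.
unrelated = [
    [30, 200, 30, 200],
    [200, 30, 200, 30],
    [30, 200, 30, 200],
    [200, 30, 200, 30],
]

print(exact_match(original, variant))      # exact comparison misses the near-copy
print(looks_similar(original, variant))    # fuzzy comparison flags it
print(looks_similar(original, unrelated))  # a different pattern is not flagged
```

In this toy case the exact comparison fails as soon as a single pixel changes, while the fuzzy comparison still treats the near-copy as a match and leaves the unrelated pattern alone.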

Jha said that scammers can create multiple variations of a real logo, such as the Bank of America imagery shown above, which can be used on social media and can evade detection by current solutions.

“In the past, scammers used to create images by manually modifying the original image by cropping, rotating, or changing the color scheme to evade detection and victimize people,” Jha said. “Now GenAI can be their tool. It takes less than five seconds to do so.”

The images above were created by inputting the prompt “Dream of a logo for Bank of America” into generative AI software.

Jha and Rao said that future solutions to combat frauds and scams should consider generative AI’s capabilities. Jha said that generative AI platforms themselves are also a potential tool for businesses in the fight against scams and attacks.

“I have examples of banks already using the neural architecture underneath these large language models to detect malicious financial transactions,” Jha said.

Could ChatGPT replace tech jobs?

Rao said that while there is a lot of discussion around whether ChatGPT could eventually replace coders, from a cybersecurity perspective the problem remains that there is no guarantee the new AI platforms can even generate valid code. For this reason, Stack Overflow, a popular Q&A site for developers, recently banned answers generated by ChatGPT due to the high potential for inaccuracies. ChatGPT is also susceptible to manipulation and can be steered to produce a desired output.

“We can’t really trust what ChatGPT or a similar tool is saying about the code it’s generating,” Rao said. “So if we cannot trust something ChatGPT says, can someone use that for nefarious purposes? Is there a way that someone can influence what this tool is saying so they can deliberately inject malicious code into systems?”

The panel also discussed another issue facing businesses that want to harness generative AI: copyright. The EU has recently proposed new copyright rules for generative AI, which may prove legally problematic for startups building their products on this technology. Generative AI platforms are trained by scraping information from a vast amount of potentially copyrighted content, making chain-of-title legally murky. Rao said this presents a potential copyright liability risk that startups need to consider when applying this technology.

“So, if a startup is building on top of OpenAI, what is the risk of that to the startup? For example, if the startup is generating comic art and it looks like Hulk or Mickey Mouse, who’s going to get sued? Is it the startup that’s taking the risk, or is it going to be the OpenAI platform?” Rao said.

The fully booked Tech Week panel also included UCLA Law faculty Alex Alben; Co-founder and CEO at Open Raven Dave Cole; Principal Product Manager at Amazon Web Services ML & AI, Alexa Prashant Pisipati; Syro Co-founder and CTO Nicole Alonso; and Raj Agarwal from Trust Lab.

Published on July 7th, 2023

Last updated on July 7th, 2023