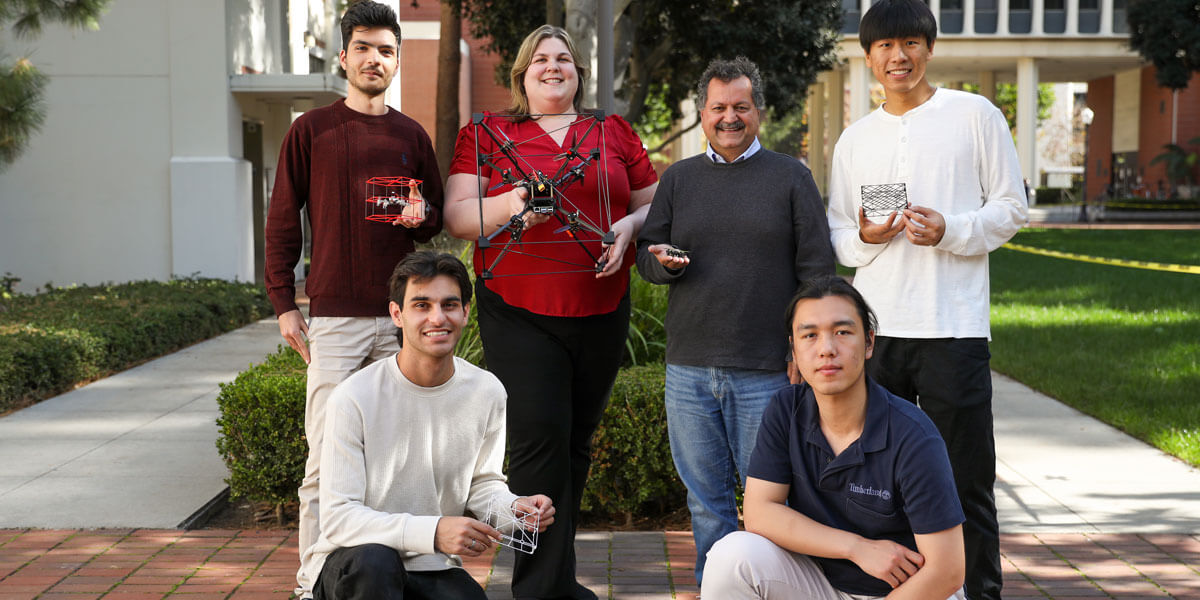

USC researchers led by Shahram Ghandeharizadeh and Heather Culbertson (center) are inching closer to creating a real-world holodeck, using swarms of tiny drones to create 3D objects. Photo/Cooper Brown.

Shaking hands with a character from the Fortnite video game. Visualizing a patient’s heart in 3D, and “feeling” it beat. Touching the walls of the Roman Colosseum from your sofa in Los Angeles. What if we could touch and interact with things that aren’t physically in front of us? This reality might be closer than we think, thanks to an emerging technology: the holodeck.

The name might sound familiar. In Star Trek: The Next Generation, the holodeck was an advanced 3D virtual reality environment that created the illusion of solid objects. Now, immersive technology researchers at USC and beyond are taking us one step closer to turning this science fiction concept into science fact.

On Dec. 15, USC hosted the first International Conference on Holodecks. Organized by Shahram Ghandeharizadeh, a USC associate professor of computer science, the conference featured keynotes, papers and presentations from researchers at USC, Brown University, UCLA, University of Colorado, Stanford University, New Jersey Institute of Technology, UC Riverside, and haptic technology company UltraLeap.

What is a holodeck?

Put simply, said Ghandeharizadeh, holodeck technology is “essentially making virtual objects physically realized.” What distinguishes it from current VR technology is that a holodeck allows us to “feel” objects in the virtual environment.

“Think of a holodeck as a very fast 3D printer,” said Ghandeharizadeh, who specializes in multimedia systems and leads USC’s Flying Light Speck (FLS) lab. An FLS is a miniature drone equipped with light sources; swarms of FLSs could come together to render virtual objects.

“A holodeck will materialize virtual and real objects,” said Ghandeharizadeh. “In addition to seeing the objects with their naked eyes, users could touch and interact with the illuminated objects using their bare hands.”

While the field is still nascent, Ghandeharizadeh and his research partner Heather Culbertson, USC WiSE Gabilan Assistant Professor of Computer Science, have already received support from the National Science Foundation (NSF) and published several papers on the topic at prominent venues, including ACM Multimedia, ACM MMSys, and the IEEE Symposium on Haptics.

Ghandeharizadeh believes holodecks will emerge as a major discipline in the next 5 to 10 years. If so, before long, holodecks could enable video game designers to bring interactive 3D characters to life, revolutionize how doctors visualize and understand the human body, and even allow scientists to “touch” an atom.

“In a nutshell, a holodeck will revolutionize the future of how we work, learn and educate, play and entertain, receive medical care, and socialize,” said Ghandeharizadeh, who plans to make the conference an annual event.

Above: In this simulation created by Ghandeharizadeh and his team, tiny drones swarm to create an object in the shape of a hat. Once the hardware is sufficiently miniaturized, the team hopes to make this simulation a reality.

Five Key Takeaways

1. Tiny drones could render complex 3D shapes in seconds

To realize a holodeck, USC researchers are conducting pioneering research with tiny drones, known as “flying light specks,” that are equipped with sensors and light sources. For example, imagine pushing against a swarm of drones that represents a heavy box, with the drones recreating the feeling of its weight.

PhD student Yang Chen holds an omnicopter drone, which can exert a large amount of force safely (notice the propellers are inside the cage). Photo/Cooper Brown.

“We envision swarms of drones, which act the same way as physical objects in terms of interactability,” said Heather Culbertson, a haptics expert, in her keynote talk titled “Designing and Controlling Haptic Interactions with a Drone.”

Yang Chen, a PhD computer science student advised by Culbertson, presented results from a study showing that people can safely feel the resistance generated by a small drone. The study was approved by the Institutional Review Board (IRB), an ethics committee that reviews research involving human subjects.

“Ensuring the safety of the users is an important consideration and our next step is to investigate user interactions with a swarm of drones,” said Chen.

Hamed Alimohammadzadeh, a co-author of the study who is advised by Ghandeharizadeh, presented a decentralized algorithm that enables a swarm of thousands of FLSs to localize and render complex 2D and 3D shapes continuously in seconds. “An algorithm must be continuous because drones do not stay stationary and drift,” he said.

Above: A USC FLS Lab study demonstrating that people can safely feel the resistance generated by a small drone.

2. Holodecks are already here (to an extent)

Orestis Georgiou, head of research and development partnerships at UltraLeap and author of six patents, highlighted what has already been accomplished in the holodeck realm. UltraLeap uses mid-air haptics, turning ultrasound into virtual touch. This enables users to feel tactile sensations or feedback in the air, without the need for physical contact with a surface or device. HaptoClone, developed by researchers at the University of Tokyo, also uses ultrasound mid-air haptics and physics to create different sensations, shapes, and textures.
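Mid-air ultrasound haptics are commonly built as phased arrays: each transducer's emission is delayed so that every wave arrives at a chosen point in space in phase, concentrating acoustic pressure there so it can be felt on the skin. The snippet below is a minimal sketch of that focusing step only, assuming a hypothetical flat grid of transducers, a speed of sound of 343 m/s in air, and 40 kHz emitters. The function name `focus_phases` is illustrative and is not UltraLeap's or HaptoClone's API; practical devices also modulate the focal point so the skin can perceive it, which this sketch omits.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumption)
FREQUENCY = 40_000.0    # Hz; assumed ultrasonic transducer frequency


def focus_phases(transducers, focal_point, c=SPEED_OF_SOUND, f=FREQUENCY):
    """Hypothetical helper: return a phase delay (radians, in [0, 2*pi)) for each
    transducer so that its wave reaches focal_point in phase with the wave
    from the transducer farthest from that point."""
    distances = [math.dist(t, focal_point) for t in transducers]
    farthest = max(distances)
    wavelength = c / f
    phases = []
    for d in distances:
        # A closer transducer's wave arrives early, so it must be delayed by
        # the extra path length of the farthest one, expressed as a phase.
        extra_path = farthest - d
        phases.append((2 * math.pi * extra_path / wavelength) % (2 * math.pi))
    return phases


# Example: a 4 x 4 grid with 1 cm spacing, focusing 20 cm above its center.
grid = [(x * 0.01, y * 0.01, 0.0) for x in range(4) for y in range(4)]
print([round(p, 2) for p in focus_phases(grid, (0.015, 0.015, 0.20))])
```

Moving the focal point only requires recomputing the phases, which is why such arrays can sweep a point of pressure through the air quickly enough to trace shapes.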

When it comes to the future of holodeck technology, Georgiou said he looks forward to seeing the intersection between AI and mid-air haptics flourish. “I think there’s a lot of potential for using AI to personalize stimuli and also scale the technology,” he said.

Above: HaptoClone, developed by researchers at the University of Tokyo, uses ultrasound mid-air haptics and physics to create different sensations, shapes, and textures.

3. We can learn about realism in VR from nature and the human body

Shuqin Zhu, a doctoral computer science student advised by Ghandeharizadeh, is combining nature-inspired techniques drawn from flocks of birds with modern aviation methods to prevent drones from colliding with one another. “One big challenge is avoiding collisions between millions of drones in a small area,” said Zhu.
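The best-known bird-inspired model is Craig Reynolds' "boids," in which each agent follows simple local rules; one of them, separation, steers an agent away from neighbors that come too close. Below is a minimal sketch of that separation rule alone, assuming hypothetical 3D drone positions in meters and a hypothetical safety radius. It is not the algorithm Zhu presented, which the article says also draws on aviation techniques.

```python
import math


def separation_steer(positions, i, safe_radius):
    """Boids-style separation rule (illustrative sketch): return a steering
    vector that pushes drone i away from every neighbor closer than
    safe_radius, weighted more heavily the closer the neighbor is."""
    px, py, pz = positions[i]
    sx = sy = sz = 0.0
    for j, (qx, qy, qz) in enumerate(positions):
        if j == i:
            continue
        dx, dy, dz = px - qx, py - qy, pz - qz
        d = math.sqrt(dx * dx + dy * dy + dz * dz)
        # Skip distant neighbors; also skip d == 0, where no direction exists.
        if 0.0 < d < safe_radius:
            w = (safe_radius - d) / safe_radius  # 0 at the radius, near 1 when touching
            sx += w * dx / d
            sy += w * dy / d
            sz += w * dz / d
    return (sx, sy, sz)


# Example: two drones 5 cm apart with a 10 cm safety radius push apart along x;
# the third drone is far away and is unaffected.
drones = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (1.0, 1.0, 1.0)]
print(separation_steer(drones, 0, 0.1))
```

A controller would typically scale this vector by a gain and add it to each drone's other steering terms, such as moving toward its assigned point in the shape. Checking every pair costs time proportional to the square of the swarm size, so a swarm of millions of drones would need a spatial index (for example, a grid of cells) to find nearby neighbors, which hints at why Zhu calls collision avoidance at that scale a big challenge.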

Meanwhile, at UCLA, Demetri Terzopoulos and his team are working on biomimetic (biology-imitating) human simulation. Realistic computer simulation of the human body, including the sensory organs and the brain, is a grand challenge in robotics and in the quest for artificial intelligence and artificial life, said Terzopoulos, who has been studying how to make non-player characters (NPCs) in video games seem more human-like.

Rendering realistic humans is already possible, he said. Making them move in a lifelike manner? That’s another story, but he and his team are up for the challenge. “We want to build a virtual brain for our virtual human, [that] trained itself to do all of these tasks just like we do in the real world,” he said.

4. You can look to sci-fi for real-world inspiration

Inspired by computer scientist Ivan Sutherland’s influential 1965 essay, “The Ultimate Display,” Sean Follmer, associate professor of mechanical engineering and computer science at Stanford University, described a vision of an advanced computer that goes beyond the traditional screen.

Scene from the 1973 science fiction film “World on a Wire,” which brought an early literary description of VR to television for the first time.

Sutherland’s essay describes a system that can generate realistic, three-dimensional visual and auditory stimuli, allowing users to interact with a computer-generated environment in a way that closely resembles the real world.

“I think to me, there are two big things that emerge from the vision of Ivan Sutherland’s ultimate display, and the science fiction of the holodeck,” said Follmer. “This notion that what you see is what you feel and that you can be in a [simulated] place, but also experience it physically.”

Creating interfaces that allow for richer physical interaction, said Follmer, could also help people with different abilities, including people with visual impairments, better understand and interact with information.

5. Holodecks could help doctors better understand their patients’ conditions

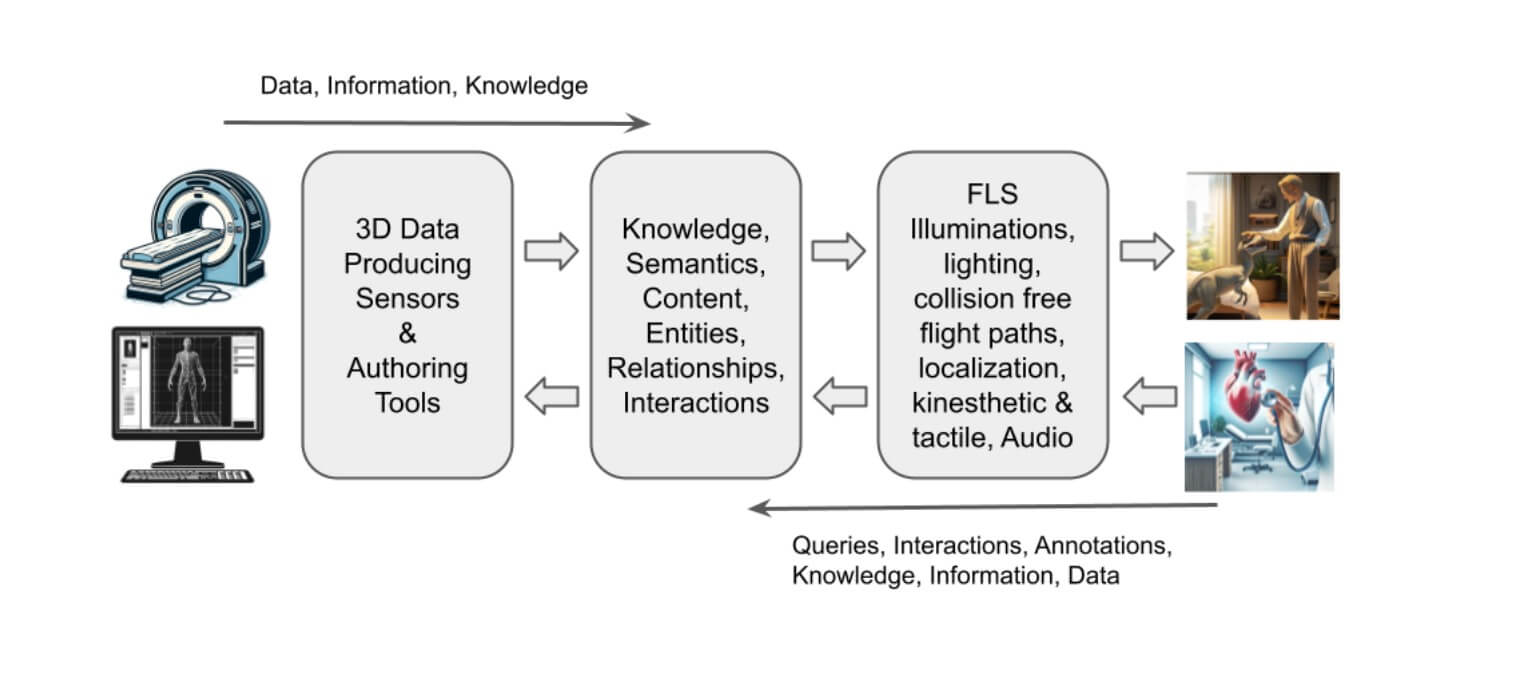

Presenting a paper titled “A Conceptual Model of Intelligent Multimedia Data Rendered using Flying Light Specks,” lead author Nima Yazdani, a PhD computer science student advised by Ghandeharizadeh, explained how their technology could help doctors use the 3D data produced by magnetic resonance imaging (MRI) to better understand their patients’ conditions. “MRIs produce 3-D data, but pretty much all the data is limited because the physician has to observe it on a 2-D screen,” said Yazdani. “We want to tightly integrate [3D light specks] with MRI data so that the physician can, for example, look at the brain in three dimensions, interact with it, feel it, and touch it.”

In their paper, USC researchers propose a method to integrate 3D displays into the ecosystem of today’s devices to facilitate advanced interactions with medical data. Yazdani et al.

Published on February 1st, 2024

Last updated on May 16th, 2024