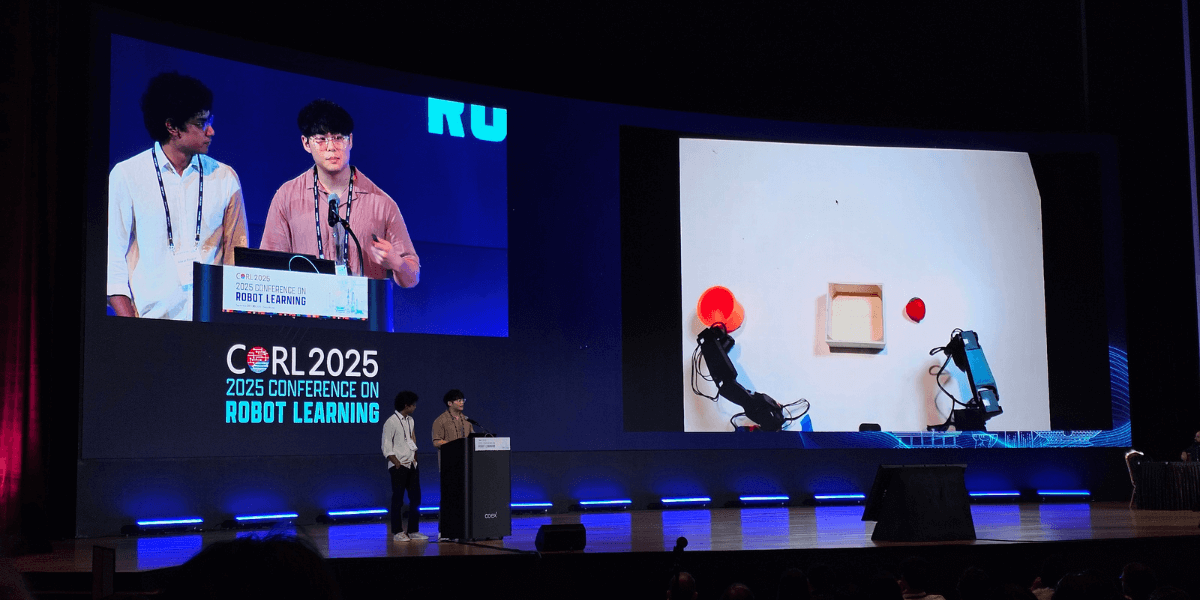

USC PhD student Abrar Anwar and USC alumnus Jesse Zhang presented at CoRL 2025. (Photo by Erdem Bıyık)

USC researchers presented pioneering work at the Conference on Robot Learning (CoRL) 2025, held September 27–30 in Seoul, Korea. The USC-affiliated papers featured collaborations among labs across the USC Viterbi School of Engineering and the USC School of Advanced Computing’s Ming Hsieh Department of Electrical and Computer Engineering and Thomas Lord Department of Computer Science. Their work showcased cutting-edge progress in robotics, from language-guided robot learning and multi-task policy evaluation to scalable data generation, benchmarking of AI models, and advanced manipulation. These breakthroughs further underscore USC’s leadership in advancing the science of robot learning and its real-world impact.

USC-Affiliated Papers

(USC authors in bold)

ReWiND: Language-Guided Rewards Teach Robot Policies without New Demonstrations

Jiahui Zhang, Yusen Luo, Abrar Anwar, Sumedh Anand Sontakke, Joseph J Lim, Jesse Thomason, Erdem Biyik, Jesse Zhang

Session: Manipulation I

Abstract:

We introduce ReWiND, a framework for learning robot manipulation tasks solely from language instructions without per-task demonstrations. Standard reinforcement learning (RL) and imitation learning methods require expert supervision through human-designed reward functions or demonstrations for every new task. In contrast, ReWiND starts from a small demonstration dataset to learn: (1) a data-efficient, language-conditioned reward function that labels the dataset with rewards, and (2) a language-conditioned policy pre-trained with offline RL using these rewards. Given an unseen task variation, ReWiND fine-tunes the pre-trained policy using the learned reward function, requiring minimal online interaction. We show that ReWiND’s reward model generalizes effectively to unseen tasks, outperforming baselines by up to 2.4× in reward generalization and policy alignment metrics. Finally, we demonstrate that ReWiND enables sample-efficient adaptation to new tasks in both simulation and on a real bimanual manipulation platform, taking a step towards scalable, real-world robot learning.

Efficient Evaluation of Multi-Task Robot Policies With Active Experiment Selection

Abrar Anwar, Rohan Gupta, Zain Merchant, Sayan Ghosh, Willie Neiswanger, Jesse Thomason

Session: Humanoid & Hardware

Abstract:

Evaluating learned robot control policies to determine their performance costs the experimenter time and effort. As robots become more capable in accomplishing diverse tasks, evaluating across all these tasks becomes more difficult as it is impractical to test every policy on every task multiple times. Rather than considering the average performance of a policy on a task, we consider the distribution of performance over time. In a multi-task policy evaluation setting, we actively model the distribution of robot performance across multiple tasks and policies as we sequentially execute experiments. We show that natural language is a useful prior in modeling relationships between tasks because they often share similarities that can reveal potential relationships in policy behavior. We leverage this formulation to reduce experimenter effort by using a cost-aware information gain heuristic to efficiently select informative trials. We conduct experiments on existing evaluation data from real robots and simulations and find a 50% reduction in estimates of the mean performance given a fixed cost budget. We encourage the use of our surrogate model as a scalable approach to track progress in evaluation.

Granular loco-manipulation: Repositioning rocks through strategic sand avalanche

Haodi Hu, Yue Wu, Daniel Seita, Feifei Qian

Session: Humanoid & Hardware

Abstract:

Legged robots have the potential to leverage obstacles to climb steep sand slopes. However, efficiently repositioning these obstacles to desired locations is challenging. Here we present DiffusiveGRAIN, a learning-based method that enables a multi-legged robot to strategically induce localized sand avalanches during locomotion and indirectly manipulate obstacles. We conducted 375 trials, systematically varying obstacle spacing, robot orientation, and leg actions in 75 of them. Results show that the movements of closely spaced obstacles exhibit significant interference, requiring joint modeling. In addition, different multi-leg excavation actions could cause distinct robot state changes, necessitating integrated planning of manipulation and locomotion. To address these challenges, DiffusiveGRAIN includes a diffusion-based environment predictor to capture multi-obstacle movements under granular flow interferences and a robot state predictor to estimate changes in robot state from multi-leg action patterns. Deployment experiments (90 trials) demonstrate that by integrating the environment and robot state predictors, the robot can autonomously plan its movements based on loco-manipulation goals, successfully shifting closely located rocks to desired locations in over 65% of trials. Our study showcases the potential for a locomoting robot to strategically manipulate obstacles to achieve improved mobility on challenging terrains.

ManipBench: Benchmarking Vision-Language Models for Low-Level Robot Manipulation

Enyu Zhao, Vedant Raval, Hejia Zhang, Jiageng Mao, Zeyu Shangguan, Stefanos Nikolaidis, Yue Wang, Daniel Seita

Session: Planning & Safety & Robustness

Abstract:

Vision-Language Models (VLMs) have revolutionized artificial intelligence and robotics due to their commonsense reasoning capabilities. In robotic manipulation, VLMs are used primarily as high-level planners, but recent work has also studied their lower-level reasoning ability, which refers to making decisions about precise robot movements. However, the community currently lacks a clear and common benchmark that can evaluate how well VLMs can aid low-level reasoning in robotics. Consequently, we propose a novel benchmark, ManipBench, to evaluate the low-level robot manipulation reasoning capabilities of VLMs across various dimensions, including how well they understand object-object interactions and deformable object manipulation. We extensively test 35 common and state-of-the-art VLM families on our benchmark, including variants to test different model sizes. The performance of VLMs varies significantly across tasks, and there is a strong correlation between this performance and trends in our real-world manipulation tasks. The benchmark also shows that a significant gap remains between these models and human-level understanding.

D-CODA: Diffusion for Coordinated Dual-Arm Data Augmentation

I-Chun Arthur Liu, Jason Chen, Gaurav S. Sukhatme, Daniel Seita

Session: Humanoid & Hardware

Abstract:

Learning bimanual manipulation is challenging due to its high dimensionality and the tight coordination required between the two arms. Eye-in-hand imitation learning, which uses wrist-mounted cameras, simplifies perception by focusing on task-relevant views. However, collecting diverse demonstrations remains costly, motivating the need for scalable data augmentation. While prior work has explored visual augmentation in single-arm settings, extending these approaches to bimanual manipulation requires generating viewpoint-consistent observations across both arms and producing corresponding action labels that are both valid and feasible. In this work, we propose Diffusion for COordinated Dual-arm Data Augmentation (D-CODA), a method for offline data augmentation tailored to eye-in-hand bimanual imitation learning that trains a diffusion model to synthesize novel, viewpoint-consistent wrist-camera images for both arms while simultaneously generating joint-space action labels. It employs constrained optimization to ensure that augmented states involving gripper-to-object contacts adhere to constraints suitable for bimanual coordination. We evaluate D-CODA on 5 simulated and 3 real-world tasks. Our results across 2250 simulation trials and 180 real-world trials demonstrate that it outperforms baselines and ablations, showing its potential for scalable data augmentation in eye-in-hand bimanual manipulation. Our project website is at https://dcodaaug.github.io/D-CODA/.

Robot Learning from Any Images

Siheng Zhao, Jiageng Mao, Wei Chow, Zeyu Shangguan, Tianheng Shi, Rong Xue, Yuxi Zheng, Yijia Weng, Yang You, Daniel Seita, Leonidas Guibas, Sergey Zakharov, Vitor Campagnolo Guizilini, Yue Wang

Session: Planning & Safety & Robustness

Abstract:

We introduce RoLA, a framework that transforms any in-the-wild image into an interactive, physics-enabled robotic environment. Unlike previous methods, RoLA operates directly on a single image without requiring additional hardware or digital assets. Our framework democratizes robotic data generation by producing large numbers of visuomotor robotic demonstrations within minutes from a wide range of image sources, including camera captures, robotic datasets, and Internet images. At its core, our approach combines a novel method for single-view physical scene recovery with an efficient visual blending strategy for photorealistic data collection. We demonstrate RoLA’s versatility across applications such as scalable robotic data generation and augmentation, robot learning from Internet images, and single-image real-to-sim-to-real systems for manipulators and humanoids.

Note: Every effort was made to include all USC Viterbi-affiliated papers. If you believe your work was inadvertently left out, please let us know at ece-comm@usc.edu so we can update the list.

Published on October 3rd, 2025

Last updated on October 3rd, 2025