Photo Credit: Nadezhda Kurbatova/iStock

“What if you had your very own personalized ChatGPT that could serve as your representative and handle trivial daily conversations?” asked Jonathan May, research associate professor in the USC Viterbi School of Engineering’s Thomas Lord Department of Computer Science.

Most dialogue models like ChatGPT are designed to speak to you, in the form of a helpful assistant, rather than speak for you. But if a dialogue model could mimic you, it could be more than an assistant – it could be a personal AI representative, generating high-quality responses in your tone. May and a group of researchers at the USC Information Sciences Institute (ISI), where May is a team leader, have proposed RECAP – a method for guiding a dialogue model to mimic you in conversations by learning from the text you’ve written.

Finding the Right Next Thing to Say… the Way You’d Say It

“A language model like ChatGPT – which is more formally known as a generative pretrained transformer – takes in the current conversation, forms a probability for all of the words in its vocabulary given that conversation, and then chooses one of them as the likely next word,” explained May.
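To make the “likely next word” step concrete, here is a minimal sketch in Python. It is not code from ChatGPT or RECAP: the four-word vocabulary and its scores are invented for illustration, and a real model would score tens of thousands of tokens using a neural network.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - peak) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def choose_next_word(scores, rng=None):
    """Pick the most probable word, or sample one if a random generator is given."""
    probs = softmax(scores)
    if rng is None:
        return max(probs, key=probs.get)
    candidates = list(probs)
    return rng.choices(candidates, weights=[probs[w] for w in candidates], k=1)[0]

# Hypothetical scores a model might assign after the words "It's a great ..."
toy_scores = {"movie": 2.1, "film": 1.7, "waste": -0.5, "boring": -1.2}

probs = softmax(toy_scores)
greedy = choose_next_word(toy_scores)                    # highest-probability word
sampled = choose_next_word(toy_scores, random.Random(0)) # one random draw
```

Always taking the top word gives the same reply every time, while sampling lets less likely words through occasionally. Personalization, in this picture, amounts to shifting those scores toward the words a particular person would use.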

The question these researchers have posed is, can that “likely next word” be in line with a user’s personality?

Adding personality to chatbots isn’t a new idea. Researchers often train dialogue models to match a user’s personality using surveys filled out by users, or by simply repeating previous messages a user has written. The RECAP team took a different approach – looking at how to infer a user’s persona based on just the things they’ve previously written, taking into account both content and style.

May gave the example of how, using RECAP, he would build a chatbot with his own persona: “We specifically created methods that will find text that I have written before that is most relevant to the current conversation, and most likely to help a model come up with the right next thing – the thing that sounds best while also staying on topic.”

You Are What You Write

The team developed a system of underlying language models that helps find that likely next word, using Reddit as the data source for user-written text.

May explained: “You give the model a history of the context of a conversation – everything that’s happened up until now – and ask, ‘return similar sentences from my past.’” But, he pointed out, “similar is vague. Similar can mean ‘on topic for the conversation’; and similar can mean ‘like you.’” In other words, if a chatbot is filling in words for you, it should be similar to you in both content and style.

He explained the team’s methodology: “In some cases, we only looked for sentences that were topic relevant. And then in other cases, only the sentences that were personality relevant. And then we have cases where we took a mix of both.” In the end, the mix worked best.
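The three settings May describes (topic only, personality only, and a mix) can be pictured as a weighted ranking of an author’s past sentences. The Python sketch below is an illustration under stated assumptions, not the RECAP retriever: the word-overlap topic score is a crude stand-in, the `style` numbers are hypothetical values that some separate style model would have to supply, and the names `PastSentence`, `retrieve` and `alpha` are made up for this example.

```python
import re
from dataclasses import dataclass

@dataclass
class PastSentence:
    text: str
    style: float  # hypothetical "sounds like the author" score in [0, 1]

def words(text):
    """Lowercased set of words in a piece of text."""
    return set(re.findall(r"[a-z']+", text.lower()))

def topic_score(context, sentence):
    """Jaccard word overlap: a crude stand-in for topical relevance."""
    a, b = words(context), words(sentence)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def retrieve(context, history, alpha, k=1):
    """Rank past sentences; alpha=1.0 is topic only, alpha=0.0 is style only."""
    def score(p):
        return alpha * topic_score(context, p.text) + (1 - alpha) * p.style
    return sorted(history, key=score, reverse=True)[:k]

# Invented history for one author, with made-up style scores
history = [
    PastSentence("I saw the Interstellar movie at the weekend.", style=0.2),
    PastSentence("It was great!", style=0.9),
    PastSentence("The Interstellar movie was great!", style=0.7),
]
context = "I'm gonna go see the movie Interstellar this weekend."

topic_only = retrieve(context, history, alpha=1.0)[0].text
style_only = retrieve(context, history, alpha=0.0)[0].text
mixed = retrieve(context, history, alpha=0.5)[0].text
```

With these toy numbers, each setting surfaces a different sentence: topic only picks the weekend sentence, style only picks the short exclamation, and the mix picks the one that is both about the movie and in the author’s voice.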

A Chatbot That Knows Your Taste in Movies (And More)

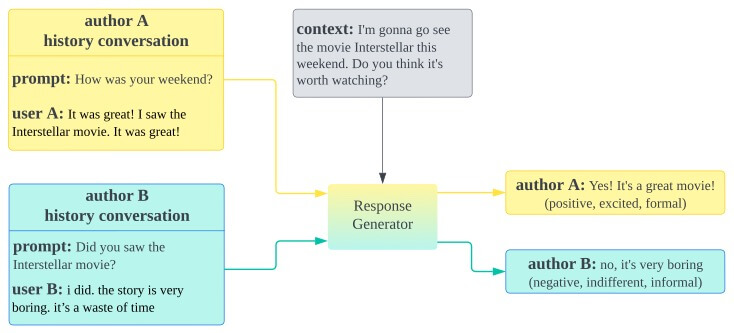

As a simple example, let’s say the model is asked, “I’m gonna go see the movie ‘Interstellar’ this weekend. Do you think it’s worth watching?”

May explained that, given each author’s past conversations about their weekends and the movie “Interstellar,” along with that author’s tone and style, the RECAP model could generate different responses for different authors. “Author A might say, ‘Yes! It’s a great movie!’ and Author B might say, ‘No, it’s very boring.’”

“This is a very simple case where Author A has had a previous conversation with somebody and was asked, ‘How was your weekend?’ And they said ‘It was great! I saw the “Interstellar” movie. It was great!’ And in a similar conversation, Author B said: ‘I did. The story is very boring. It’s a waste of time.’”

The example, as illustrated above, shows that RECAP will generate a response that is similar in content and style to a given author’s previous conversations. This example is not a real response but illustrates how the model would accomplish the task.

What’s Next

The team sees many applications for their research. To start, your autofill might just know you a little better.

“If it’s deployed properly in short and casual communications – think: autofill in your email – it’s not that you’ll notice that it’s your persona, you’ll just be able to hit Tab more often and save yourself some time,” said May. Other potential use cases: having a more satisfying experience when chatting with a chatbot; creating chatbot chat room moderators that relate better to users; authorship identification; privatization; phishing prevention; and more.

“RECAP: Retrieval-Enhanced Context-Aware Prefix Encoder for Personalized Dialogue Response Generation,” written by Shuai Liu, Hyundong J. Cho, Marjorie Freedman, Xuezhe Ma, and May, will be presented as a poster at the 61st Annual Meeting of the Association for Computational Linguistics (ACL’23), which is taking place in Toronto, Canada from July 9 to July 14, 2023.

Published on July 10th, 2023

Last updated on May 16th, 2024