A new processor that uses light instead of electrons could perform its computations with up to 100 times the throughput and energy efficiency of the processors inside existing machine-learning computer systems. That’s according to a new USC and MIT study published in the journal Nature Photonics.

“In addition to vastly improving the scalability of computing power, the optical artificial-intelligence processor increased energy efficiency compared with current technologies,” said Zaijun Chen, a research assistant professor at the USC Viterbi School of Engineering’s Ming Hsieh Department of Electrical and Computer Engineering. “This could be a game-changing factor in the effort to overcome the electronic bottlenecks for the kind of computing architecture needed to run AI systems in the future.”

Chen, the lead author of the paper, performed the work while he was a postdoctoral associate at MIT in the Research Laboratory of Electronics (RLE).

The research examines deep neural networks (DNNs), which power generative-AI applications such as ChatGPT. These networks, however, require enormous amounts of energy and space. Training GPT-4 (the machine-learning model behind ChatGPT) took 25,000 graphics processing units (GPUs) running for 90 days. The website Towards Data Science estimates that this training consumed about 50 gigawatt-hours (GWh) of electricity, enough to power about 2,000 households. Generating that electricity also released about 12,000 tons of carbon dioxide.

Optical neural networks (ONNs), which compute with light, can move information more quickly and efficiently in a smaller space. The research team set out to see whether ONNs would be a better tool, but recognized that existing ONNs have their own limitations, including high energy usage “due to their low electro-optic conversion efficiency, low compute density due to large device footprints and channel crosstalk, and long latency due to the lack of inline nonlinearity,” the authors wrote.

Artist’s view of a VCSEL-based optical neural network with 3D connectivity. (Illustration: Ella Maru Studio)

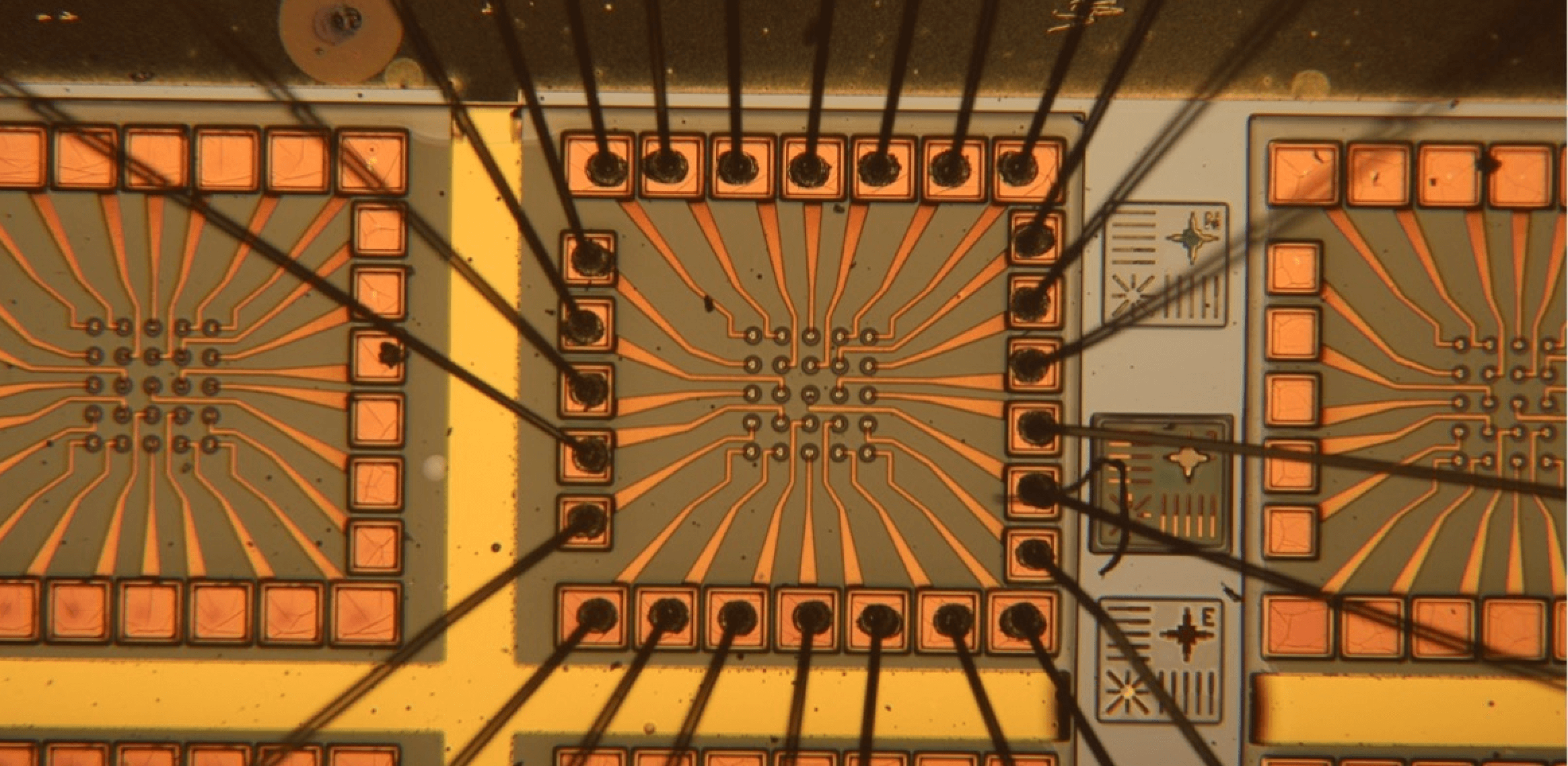

Using an ONN built around an array of vertical-cavity surface-emitting lasers (VCSELs), the scientists were able to overcome those challenges. In the demonstration, more than 400 lasers are integrated on a photonic chip area of 1 square centimeter. Each laser, with a diameter only one-tenth that of a human hair, can convert data from electronic memory to the optical field at a clock rate of 10 GHz (10 billion neural activations per second, in neural-network terms). Remarkably, each conversion consumes only several attojoules (1 attojoule = 10⁻¹⁸ joules, about the energy of 10 photons at visible wavelengths). That is five to six orders of magnitude lower than state-of-the-art optical modulators.
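The figures in this paragraph can be cross-checked with a few lines of arithmetic. The Python sketch below is not taken from the study; it computes the energy of visible photons from E = hc/λ and multiplies an assumed per-conversion energy by the 10 GHz clock rate. The 5 aJ value (standing in for “several attojoules”) and the round count of exactly 400 lasers are illustrative assumptions, and the result covers electro-optic conversion only, not detection, memory access or other overheads.

```python
# Back-of-envelope check of the figures quoted above (a sketch, not from the paper).
# Assumptions: "several attojoules" is taken as 5 aJ per conversion, and
# "more than 400 lasers" as exactly 400; both are illustrative values.

PLANCK_H = 6.62607015e-34      # Planck constant, J*s (exact SI value)
SPEED_OF_LIGHT = 299_792_458   # speed of light in vacuum, m/s (exact SI value)
ATTOJOULE = 1e-18              # 1 aJ = 10^-18 J


def photon_energy_aj(wavelength_nm: float) -> float:
    """Energy of a single photon, E = h*c/lambda, in attojoules."""
    return PLANCK_H * SPEED_OF_LIGHT / (wavelength_nm * 1e-9) / ATTOJOULE


def conversion_power_watts(energy_per_conversion_j: float, clock_hz: float) -> float:
    """Average power one laser spends on electro-optic conversion alone."""
    return energy_per_conversion_j * clock_hz


# Ten visible photons span roughly 3 to 5 aJ, i.e. "several attojoules".
for wavelength in (400, 550, 700):  # violet, green, red (nm)
    print(f"10 photons at {wavelength} nm: {10 * photon_energy_aj(wavelength):.1f} aJ")

CLOCK_HZ = 10e9            # 10 GHz clock rate quoted in the article
N_LASERS = 400             # illustrative lower bound on the array size
ENERGY_J = 5 * ATTOJOULE   # assumed energy per conversion

per_laser_w = conversion_power_watts(ENERGY_J, CLOCK_HZ)
print(f"Conversion power per laser: {per_laser_w * 1e9:.0f} nW")
print(f"Conversion power, whole array: {per_laser_w * N_LASERS * 1e6:.0f} uW")
print(f"Activations per second, whole array: {N_LASERS * CLOCK_HZ:.1e}")
```

Running it prints about 5.0, 3.6 and 2.8 aJ for ten photons at 400, 550 and 700 nm, then about 50 nW of conversion power per laser, 20 µW for a 400-laser array, and 4.0e+12 activations per second across the array.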

The study authors have even greater hopes for future improvement: Beyond the 100-fold leap in both output and energy efficiency, “near-term development could improve these metrics by two more orders of magnitude,” they wrote. The new technology “opens an avenue to large-scale optoelectronic processors to accelerate machine-learning tasks from data centers to decentralized edge devices” like cell phones, the paper states.

About the study

Zaijun Chen of USC, Ryan Hamerly of NTT Research, and Dirk Englund of MIT are the corresponding authors. Other co-authors who also contributed significantly are Alexander Sludds, Ronald Davis, Ian Christen, Liane Bernstein, and Lamia Ateshian of RLE. The VCSEL arrays, the key component of this computing system, were fabricated and provided by Tobias Heuser, Niels Heermeier, James A. Lott, and Stephan Reitzenstein of Technische Universität Berlin.

The study was funded by the U.S. Army Research Office, NTT Research, the National Defense Science and Engineering Graduate Fellowship Program, the National Science Foundation, the Natural Sciences and Engineering Research Council of Canada and the Volkswagen Foundation.

Published on August 8th, 2023

Last updated on March 7th, 2024