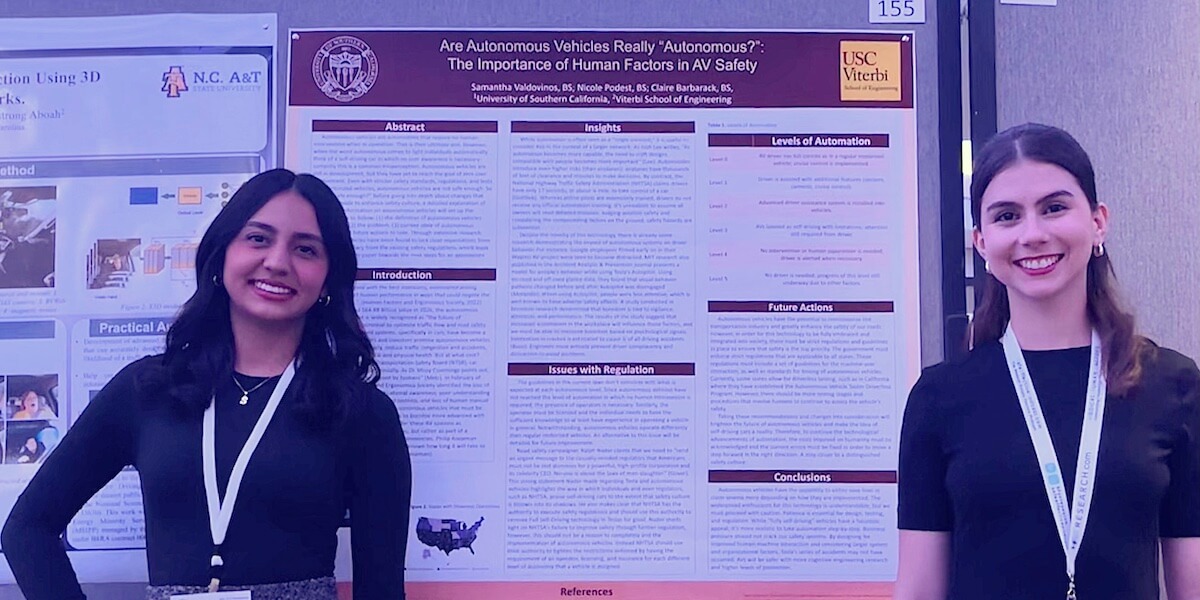

Samantha Valdovinos and Nicole Podest presenting their research at the HFES conference.

Samantha Valdovinos, Nicole Podest, and Claire Barbrack recently presented their research on autonomous vehicle (AV) safety at the Human Factors and Ergonomics Society (HFES) Conference in Washington, D.C., on October 25. Valdovinos and Podest are seniors in the Daniel J. Epstein Department of Industrial and Systems Engineering. Barbrack, a 2023 B.S. ISE alumna, wrote an undergraduate honors thesis on safety surrounding AI and autonomous vehicles and was named the 2023 Daniel J. Epstein Department of Industrial and Systems Engineering Student of the Year.

Valdovinos, Podest and Barbrack completed ISE 370: Human Factors in Work Design, a course taught by Najmedin Meshkati, Professor of Civil and Environmental Engineering, Industrial and Systems Engineering, and International Relations. Meshkati encourages his students to research important issues that affect society and carry public policy implications.

USC Viterbi News spoke with Valdovinos, Podest and Barbrack about their research.

What is your research about?

Autonomous vehicles. What are they? When people hear the words “autonomous vehicles,” they think of self-driving cars, and when self-driving cars come up, they think of Tesla. We, too, used to assume that a Tesla was a self-driving car. Although Teslas may gain that capability in the near future, what people need to know is that these vehicles cannot yet operate without human intervention; they have not reached the goal of zero driver involvement. This has guided our research: informing the public about the limitations of autonomous vehicles like Tesla, the safety precautions drivers need to be aware of, and the standards and regulations that should be improved.

In greater detail, our paper, titled “Are Autonomous Vehicles Really ‘Autonomous?’: The Importance of Human Factors in AV Safety,” covers (1) the definition of autonomous vehicles (background), (2) the problem, (3) the current state of autonomous vehicles and (4) future actions to take. Through our research, we hope to help people understand what autonomous vehicles can and cannot currently do, in order to strengthen the role of human factors in safety culture.

What was the experience like presenting your paper “Are Autonomous Vehicles Really ‘Autonomous?’: The Importance of Human Factors in AV Safety” at the HFES conference?

Presenting our paper was an exciting experience. Human factors and ergonomics experts approached us and asked insightful questions that sparked discussions. We met professionals in the field, professors from several universities and fellow students. We appreciated the opportunity to engage in these conversations and to receive feedback from automation experts. We were glad to hear from others who had conducted similar research; learning from their findings gave us new insight and helped move our research in the right direction. Above all, we had a great time sharing our research and representing the University of Southern California and the Daniel J. Epstein Department of Industrial and Systems Engineering.

What inspires you most about your research?

Soon, autonomous vehicles may be as common as human-driven vehicles are now. This possibility has inspired the direction of our research. Informing people about autonomous vehicles while the technology is still being developed will help society in the future, when autonomous vehicles fill the streets. Our research not only informs people about the current state of autonomous vehicles and their present limitations, but also helps the public understand the background and factors involved in a topic that will only become more relevant with time. It also makes people aware that current safety regulations for autonomous vehicles are not as strict as they need to be, which we hope will guide their improvement for generations to come.

How do you hope your findings will contribute to the field and influence future work?

While we understand how exciting this new technology can be, we hope to spread awareness about the potential dangers of autonomous vehicles when they are not used properly. We want drivers and automotive companies to understand the levels of automation in more depth and to recognize that cars are not simply either autonomous or not autonomous. There are six levels of automation, numbered 0 through 5. We estimate that today's vehicles sit at approximately level 2.5, between level 2 (an advanced driver assistance system is installed in the vehicle) and level 3 (AVs are labeled as self-driving with limitations, and attention is still required from the driver). In other words, a driver assistance system is present, but driving still demands the driver's attention. As a result, it is crucial to implement safety regulations, driver training or even warnings so that drivers understand we have not yet reached the point where cars are fully self-driving. Our technology is changing, and so should the methods by which we handle safety concerns.

Are there any real-world applications or examples you can share that would help illustrate the impact of your findings?

More than seven years ago, on May 7, 2016, Joshua Brown was killed when the Tesla he was in collided with a tractor-trailer. The Tesla had been using Autopilot, a feature of its advanced driver assistance system, which is standard in all new Tesla vehicles. When it comes to who is at fault for the collision, it is difficult to decide whether the Tesla user or the Tesla itself is to blame. Professor Najmedin Meshkati shared his thoughts on this accident and on autonomous vehicles in a short piece in the New York Times and in an extensive interview on the Viterbi website. He said, “We need to ensure we’ve tested the technology before unleashing it on the public. It’s the job of government agencies to scrutinize, and they need to be chronically skeptical and have a chronic unease when it comes to this new safety-sensitive technology and be over-vigilant.”

Published on December 11th, 2023

Last updated on December 11th, 2023