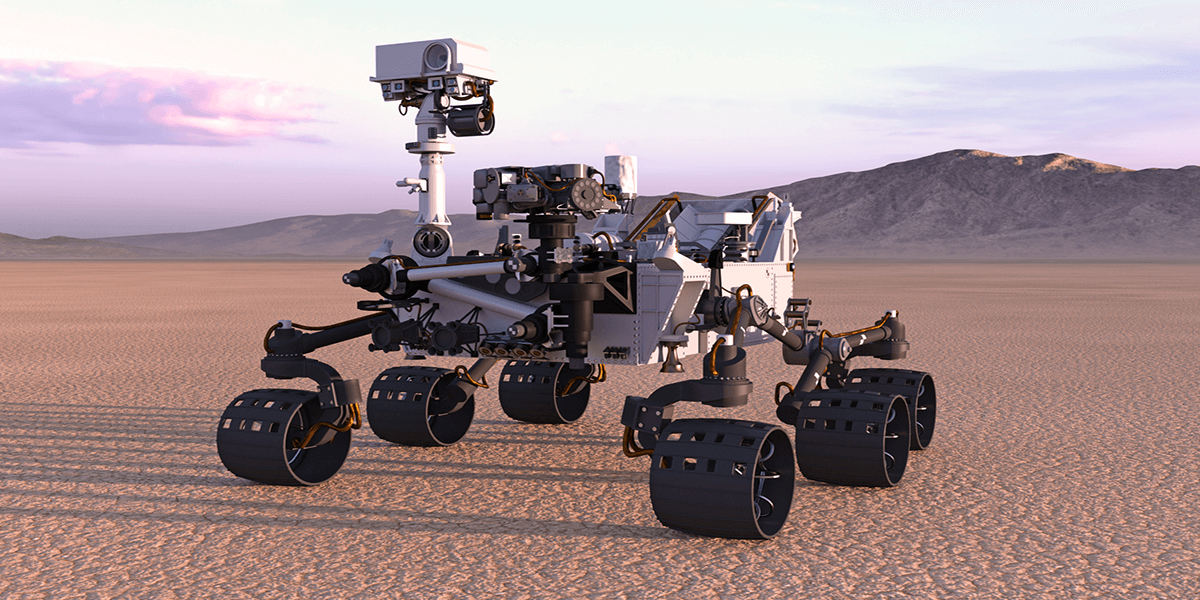

The students’ research imagines a future where rovers could work in teams on remote planets far from home. Photo/iStock.

What if a team of rovers could scour the landscape of an alien planet for water or signs of life completely autonomously, millions of miles from Earth?

Four USC Viterbi students’ award-winning research imagines a near future where this is possible, using an up-and-coming technology called edge computing. This approach, which forgoes centralized servers, allows autonomous robots to process everything they see or do on the spot.

The team’s entry won first place at the 2nd Student Design Competition on Networked Computing on the Edge, an international competition held in conjunction with CPS-IoT Week in May that focused on potential future applications of edge computing. The students demonstrated how a graph convolutional neural network, or GCN, could increase the computing efficiency of robots that do not rely on a central server. In other words, the robots can solve problems or decide what to do much faster by “learning” from previous computations and working with other nearby robots.

“As these robots complete their tasks, they collaborate with each other to do whatever computation is needed,” said Bhaskar Krishnamachari, a professor of electrical and computer engineering and the team’s faculty advisor. “The project focuses on how these robots can best allocate their resources to work together when they are performing complex computations.”

Learning on the edge

The group came together in fall 2021, when Krishnamachari brought together an eager undergraduate student, Daniel D’Souza, and three of his Ph.D. students (Mehrdad Kiamari, Lillian Clark, and Jared Coleman) to tackle a particular facet of computing called task scheduling.

Much like a human, an autonomous robot can break a larger task down into a series of smaller steps, or a task schedule, to figure out what it should prioritize or do first. However, many of these robots’ jobs, such as exploration or military reconnaissance, are too complicated to handle as a simple step-by-step sequence, so the team proposed working with a ‘task graph,’ in which the tasks branch out into a network.
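To make the idea of a task graph concrete, here is a minimal Python sketch. It assumes a made-up five-task graph (`capture`, `denoise`, `detect`, `map`, `plan_path`), made-up per-task costs, and two hypothetical robots, and schedules the graph with a simple greedy list scheduler: tasks are visited in dependency order and each one goes to whichever robot would finish it earliest. This is a conventional heuristic chosen for illustration, not the team’s method, and it ignores the cost of sending data between robots.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
TASK_GRAPH = {
    "capture": set(),
    "denoise": {"capture"},
    "detect": {"denoise"},
    "map": {"capture"},
    "plan_path": {"detect", "map"},
}

# Hypothetical cost (in seconds) of running each task on any one robot.
COST = {"capture": 2, "denoise": 3, "detect": 4, "map": 5, "plan_path": 1}


def list_schedule(graph, cost, robots):
    """Greedy list scheduling: visit tasks in dependency order and place each
    one on the robot where it would finish earliest.

    Communication delays between robots are ignored to keep the sketch short.
    """
    robot_free = {r: 0.0 for r in robots}  # time at which each robot is idle
    finish = {}                             # task -> finish time
    assignment = {}                         # task -> robot
    for task in TopologicalSorter(graph).static_order():
        # A task can start only after all of its prerequisites have finished.
        ready = max((finish[p] for p in graph[task]), default=0.0)
        best = min(robots, key=lambda r: max(ready, robot_free[r]) + cost[task])
        start = max(ready, robot_free[best])
        finish[task] = start + cost[task]
        robot_free[best] = finish[task]
        assignment[task] = best
    return assignment, finish


if __name__ == "__main__":
    assignment, finish = list_schedule(TASK_GRAPH, COST, ["rover_a", "rover_b"])
    for task in sorted(finish, key=finish.get):
        print(f"{task:10s} on {assignment[task]} finishes at t={finish[task]}")
```

Because `detect` and `map` only share `capture` as a prerequisite, the two robots can work on the image branch and the mapping branch in parallel, which is the benefit a graph offers over a single step-by-step list.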

“For real-world applications like genome sequencing, there are several thousands of tasks to do,” explained Kiamari, a Ph.D. student in electrical engineering.

To cut down on the amount of time it takes for a robot to figure out what to do when faced with an extremely complex set of tasks, Kiamari and Krishnamachari developed the GCNScheduler, software that uses a neural network to “learn” how to schedule tasks faster.
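The article does not describe GCNScheduler’s internals, so the sketch below only shows the basic building block its name refers to: one graph-convolution layer in the widely used Kipf and Welling form, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), written in plain Python. Each task node’s new features mix its own features with those of its neighbors, which is how a GCN can take the structure of a task graph into account. The function name `gcn_layer`, the example graph, the features, and the untrained weights are all hypothetical, and edges are treated as undirected for simplicity.

```python
import math


def gcn_layer(adjacency, features, weights):
    """One graph-convolution layer: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adjacency: n x n matrix of 0/1 entries (assumed symmetric here)
    features:  n x f matrix, one feature row per task node
    weights:   f x k matrix of layer weights
    """
    n = len(adjacency)
    f = len(features[0])
    k = len(weights[0])
    # A + I: add self-loops so each node keeps its own features.
    a_hat = [[adjacency[i][j] + (1 if i == j else 0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    # Symmetric normalization so high-degree nodes do not dominate.
    norm = [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]
    # Aggregate neighbor features: (normalized A) @ H.
    agg = [[sum(norm[i][m] * features[m][c] for m in range(n)) for c in range(f)]
           for i in range(n)]
    # Project with W, then apply ReLU elementwise.
    return [[max(0.0, sum(agg[i][c] * weights[c][j] for c in range(f)))
             for j in range(k)]
            for i in range(n)]


if __name__ == "__main__":
    # Hypothetical diamond-shaped task graph: 0 -> 1, 0 -> 2, 1 -> 3, 2 -> 3,
    # stored here as an undirected adjacency matrix.
    A = [[0, 1, 1, 0],
         [1, 0, 0, 1],
         [1, 0, 0, 1],
         [0, 1, 1, 0]]
    H = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 1.0]]  # made-up task features
    W = [[0.6, -0.2], [0.1, 0.9]]                          # untrained, made-up weights
    scores = gcn_layer(A, H, W)
    # Read each row as scores over two robots and pick the higher-scoring one.
    print([row.index(max(row)) for row in scores])
```

With random weights the printed assignment means nothing; a learned scheduler would presumably fit `W` (and stack several such layers) so that the per-task scores reproduce good schedules, which this sketch does not attempt.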

Faster scheduling is ideal for the more advanced functions of an interplanetary drone, such as analyzing images or mapping out a path, according to Clark, a Ph.D. student in electrical engineering.

“The scheduler gets as much as possible out of graph structures, like in the case of image detection,” said Clark. “This is really useful if you’re doing something like looking for signs of life or water melting on another planet.”

Put to the test

The team demonstrated the scheduler with four mobile TurtleBots, small rover-like robots that can run on a simple Raspberry Pi computer. With the GCNScheduler software installed, a bot would stop scheduling tasks once it rolled out of communications range, which at this scale meant somewhere down the hallway.

Signal strength between robot “nodes” fluctuates in real-world conditions, and the experiment was designed to show how GCNScheduler would respond. That ability to adapt is essential for robots programmed to carry out more sophisticated actions, such as surveillance or facial recognition, where they may be constantly on the move or need to run extremely complex computations.
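One way to picture that kind of adaptation is a step that checks each robot’s link quality and moves work off any robot that has dropped out of range. The minimal sketch below assumes hypothetical signal-strength readings in dBm, a hypothetical -80 dBm cut-off, and four hypothetical bots, and simply hands each orphaned task to the least-loaded robot still in range. It is not how GCNScheduler itself responds, which the article does not detail; a fuller system would rerun the whole scheduler so that task dependencies and timing are respected.

```python
# Hypothetical cut-off: links weaker than this count as out of range.
RSSI_THRESHOLD_DBM = -80.0


def robots_in_range(signal_dbm, threshold=RSSI_THRESHOLD_DBM):
    """Return, sorted by name, the robots whose latest reading clears the threshold."""
    return sorted(r for r, dbm in signal_dbm.items() if dbm >= threshold)


def reassign(assignment, signal_dbm, threshold=RSSI_THRESHOLD_DBM):
    """Move tasks off robots that have dropped out of communication range.

    assignment: task -> robot
    signal_dbm: robot -> latest signal strength; robots with no reading
                are treated as out of range.
    Each orphaned task goes to the in-range robot with the fewest tasks,
    breaking ties by robot name.
    """
    alive = robots_in_range(signal_dbm, threshold)
    if not alive:
        raise RuntimeError("no robots in communication range")
    load = {r: 0 for r in alive}
    new_assignment, orphans = {}, []
    # Keep tasks that already sit on a reachable robot.
    for task, robot in assignment.items():
        if robot in load:
            new_assignment[task] = robot
            load[robot] += 1
        else:
            orphans.append(task)
    # Hand each orphaned task to the least-loaded reachable robot.
    for task in orphans:
        target = min(alive, key=lambda r: (load[r], r))
        new_assignment[task] = target
        load[target] += 1
    return new_assignment


if __name__ == "__main__":
    plan = {"capture": "bot1", "denoise": "bot2", "detect": "bot3",
            "map": "bot3", "plan_path": "bot4"}
    readings = {"bot1": -55.0, "bot2": -62.0, "bot3": -91.0, "bot4": -70.0}
    print(reassign(plan, readings))
```

In the usage example, bot3 (at -91 dBm) falls below the cut-off, so `detect` moves to bot1 and `map` moves to bot2, while the tasks already on reachable bots stay put.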

Because autonomous robots are taking on more responsibilities, the scheduler could find applications across many industries, which made combining GCNScheduler with edge computing an obvious choice, according to D’Souza, a computer engineering and computer science student.

“Autonomous robots are going to be used to perform security facial recognition, baggage handling services, coordinating crops, military reconnaissance,” D’Souza said. “They’re popping up in all these various fields, and they’re going to be used more and more in the real world.”

Published on August 16th, 2022

Last updated on October 14th, 2022