Artificial intelligence researcher Paul Cohen is fascinated by the ability of human infants to learn how the physical world works. He hopes to give machines some of this ability, as part of a multipart $4 million research program now underway at the University of Southern California Information Sciences Institute, a program that will attempt to watch computer agents learn elements from the classic On War.

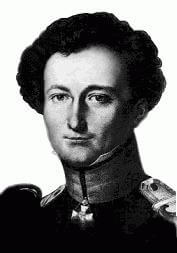

Carl von Clausewitz

More generally, Cohen is trying to give machines the ability babies develop as they mature – the ability to use the relations they see in the physical world as metaphors for description and understanding. “Machines don’t have common sense,” says Cohen, who holds an appointment as a research professor in the Viterbi School department of computer science in addition to his ISI role as deputy director of the institute’s intelligent systems division.

Common sense in artificial intelligence, he explains, refers (among other elements) to the ability to understand how physical objects move and interact in the everyday world: that cups can stand on a table, or fall and break. Such knowledge is second nature to humans but utterly opaque to computers.

In the past, researchers’ attempts “to shovel in the information by hand have had limited success,” says Cohen: after fifteen years of such effort, “to a certain extent, machines can predict behavior of objects, but they still don’t have a causal understanding. They can’t explain what they know.”

This lack in turn inhibits computers’ ability to “plan or do anything,” and limits their ability to communicate with humans, who use their shared experience of the world to give new, abstract meanings to words like “block,” “fall,” “push,” “contain,” “momentum,” “balance,” and many more.

Cognitive theorists call such base concepts “image schemas,” and have, over the past two decades, described more than 40.

“Image schemas are general-purpose representations that impose structure on our experiences. Image schemas represent perceptual properties and relationships such as locations, movement, and verticality; they also capture elementary physical operations and structures, such as containment and collection,” says North Carolina State University computer scientist and theorist Robert St. Amant, a specialist in the topic, whose work Cohen’s project builds upon.

St. Amant, working at ISI on a year’s sabbatical from North Carolina, has developed a way for computers to use such comparisons — an image schema language that can be used to aid problem solving in practical reasoning domains, in what he calls a “metaphorical” manner.

A key element is “Image Schema Language,” a programming strategy he began developing early in 2005 with Cohen and Cohen’s wife, cognitive psychologist Carole Beal, who is also a collaborator on the current project.

The diagram shows the representation of the image schema for “blockage” in ISL.

The ISL underlies the project, in which “agents” – individual artificial intelligence entities – will be set loose in virtual environments, exploring them and, the team hopes, learning to understand them well enough not just to make plans for action but also to explain their plans to researchers.

The process begins with perception. The agents see other objects in their world (such worlds can be anything from a simple grid to a chessboard to a military battlefield). “They perceive distances to objects, whether or not they are in contact with objects, and other geographical locations,” says St. Amant.

“This information is instantaneous and static,” explains St. Amant. “But objects move and interact with each other, and so the agent must have the ability to track these relationships in time,” as what are called “dynamic schemas” in the ISL. Some of these relationships, he explains, combine to form recognizable, discrete steps, or states, over time.
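The step from static percepts to states over time can be illustrated with a minimal Python sketch. It is not ISL, whose syntax is not shown here; the names `Percept`, `perceive`, and `track`, the contact radius, and the state labels are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Percept:
    """One instantaneous, static observation of another object (hypothetical)."""
    distance: float
    in_contact: bool


def perceive(agent_xy, object_xy, contact_radius=1.0):
    """Build a static percept: distance to the object and whether it is touching.

    The contact radius is an assumed threshold, not a value from the project.
    """
    distance = math.dist(agent_xy, object_xy)
    return Percept(distance=distance, in_contact=distance <= contact_radius)


def track(percepts):
    """Turn consecutive static percepts into discrete states over time."""
    states = []
    for before, after in zip(percepts, percepts[1:]):
        if after.in_contact:
            states.append("contact")
        elif after.distance < before.distance:
            states.append("approaching")
        elif after.distance > before.distance:
            states.append("receding")
        else:
            states.append("steady")
    return states


# An object moves toward a stationary agent, touches it, then moves away.
percepts = [perceive((0, 0), xy) for xy in [(5, 0), (3, 0), (1, 0), (4, 0)]]
print(track(percepts))  # ['approaching', 'contact', 'receding']
```

Each percept on its own says nothing about motion; only the comparison between consecutive percepts yields states such as "approaching," which is the role the article assigns to dynamic schemas.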

Finally, he says, “if the agent has goals, it must take specific actions that will reach its desired state.” The steps are called an “action schema.”

Concretely, St. Amant talks about the agent perceiving a cat, and developing a strategy to contact the cat, by moving in the cat’s direction, changing direction if the cat moves. (See diagram).
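The cat example can be sketched as a simple pursuit loop on a grid. This is an illustrative assumption about how such an action schema might behave, not the project's implementation; `step_toward`, `pursue`, the grid world, and the rule that contact means sharing a cell are all hypothetical.

```python
def step_toward(agent, target):
    """Move one grid cell along each axis toward the target (hypothetical rule)."""
    ax, ay = agent
    tx, ty = target
    dx = (tx > ax) - (tx < ax)  # -1, 0, or +1
    dy = (ty > ay) - (ty < ay)
    return (ax + dx, ay + dy)


def pursue(agent, cat_path, max_steps=50):
    """Follow a moving cat and return the agent's trajectory.

    cat_path lists the cat's position at each time step; once the list
    runs out, the cat is assumed to stay at its last position.
    """
    trajectory = [agent]
    for step in range(max_steps):
        cat = cat_path[min(step, len(cat_path) - 1)]
        if agent == cat:  # contact: the goal state has been reached
            break
        agent = step_toward(agent, cat)  # re-aim at the cat's current position
        trajectory.append(agent)
    return trajectory


# The cat drifts upward while the agent closes in, so the agent changes heading.
print(pursue((0, 0), [(3, 0), (3, 1), (3, 2)]))
# [(0, 0), (1, 0), (2, 1), (3, 2)]
```

Because the target is re-read on every step, the agent's direction changes whenever the cat moves, which is the behavior the example describes.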

The record of the motions perceived over time in such a transaction forms a graphable pattern in the agent’s memory. The plan to endow the agents with metaphor-understanding ability is based on these patterns: if similar time-based patterns of excitation occur in other contexts, the agent will use the similarity of its subjective experience to compare the processes.
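One simple way to picture comparing time-based patterns across contexts is to rescale two recorded traces and measure how closely their shapes agree. The article does not say how the project measures similarity, so the sketch below swaps in a plain stand-in: min-max normalization followed by a mean absolute difference. The function names and the sample traces are hypothetical.

```python
def normalize(trace):
    """Rescale a non-empty trace to the range 0..1 so only its shape matters."""
    lo, hi = min(trace), max(trace)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in trace]  # a flat trace has no shape to compare
    return [(value - lo) / span for value in trace]


def similarity(trace_a, trace_b):
    """Return 1.0 for identical shapes, lower values for differing shapes.

    Assumed stand-in measure: one minus the mean absolute gap between the
    normalized traces. Both traces must have the same length.
    """
    if len(trace_a) != len(trace_b):
        raise ValueError("traces must have the same length")
    a, b = normalize(trace_a), normalize(trace_b)
    mean_gap = sum(abs(x - y) for x, y in zip(a, b)) / len(a)
    return 1.0 - mean_gap


# Distance to a cat while chasing it, in grid cells (made-up numbers).
chase = [10, 8, 6, 4, 2, 0]
# Gap between two units on a battlefield, in meters (made-up numbers).
advance = [500, 400, 300, 200, 100, 0]
# Gap while one unit withdraws (made-up numbers).
retreat = [0, 100, 200, 300, 400, 500]

print(similarity(chase, advance) > similarity(chase, retreat))  # True
```

The chase and the advance differ in scale and setting but share the same closing-gap shape, so they score as similar, while the retreat scores lower. A fuller treatment would also need to handle traces of different lengths and speeds, which this sketch does not attempt.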

While the relations perceived are subjective for the agent, the agent will be able to “explain” the basis for its making of comparisons – and decisions based on the comparisons – to researchers.

The hope of the researchers is that the agents will learn to appropriately put together such schemas into “Gists” which they can then use to more effectively act in the context of their environments.

“While on one hand we are just teaching our agent to do what a baby cat naturally does in learning about its environment, our hope is that, unlike the cat, the agent once trained will be able to explain what it’s doing.”

The environment that the agents will be trying to understand and explain is military: troops and units moving in a battlefield environment. The German military theorist Carl von Clausewitz famously used the language of physics and mechanics to describe military problems, putting such ideas as friction, momentum, and impact to use in understanding what happens in battles.

With appropriately mapped schemas, the agent players, it’s hoped, will learn Clausewitzean lessons – and be able to explain them.

The overall project consists of four separate efforts, funded by DARPA and the NRL, that will coordinate toward this end.

Published on January 10th, 2006

Last updated on June 10th, 2024