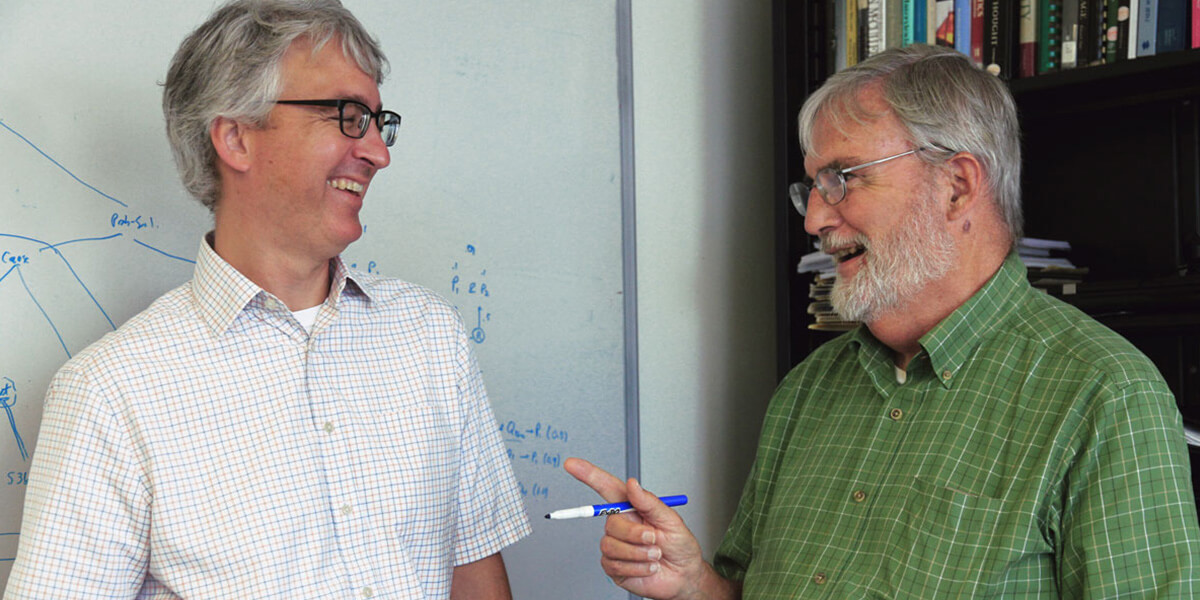

Fifteen years in the making, a new book by ICT’s Andrew Gordon (left) and ISI’s Jerry Hobbs, dubbed “a computer’s guide to humans,” hits the shelves September 7. Photo credit/Caitlin Dawson

Computers can outperform the greatest minds in many challenges, but while new approaches in machine learning are making huge strides, from classifying images to understanding languages, machines still fall short in other areas.

Computers may excel at chess and at solving complex computations, but when it comes to understanding the pain of heartbreak or recognizing the emotional power of a Rothko painting, humans still come out on top.

But will that always be the case?

In a new book, dubbed “a computer’s guide to humans,” USC Information Sciences Institute chief scientist Jerry Hobbs and Institute for Creative Technologies director for interactive narrative research Andrew Gordon provide a linguistic framework to help computers understand our mysterious human ways, from emotions and beliefs to planning and memory.

Fifteen years in the making, A Formal Theory of Commonsense Psychology: How People Think People Think will be published by Cambridge University Press on September 7, 2017.

Hobbs and Gordon hope this in-depth, ambitious exploration of human commonsense reasoning will serve as a foundation for the development of human-like artificial intelligence.

“At the moment, the computer interface is your desktop, but you want it to be more like a companion,” says Hobbs. “In order to do that, the computer needs to know something about you and your inner mental life. That’s what we hope this book will address.”

Cogito ergo sum?

If we want to create the friendly, co-operative robots of science fiction (think: C-3PO), we need to first equip them with a fundamental feature almost completely missing from current AI systems: theory of mind.

Otherwise known as “commonsense psychology,” theory of mind refers to the ability to attribute mental states such as beliefs, desires, goals and intentions to others, and to understand that these states are different from our own.

“The main question for me is how do we use our commonsense knowledge to interpret language. Once a computer understands the language, it can do lots of things: question and answer, translation, building knowledge structures,” says Hobbs, a renowned natural language processing expert.

“As far as something like Siri goes, there are a lot of capabilities built into it, but all it’s doing is matching patterns – you can’t do anything novel.”

Hobbs has been working on representing knowledge in artificial intelligence since the 1970s, decades before Siri was even a glint in Steve Jobs’ eye.

In 2002, when he joined ISI, he and Gordon embarked on creating what they hoped would be the largest logical formalization of commonsense psychology ever written.

First, Gordon collected 372 strategies in 10 different domains including politics, interpersonal relationships and artistic performance, and interviewed experts in each field. Then, he reduced those strategies to a controlled vocabulary of about 1,000 items.

The researchers found that two-thirds of the items were concepts in commonsense psychology.

“That gave us a target to work towards,” says Hobbs. “Then, we sat down to encode the knowledge that his study indicated we would need.”

The result is a collection of 1,400 axioms of first-order logic, or concepts, organized into 29 commonsense psychology theories and 16 background theories.

Topics include: knowledge management, memory, goals, plans, decisions, causes of failure, mind-body interaction, scheduling and emotions.

“We’re encoding the knowledge that a computer would need to have to participate in society, namely about people’s psychology,” says Hobbs. “How we think about and describe our own mental life, but also how we define non-psychological concepts, like causality and time.”

Hobbs estimates that a computer would need to know and understand around 10 million of these facts, or axioms, in order to be able to mimic human-like intelligence.

“If we do the first 10,000 carefully by hand, we’ll have a lot of models for the computer to learn the rest automatically,” he says.

While it’s unlikely that robots would innately develop emotions, says Hobbs, it could be possible to simulate empathy and other feelings.

“Computers are not going to feel emotions, but they ought to be able to fake them; after all, we often do,” says Hobbs. “I do think one day we’ll be able to carry on good conversations with our computers.”

Published on September 7th, 2017

Last updated on May 20th, 2021