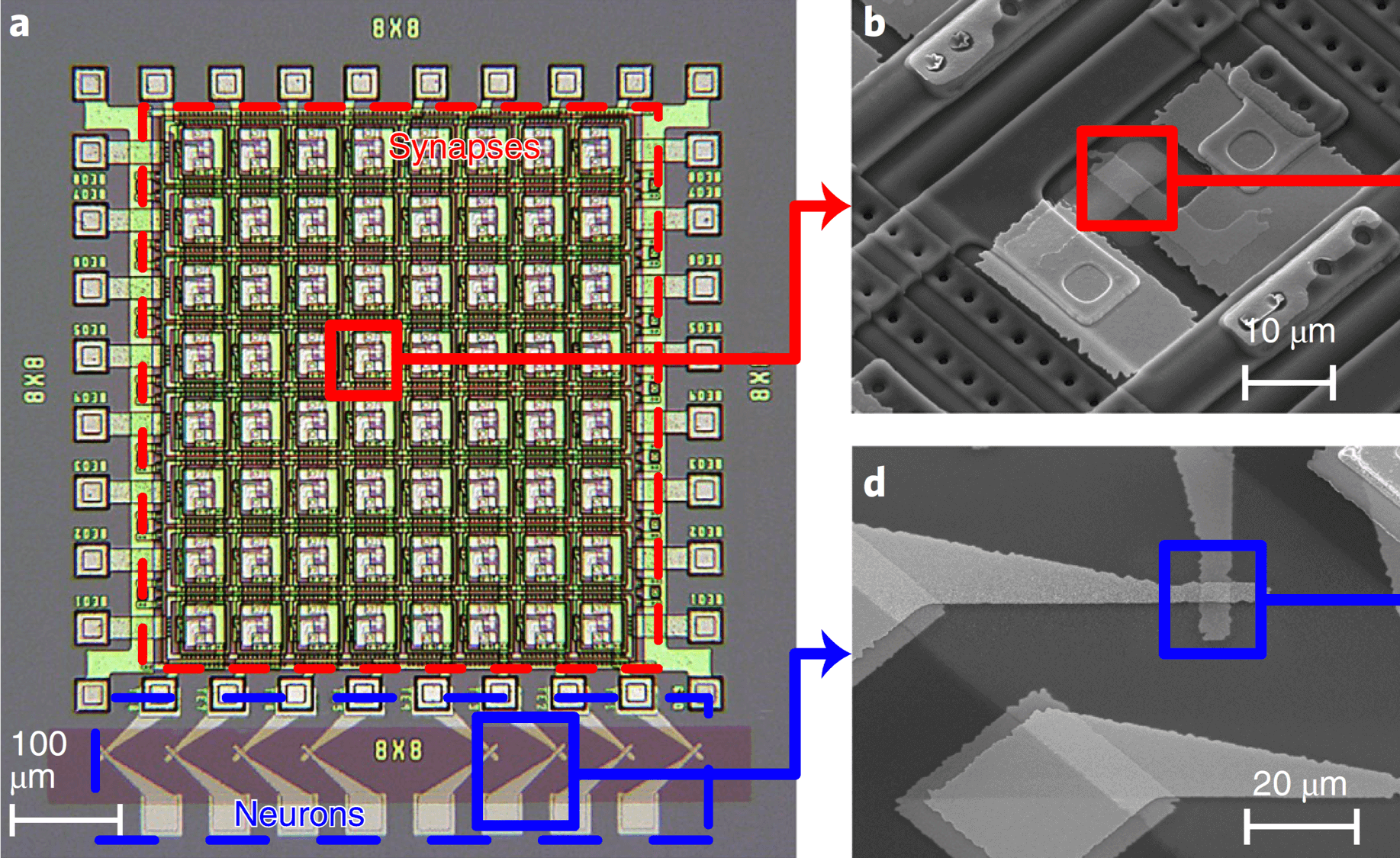

Neurons and synapses in a memristor neural network (PHOTO/Joshua Yang)

In 2016, AlphaGo became the first computer program to defeat a world champion in Go — a Chinese board game that requires multiple levels of strategic thinking. With an astonishing 10 to the power of 170 possible board configurations, the game of Go is a googol times more complex than chess, according to DeepMind, the Google-acquired artificial intelligence company that developed AlphaGo.

Less well known is how much energy AlphaGo consumes. Just 72 hours of gameplay can draw as much power as 12,760 human brains running together, and run up a princely electricity bill of about $3,000.

Engineers continue to build state-of-the-art technologies that can execute extraordinary tasks at a human-like level. The trade-off: inefficiency, owing to their often large size and massive energy requirements. As the age of big data advances, the goal is to build electronic devices that let these brain-like computers solve increasingly complex problems using as little energy and physical space as possible.

USC Viterbi’s Joshua Yang builds such devices.

Yang, a professor of electrophysics in the Ming Hsieh Department of Electrical and Computer Engineering, focuses on creating electronic devices that can compute at extremely high levels of intelligence and extremely low energy levels.

“Our current computing system, which is based on silicon technology, is powerful in some tasks. It just wasn’t designed to handle the huge amount of data we face in computing today,” Yang said. “In order to support a new architecture that is more efficient in processing big data, change needs to happen at the device level.”

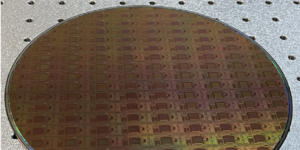

In the short term, Yang’s research will contribute to the development of computing systems to power smarter, faster, more energy-efficient machines such as self-driving cars or robots that do all of your chores. His team will achieve this by fabricating electrical chips, based on devices called memristors, with increased memory and computing capabilities. These chips will be embedded into the new computer systems.

“It wouldn’t be practical for a robot that folds your laundry to weigh three tons or run up your electricity bill tenfold,” Yang said. “You’d want it to separate your whites from your colors and your khakis from your jeans in two minutes.”

In the long term, with another flavor of memristors that can emulate synapses and neurons, Yang’s work will contribute to the research being done by other scientists and neurologists to better understand how the brain functions.

Yang’s research team comprises material scientists, computer architects, machine learning algorithm experts, and neuroscientists at USC. Together, they design and build brain-inspired computing systems, or neural networks, with special “neural processing units” to process video with orders of magnitude higher speed and lower energy consumption than the CPUs and GPUs our computers use today.

Neural networks are the closest adaptation to a human brain that computing has seen. Through extensive processes of trial and error, scientists can train them to model human behaviors by emulating the biological neural networks present in humans. In this process of so-called “supervised learning,” the neural network receives an input, such as an image, from a researcher, then produces either a correct or an incorrect output. The researcher can then algorithmically modify the network to yield a different output, ideally a correct one. However, this training process is still fairly inefficient.
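To make the trial-and-error loop concrete, here is a minimal sketch of supervised learning with a single artificial neuron (a perceptron). It is a toy illustration, not the method used by Yang's team; the logical-OR dataset, the learning rate, and the number of training passes are all assumptions chosen for the example.

```python
# Toy supervised learning: one artificial neuron (a perceptron) learns logical OR.
# The dataset, learning rate, and epoch count are illustrative assumptions.

def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs exceeds zero, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    """Nudge the weights whenever the output disagrees with the label."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            # error is 0 for a correct output, +1 or -1 for an incorrect one
            error = label - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Each example pairs an input with the "correct answer" supplied by a supervisor.
OR_EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train(OR_EXAMPLES)
outputs = [predict(weights, bias, inputs) for inputs, _ in OR_EXAMPLES]
print(outputs)
```

Every correction in the loop depends on a label provided from outside, which is the supervision the article refers to.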

“We are designing computing systems that are smart enough to recreate this learning behavior unsupervised — that is, without the help of a researcher. Only a new kind of device can make this possible,” Yang said.

This device, which Yang and his research team build, is called a memristor. It was theorized by Leon Ong Chua in 1971 as the fourth fundamental electronic component, after the resistor, the capacitor, and the inductor. What makes the memristor special is that its resistance can be programmed and subsequently stored as memory, even if the device loses power.

“Memristors are so small, more than 10,000 of them could fit on the diameter of one strand of hair,” Yang said. “With the ability to fit so many devices on such a small area, a lot of information can be stored.”

Because their resistance can be changed to store memory, memristors act like the synapses that change connection patterns between the neurons in our brain when we receive new information. When combined, these devices form large arrays that make up neural networks, which will soon learn on their own, without Yang’s help.
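One common way to picture such an array, which the article itself does not spell out, is a grid in which each memristor's programmed resistance plays the role of a synaptic weight. The sketch below assumes idealized devices that obey Ohm's law and a simple row-and-column layout; the `Memristor` class, the `column_currents` helper, and all resistance and voltage values are hypothetical and chosen only for illustration.

```python
# Idealized memristor array: programmed resistances act like synaptic weights.
# The class, helper, and all numeric values here are hypothetical.

class Memristor:
    """A resistance that can be programmed and is then retained."""

    def __init__(self, resistance_ohms):
        self.resistance_ohms = resistance_ohms

    def program(self, resistance_ohms):
        """Store a new resistance, analogous to strengthening or weakening a synapse."""
        self.resistance_ohms = resistance_ohms

    def current(self, voltage):
        """Ohm's law: current = voltage / resistance."""
        return voltage / self.resistance_ohms


def column_currents(array, row_voltages):
    """Sum the currents that each column collects from every row."""
    n_cols = len(array[0])
    return [
        sum(array[r][c].current(v) for r, v in enumerate(row_voltages))
        for c in range(n_cols)
    ]


# A 2x2 array: rows carry input voltages, columns collect output currents.
array = [
    [Memristor(1000.0), Memristor(2000.0)],
    [Memristor(2000.0), Memristor(1000.0)],
]
inputs = [1.0, 0.5]  # volts applied to the two rows

before = column_currents(array, inputs)

# "Learning" here means reprogramming one device; the new value is retained.
array[0][0].program(500.0)
after = column_currents(array, inputs)
print(before, after)
```

In this idealized picture, each column current is a weighted sum of the inputs, the same operation an artificial neuron performs, and changing a stored resistance changes the weight.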

Yang’s research is funded by numerous government agencies and institutions, including the National Science Foundation, the Defense Advanced Research Projects Agency, the Air Force Office of Scientific Research, the Intelligence Advanced Research Projects Activity, and the Air Force Research Laboratory. He also receives funding from Hewlett-Packard Laboratories, where he worked as a research scientist for six years before returning to academia.

“I really enjoy building tangible things that improve people’s quality of life, which is why I loved working in industry, but I missed the autonomy of academic research,” Yang said. “That’s what led me to a professorship at the University of Massachusetts Amherst and, ultimately, USC Viterbi.”

Next semester, Yang will teach a course called “Post-CMOS Material and Devices,” where he will elaborate on intelligent materials and devices beyond silicon technologies and what is possible for the new age of efficient computing with them.

“Sharing ideas with brilliant engineering students is an exciting privilege, and I’m thrilled to be doing so at the esteemed USC Viterbi alongside renowned peers,” he said.

Published on November 4th, 2020

Last updated on April 8th, 2021